More than a year ago I discovered Kong which, at the time, was a very lightweight API gateway technology that you could easily deploy and run yourself by using Docker.

Thoughtworks Technology Radar started following Kong in late 2017 and they already suggested back then that enterprises have a serious look at it.

In December, Kong 1.0 was made available to the general public. This version provides more capabilities than just an API gateway. With this feature-rich version now available, this is the perfect time to have a closer look at what it offers and how we can use it.

But first, what is an API gateway?

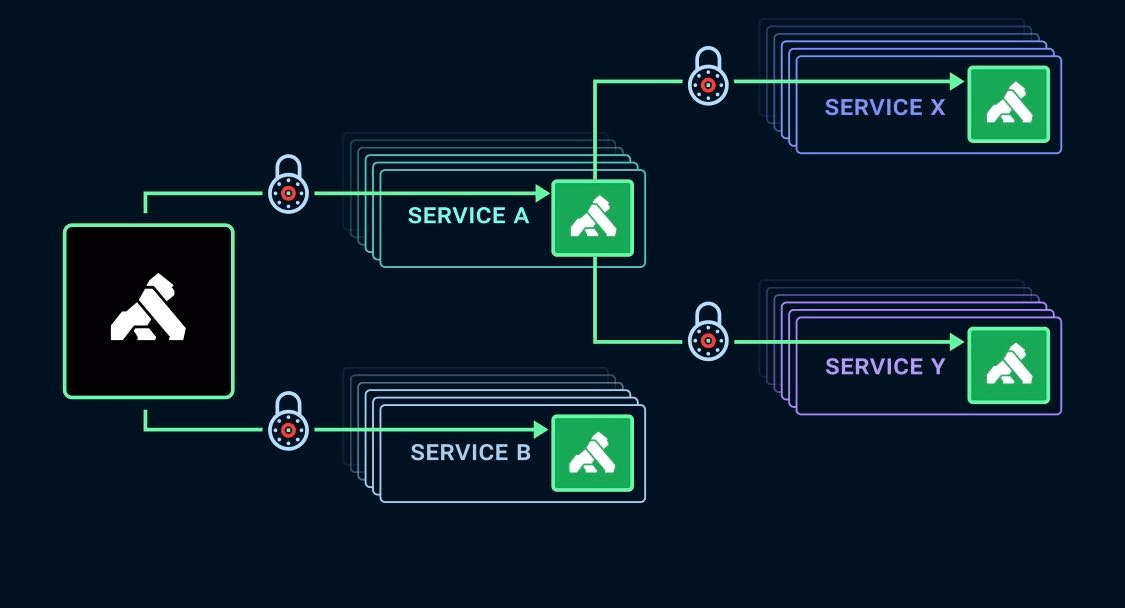

An API gateway allows consumers to call operations on an API. In reality, however, they are only calling a façade which acts as a reverse proxy on steroids in front of upstream APIs.

The gateway is in charge of forwarding all the traffic to the physical API, also known as the upstream API, and returning the responses to the consumers.

However, the gateway provides more functionality than just traffic routing – it also allows you to handle service authentication, traffic control such as rate limiting, request/response transformation, and more.

The beauty of API gateways is that they allow you to align all your APIs, without having to change anything on the physical API.

For example, you can expose your APIs to your consumers by using key authentication, while the API gateway uses mutual authentication with the upstream system.
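To make the façade idea a bit more tangible, here is a minimal sketch of what a gateway conceptually does with key authentication: it validates the consumer's key, strips it, and forwards the call to the upstream API. This is not how Kong is implemented. The upstream URL, the key table, and the `handle` function are all hypothetical, and the mutual authentication towards the upstream is left out to keep it short.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# Hypothetical values, for illustration only.
UPSTREAM_BASE_URL = "https://orders.internal.example"
API_KEYS = {"key-123": "mobile-app"}  # API key -> consumer name


@dataclass
class Request:
    method: str
    path: str
    headers: Dict[str, str]


def handle(request: Request) -> Tuple[int, Optional[str], Dict[str, str]]:
    """Return (status, upstream URL, headers to forward) for one request."""
    consumer = API_KEYS.get(request.headers.get("apikey", ""))
    if consumer is None:
        # Unknown or missing key: reject at the gateway, never reach the upstream.
        return 401, None, {}

    # Strip the consumer-facing credential and tell the upstream who is calling.
    forwarded = {k: v for k, v in request.headers.items() if k != "apikey"}
    forwarded["X-Consumer-Username"] = consumer
    return 200, UPSTREAM_BASE_URL + request.path, forwarded
```

The upstream API never sees the consumer's key, which is exactly why you can change how consumers authenticate without touching the physical API.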

What is Kong?

Kong, previously known as Kong Community Edition (CE), is an open-source, scalable API gateway technology initiated by Kong Inc and backed by a growing community.

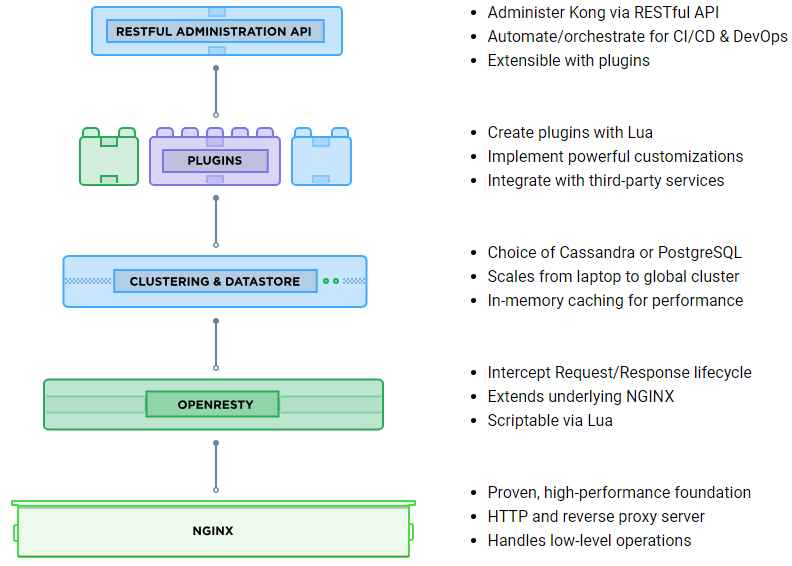

The gateway is built on top of NGINX and can be extended further with open-source plug-ins, provided either by Kong themselves or by the community, or you can write your own plug-ins in Lua.

Once the gateway is running, it will expose two endpoints:

- a proxy endpoint where consumers can send all their traffic,

- a management endpoint, also known as Kong Management API, to configure the gateway itself.

Administrators can use the Admin REST API or CLI to configure the gateway which allows them to fully script repetitive tasks. However, there is no out-of-the-box support for infrastructure-as-code to manage your gateway.
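As a rough sketch of what scripting against the Admin API can look like, the snippet below builds the two calls that register an upstream API as a Service and expose it through a Route. It assumes the Admin API listens on `http://localhost:8001` and that the `/services` and `/services/{name}/routes` endpoints accept these fields, so verify both against the Admin API reference for your version. The `orders` service and its URL are made up, and the calls are only built, not sent.

```python
import json
from urllib import request

ADMIN_URL = "http://localhost:8001"  # assumption: default Admin API address


def build_call(path: str, payload: dict) -> request.Request:
    """Build (but do not send) a JSON POST against the Admin API."""
    return request.Request(
        url=ADMIN_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def register_api(name: str, upstream_url: str, public_path: str) -> list:
    """Return the two calls that expose an upstream API behind the gateway."""
    return [
        # 1. Describe the upstream API as a Service.
        build_call("/services", {"name": name, "url": upstream_url}),
        # 2. Attach a Route so the proxy endpoint knows what to forward to it.
        build_call(f"/services/{name}/routes", {"paths": [public_path]}),
    ]


if __name__ == "__main__":
    # Hypothetical upstream, for illustration only.
    for call in register_api("orders", "http://orders.internal:8080", "/orders"):
        print(call.get_method(), call.full_url, call.data.decode("utf-8"))
        # To apply it against a running gateway: request.urlopen(call)
```

Keeping the request building separate from the sending makes it easy to review or dry-run a script before it touches a real gateway.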

Every gateway is backed by a data store that contains all the relevant configuration which can be stored in Apache Cassandra or PostgreSQL. This is also where Kong shines as it is a cluster-based technology that allows you to easily scale out by adding more nodes, but it still only requires one data store making it easier to manage.

Here is a bird’s-eye overview of the gateway stack:

Running Kong

Given Kong is a platform-agnostic technology, you can run it on a variety of official or community supported platforms wherever you want – be it on-premises, hybrid or in the cloud.

One of those options is to run Kong as a Docker container. Personally, I love this approach and consider it a big differentiator, because it allows me to run it locally the same way as we will run it in production on a cloud platform of choice. This allows developers to test all their changes locally before rolling them out.

However, as we’ve seen in the gateway stack, Kong needs to have a data store where it can store metadata and gateway configurations. This means that we need to provide either an Apache Cassandra or a PostgreSQL instance which is close to our gateway.

Most cloud vendors have support for one of these which makes it easier to get started, but it’s still a dependency that we need to monitor & manage.

Out-of-the-box features

So we know that Kong is an API gateway and that we need to run it ourselves, but what does it bring out-of-the-box?

- Simple Deployments – Container-based and easy to deploy wherever we want, provided that we also have a data store co-located.

- Flexible gateway routing – You can fully configure how the gateway should route your traffic by using Routes. These define if traffic should be routed by Host header, method, URI path or a combination of those. (docs | example)

- Open-Source Plug-in Ecosystem – A rich collection of plug-ins which provide the capability to use built-in features from Kong Hub in a variety of areas: Authentication, Traffic Control, Analytics, Transformations, Logging, Serverless. (More later on)

- Load Balancing – Besides traditional DNS-based balancing, Kong provides a Ring-based balancer where you can either use round-robin or a customized hash-based balancer following the Ketama principle. In the case of hash-based balancing, it is up to the users to define if they want to balance based on a hash of consumer, ip, header, or cookie. (docs)

- Ring-based load balancing also performs health checks and uses circuit breakers.

- Community Support
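To illustrate the hash-based balancing mentioned in the list above, here is a simplified consistent-hash ring in the spirit of Ketama. It is a sketch of the general technique, not Kong's actual balancer: the `HashRing` class, the MD5-based hash, and the number of points per target are my own assumptions, and the target addresses are made up.

```python
import bisect
import hashlib
from typing import Dict, List, Tuple


class HashRing:
    """Simplified Ketama-style consistent-hash ring."""

    def __init__(self, targets: Dict[str, int], points_per_weight: int = 40):
        # Every target gets several points on the ring, proportional to its weight.
        self._ring: List[Tuple[int, str]] = []
        for target, weight in targets.items():
            for i in range(weight * points_per_weight):
                self._ring.append((self._hash(f"{target}-{i}"), target))
        self._ring.sort()
        self._keys = [point for point, _ in self._ring]

    @staticmethod
    def _hash(value: str) -> int:
        # First 4 bytes of the MD5 digest as an unsigned 32-bit position.
        return int.from_bytes(hashlib.md5(value.encode("utf-8")).digest()[:4], "big")

    def pick(self, hash_input: str) -> str:
        """Pick the target owning the first ring point after the input's hash."""
        index = bisect.bisect(self._keys, self._hash(hash_input)) % len(self._ring)
        return self._ring[index][1]


if __name__ == "__main__":
    ring = HashRing({"10.0.0.1:8080": 1, "10.0.0.2:8080": 1, "10.0.0.3:8080": 1})
    # The hash input could be the consumer, client IP, a header or a cookie value.
    print(ring.pick("203.0.113.7"))
```

The nice property of this approach is that the same input always lands on the same target, and removing a target only moves the inputs that were assigned to it.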

I was pretty impressed with what Kong delivers for a free product – it’s super easy to deploy, comes with an entire ecosystem of plugins and offers flexible routing! However, I’m typically working with enterprises who need more than this.

Things I thought were lacking were:

- A developer portal where customers can browse the APIs that we expose. This is the biggest downside for me.

- An administration portal where we can monitor and configure everything. APIs are great for automation, but it always helps if you have a UI for operations and troubleshooting.

- Analytics of how our APIs are being used, as this is crucial to gain insights into your system. This can help you optimize your platform by tweaking your throttling, diagnosing issues, etc.

And this is where Kong Enterprise comes in! We’ll discuss this in a second.

An ecosystem of plugins

One of the core components of Kong is the ecosystem of plugins – they provide the extensibility to install additional components that enrich your APIs with additional capabilities and extend your API landscape.

These plugins are built and maintained either by Kong Inc or by the community, providing you with an easy way to extend your APIs by installing scoped units of features. For example, not everybody needs rate limiting, but if you do, you can easily install a plugin for that.

Plugins also make it easy to integrate Kong with a 3rd party service so that you can, for example, trigger an Azure Function, expose metrics for Prometheus or log requests to loggly.com.

A plugin can also be installed on different levels in the Kong object structure, such as only for a specific route or consumer, or even globally so that it applies to everything and everybody.
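As a hedged sketch of what these levels could look like when scripted, the helper below builds the request body for enabling a plugin either globally or scoped to a route or consumer. I'm assuming the Kong 1.0 convention of nested `route.id` and `consumer.id` references and the `minute` setting of the rate-limiting plugin, so double-check both against the plugin documentation. The `plugin_payload` helper and the IDs are hypothetical.

```python
from typing import Optional


def plugin_payload(
    name: str,
    config: dict,
    route_id: Optional[str] = None,
    consumer_id: Optional[str] = None,
) -> dict:
    """Build the body for enabling a plugin; no scope means it applies globally."""
    payload = {"name": name, "config": config}
    if route_id is not None:
        payload["route"] = {"id": route_id}  # only for traffic on this route
    if consumer_id is not None:
        payload["consumer"] = {"id": consumer_id}  # only for this consumer
    return payload


if __name__ == "__main__":
    # Global default: 60 requests per minute for everything and everybody.
    print(plugin_payload("rate-limiting", {"minute": 60}))
    # Stricter limit on a single route (placeholder ID).
    print(plugin_payload("rate-limiting", {"minute": 5}, route_id="<route-id>"))
```

A body like this would then be posted to the Admin API's plugins endpoint.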

Kong Hub, the Kong plugin store if you will, consolidates official and open-source plugins, which are grouped in the following categories:

- Authentication – Protect your services with an authentication layer

- Security – Secure your API traffic against malicious actors

- Traffic Control – Manage, throttle, and restrict inbound and outbound API traffic

- Serverless – Invoke serverless functions via APIs

- Analytics & Monitoring – Visualize, inspect, and monitor APIs and microservice traffic

- Transformations – Transform requests and responses on the fly

- Logging – Stream request and response data to logging solutions

- Deployment – Run Kong on a platform of choice which is not officially supported by Kong

However, not all OSS plugins are listed in Kong Hub: developers can apply to have theirs added, but it’s not a must. You can find more on LuaRocks.org or GitHub.

Last but not least, you can write your own Kong plugins in Lua that will run on NGINX. Interested in learning more? They have a plugin development guide.

The beauty of this ecosystem is that it’s open and everybody can contribute to it. As an example, I’ve contributed instructions on how to deploy Kong on Microsoft Azure Container Instances, but we’ll talk more about this in another article.

Aside from that, all Kong plugins are OSS, so you can browse them or even contribute to them.

It’s good to keep in mind that the more advanced official plugins are only available for Kong Enterprise. However, I think it makes sense given they are only applicable for big enterprises and also because there is no such thing as a free lunch.

Personally, it feels like this is part of the heart and soul of Kong which I very much like. They provide a lightweight gateway for you and you add the features that you need; either provided by Kong, the community or self-written. It’s all possible!

Exploring Kong Enterprise

Kong Enterprise takes API management a step further and comes with all the tooling that you need to manage a full-blown infrastructure. If you want to run Kong at scale, you’ll need Kong Enterprise!

Running large infrastructure means that it needs to be well-managed – this should be achieved by scripting as much as possible which Kong supports very well by exposing REST APIs.

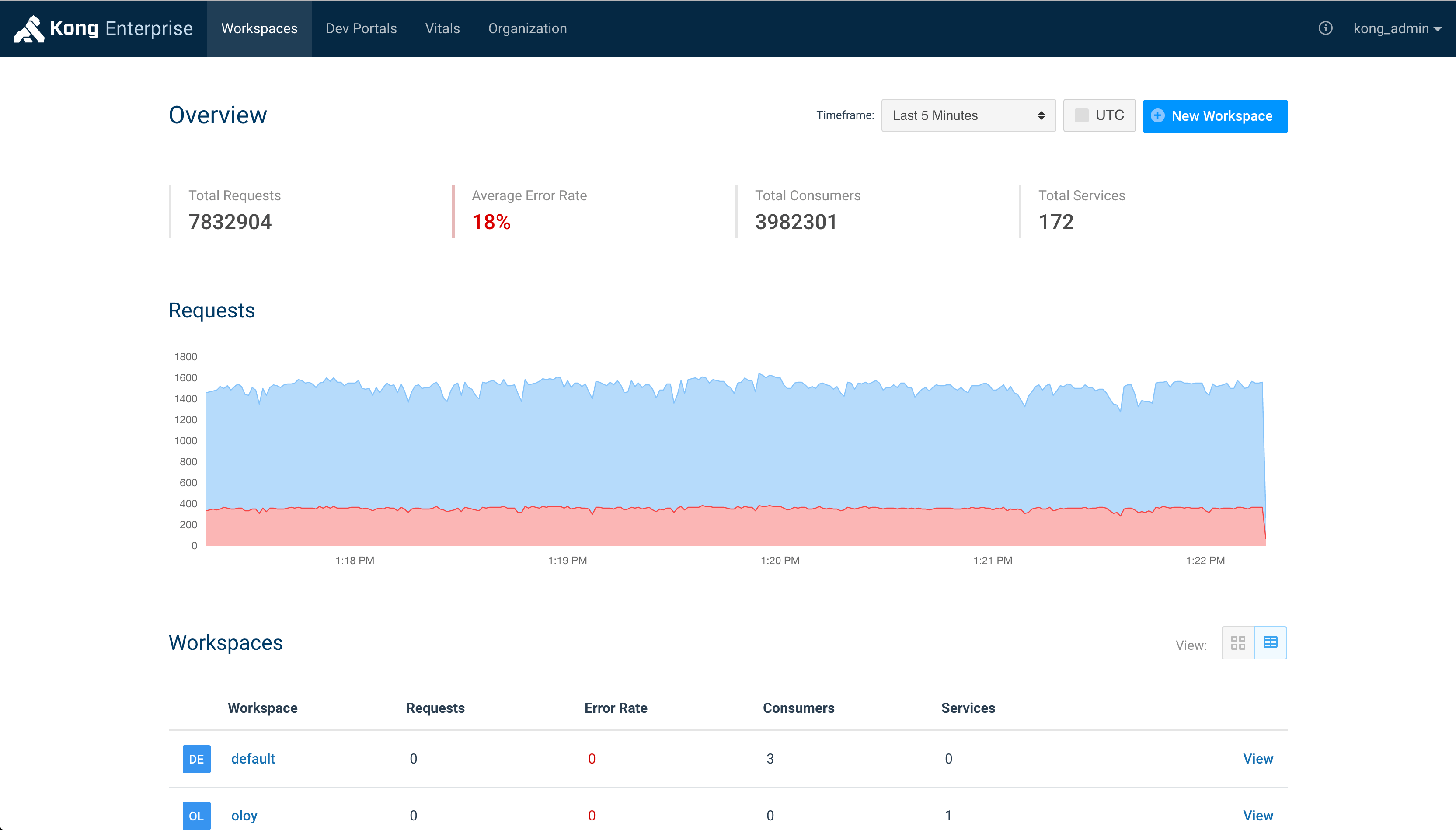

However, sometimes it can be very handy to have a GUI that easily displays all the information that you need – this is what Kong Manager brings to the table!

It gives you a management portal where you can manage your Kong cluster, plugins, APIs, consumers and more!

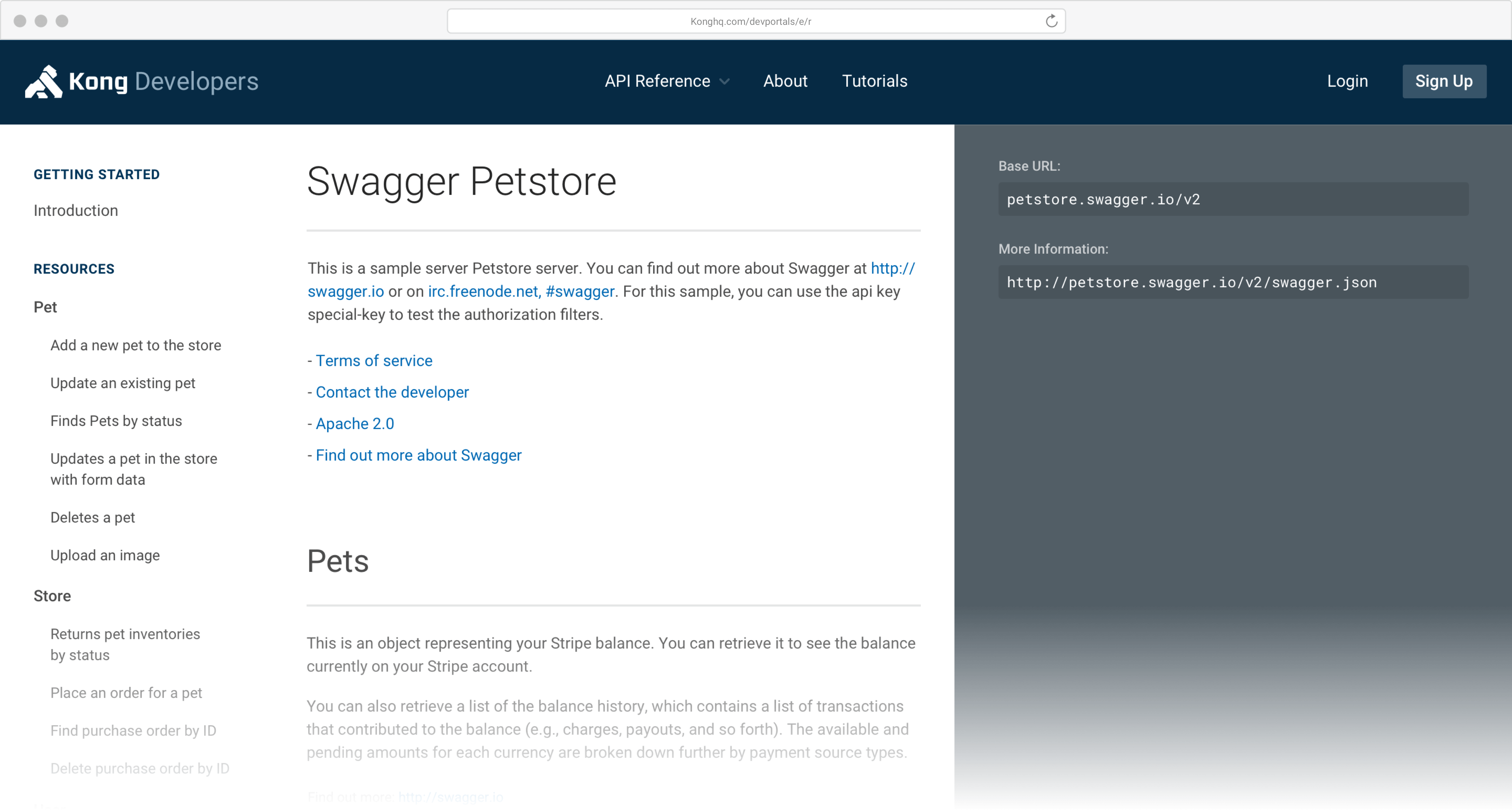

While Kong does not provide a developer portal, Kong Enterprise brings you Kong Dev Portal, the successor of Gelato, which will be the one-stop shop for all consumers of your APIs.

The portal provides an interactive API documentation experience where developers can browse your APIs and interact with them. It also provides developer management tooling where you can onboard new people.

Now that we can manage our APIs and our consumers can explore our API catalog, we are only at the beginning: we also need to operate our platform and gain insights into how our APIs and infrastructure are doing.

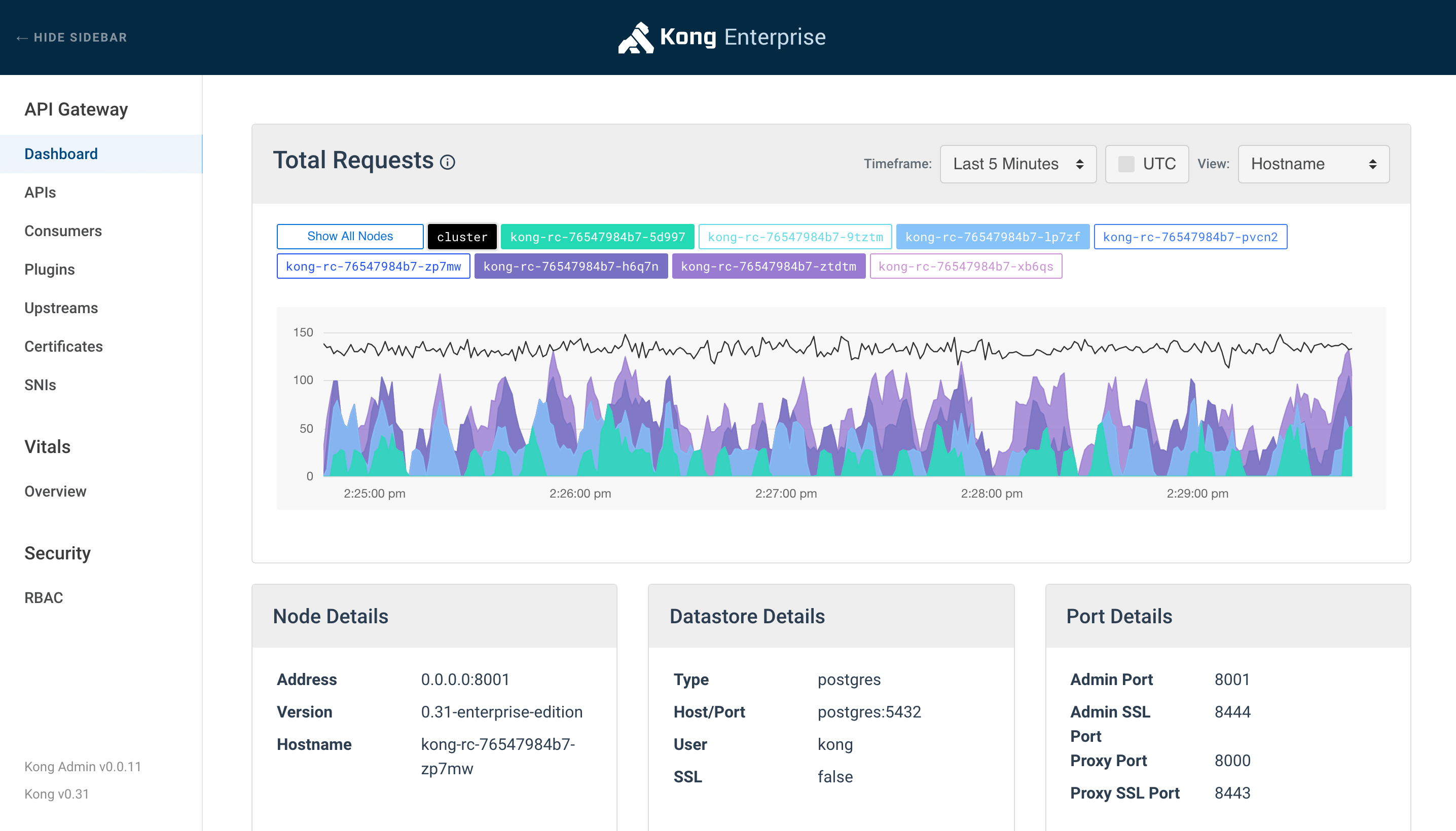

With Kong Vitals we can monitor and visualize our APIs in real-time and learn how our consumers are using our APIs.

By using Vitals we can also see how our infrastructure is running, for example, how is our cache doing? What is the health of our cluster? And so on.

Having APIs for our customers is important, but having APIs that are up and running is even more important.

But that’s not all, it also provides additional benefits:

- Security – Support for Role-based Access Control (RBAC), OpenID Connect and OAuth 2.0 Introspection.

- High Availability – Edge caching, canary deployments and more advanced rate limiting & transformations.

- Plugins – Use any of the plugins, including Enterprise-grade, that are available or build your own with Lua.

- Kong Experts from the Customer Success Team or Solution Architects help your teams implement your solution on Kong.

- 24/7 Support by a dedicated support team.

Kong Cloud, a managed Kong offering

With both Kong and Kong Enterprise, it is your responsibility to run, monitor and scale your Kong instances, giving you full control over what happens and where you want to run it. While that is great for some customers, it’s always nice to be able to delegate that “burden” to somebody else and focus on what is really important – your application.

Kong Cloud is a new managed offering of Kong Enterprise where Kong HQ runs, operates and scales your Kong nodes on a cloud platform of choice – either Microsoft Azure, AWS or Google Cloud.

This allows you to run the Kong nodes as close as possible to your application without the operational overhead. Kong HQ will also make sure that you are always running the latest and greatest so that you can benefit from new features.

Kong Cloud is a great way to kickstart your applications and see if Kong Enterprise is really what you need, without having to learn how to run it first. Once you are familiar with Kong, you can still decide if you want Kong HQ to run everything for you or if you want to have full control and ownership.

However, Kong Cloud is not cheap. Not only is it backed by Kong Enterprise, but operating Kong is not free either, so Kong HQ needs to be paid for that.

Frankly, the sweet spot for Kong Cloud would be either consumption-based or on a per-node basis. This would make it easier for new people to give it a spin, at a low cost. If they decide to proceed with it, they can still scale out and pay more.

Another interesting aspect would be to have the capability to choose which Kong type we want to run. For some customers, Kong Enterprise is a must; for others it might be overkill, yet they could still benefit from the managed aspect.

Integration with Azure

As a Microsoft Azure shop, we’re always interested to know how new technologies integrate with Azure, if they integrate at all.

In the case of Kong, you can very easily trigger Azure Functions from within Kong by using the Invoke an Azure Function plug-in.

Great, but I want more!

It would be great if there were more plug-ins in the future that allow us to integrate more with Azure.

Examples of potential additional extension scenarios are:

- Invoke an Azure Logic App

- Invoke an Azure Data Factory pipeline

- Write telemetry to Azure Application Insights

- Emit Azure Event Grid events about your process

Azure Event Grid integration would allow us to emit events for scenarios where we don’t have control over our downstream services and yet need to provide extension points. This can be because it’s owned by a different team/company, or simply because it’s a legacy system which we expose to other consumers.

Kong is more than an API gateway

As Kong evolved over time it became more than just a lightweight API gateway. With the Kong Service Control Platform, they provide all the tooling we’ve discussed around the core with Kong Enterprise.

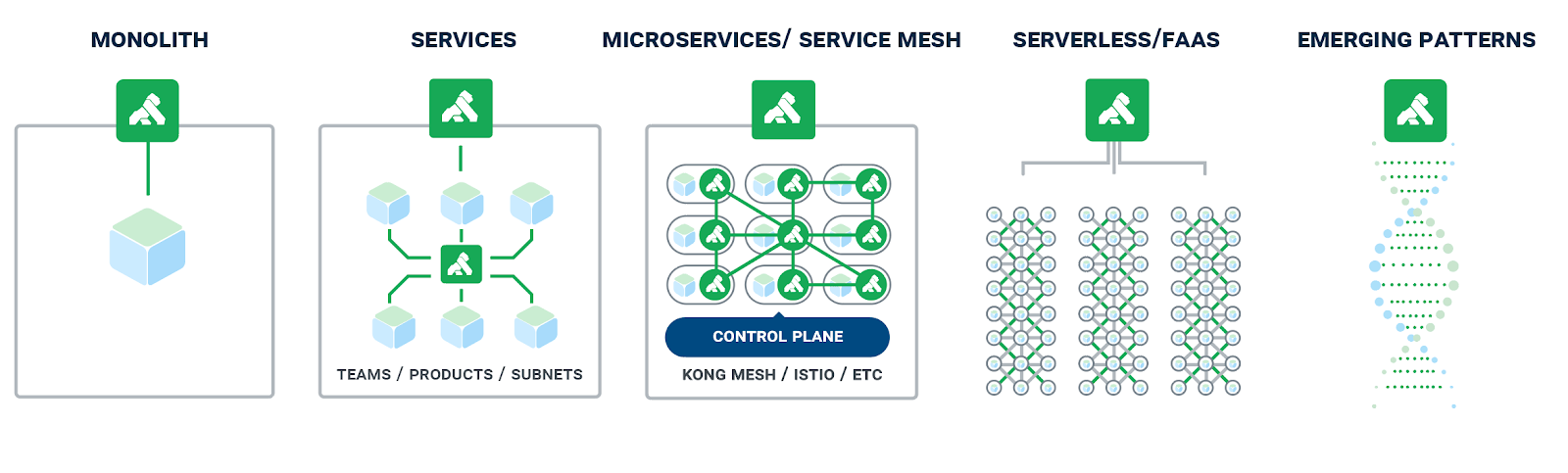

Aside from that, it’s starting to support more architecture flavors for your applications:

Starting with Kong 1.0 you can now also use it as a service mesh by using it as a sidecar proxy.

This not only gives you the traditional Service Mesh capabilities that you might be looking for, but it also allows you to re-use the technology you already know.

They are building a whole ecosystem around the product which makes it really interesting to invest time and effort in using this product.

Pricing

That’s great, but how much does it cost?!

- Kong (Community) is free of charge which is great because this allows you to use it in all environments or even locally without any cost!

- Kong Enterprise, however, is a different story. It comes with a big set of features and tooling around the free version so I expected this to be pretty pricey. It uses a pricing model that is based on the number of Kong users/administrators rather than consumers, with a very high API call limit (which you will probably never hit).

Unfortunately, I was not able to get an exact number of how much it costs unless I signed an NDA, which makes me think it’s pretty damn expensive.

The only indication that I’ve received is that pricing begins in the high five figures (USD). I’m guessing it will be around $75 000+/year ($6 250/month) which is not cheap.

There is more coming soon!

We’ve seen a lot of great stuff that Kong currently provides, but they are working on some very interesting stuff.

- Kong Brain (Private Beta) – Use advanced machine learning and artificial intelligence (AI) to automate tasks, map connections, increase governance and fortify resiliency without human intervention. (product page)

- Kong Immunity (Private Beta) – Use advanced machine learning and artificial intelligence (AI) to run adaptive security which will respond immediately for you by proactively identifying anomalous traffic before threats propagate. (product page)

As there is not much information available about these new products, I had to use the product page examples to extrapolate this information.

There is no information about the timelines for these products, nor if they will be part of Kong or Kong Enterprise only.

Feedback is an opportunity to improve

No product is perfect and that’s fair.

Up until now I haven’t had any field experience with Kong, except for some simple prototyping, but I already have some improvement requests:

No support for OpenAPI specifications for Kong Community – As far as I’ve seen, Kong doesn’t provide support for importing OpenAPI specifications or exposing them to consumers. This is a fundamental problem, as well-documented APIs are crucial: the specification acts as living documentation that guides your consumers through your API landscape and sets expectations on how to use your APIs and what to expect. That said, Kong Enterprise does support this: you can import your specifications and let it generate all the required route/service definitions.

Luckily the community jumped in by providing the Kong Spec Expose plug-in to expose OpenAPI Specs and raised a feature request for OpenAPI import.

Improved high-level documentation – The API documentation is very thorough and provides simple guides to get started which is awesome! However, I love schematics as they help you understand how things work pretty quickly, but unfortunately, those are not really present.

I’ve found it’s a bit hard to get an understanding of the high-level components that are part of Kong and how they work together. A simple example of this is how Kong handles request routing towards my upstream APIs. After a while, I found that this is documented in the Proxy reference docs, which makes sense in hindsight.

Nonetheless, this could’ve been documented in a Kong Concepts page that explains all the core concepts of Kong and how they work together, such as what an API is and how it relates to your upstream APIs, how the users and applications relate to APIs, etc. Unfortunately, this does not exist.

By improving this documentation, it will be easier for new people to onboard and set clear expectations of what it offers but also what it doesn’t offer.

Lack of PaaS offering – You can very easily get started and run Kong yourself, but I prefer to manage as little as possible. Hopefully, Kong Cloud will help us tackle this.

No official Azure templates – The Azure marketplace currently provides two templates by Bitnami (Kong Certified by Bitnami and Kong Cluster) that provision a Kong cluster. Nevertheless, it would be great to have some official Kong templates that provide the capability to use either VMs or alternatives such as Azure Container Instances.

Conclusion

Kong has caught my interest and for a good reason – they have a nice product which you can run in a variety of scenarios such as an API gateway or service mesh and it is easy to install.

This is also one of its biggest advantages – just spin up a container and you’re good to go; be it locally, on-premises or in the cloud! However, I’m also looking forward to seeing Kong Cloud go live so that I can hand over the responsibility of running everything to Kong, while still being able to run it locally myself.

Over the past year, I’ve seen Kong grow from a small OSS technology that provided an API gateway into a product that keeps expanding in terms of scenarios, offerings, and innovation.

I think there is more coming and I’ll certainly keep an eye out! But are they really an upcoming leader in the API ecosystem? We’ll have to see, but they are well on track to becoming one.

One thing is clear: the API Management/Gateway business is booming, and other players such as Azure API Management & NGINX are competing with Kong.

In my next post, we’ll have a closer look at how we can run Kong in Microsoft Azure and how we can expose our services via the gateway.

Wondering about a specific topic that I didn’t cover? Feel free to write a comment.

Thanks for reading,

Tom.
