Through Azure IoT Edge, you can collect a large amount of data from industrial assets without paying high cloud costs for data ingestion by leveraging Edge storage. The bulk of the data that you collect from these assets doesn’t have a “real-time” requirement to end up in the cloud for processing. At the same time, some data points will need to be sent to the cloud in “real-time”, which is still possible using IoT Edge. Azure IoT Edge also provides the flexibility to perform analysis in real time at the Edge, sending alarms and alerts in real time while the large quantities of machine data are uploaded at a more relaxed pace using Edge storage.

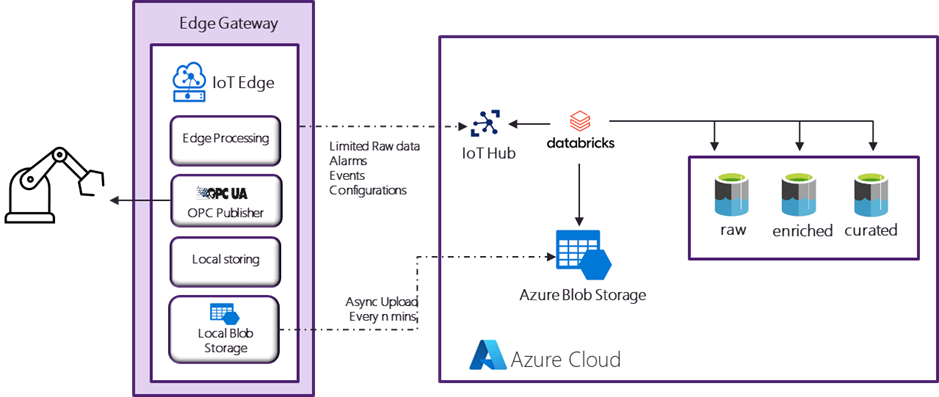

The solution consists of Azure Blob storage deployed as a Module on top of IoT Edge. This Module allows you to temporarily store the data locally at the Edge and synchronize it with a Blob storage account in the cloud without paying for a real-time data transfer. This results in the following architecture:

Component overview:

- OPC Publisher: makes the connection with the industrial assets over OPC UA. The list of tags (sensors) that need to be monitored/collected is stored in a configuration file linked to the module.

- Local Blob Storage: temporarily stores all machine data locally on the Edge using Blob storage. This local Blob storage module can automatically upload the data to a cloud Blob storage account, with configurable settings such as automatic deletion, retaining data until it has been uploaded successfully, a delay time before deletion, etc. This enables you to build a robust solution without manual or additional logic to keep the Edge clean of historical data.

- Local storing: storing the data in local Blob storage requires the use of the Blob storage API, in the same way as for a cloud Blob storage account. At the moment, it is not possible to use Edge hub routes to ingest data directly into the local Blob storage. Consequently, this module has an input route from the OPC Publisher and uses the APIs (Blob Storage client) to insert the data into the Blob storage.

- Edge processing: if you still require Edge processing to gain insight into the data in real time, you can deploy additional modules on top of IoT Edge. You can also select specific data tags that are still sent to the cloud in real time, but only if the business case requires it.
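As an illustration of the tag configuration file mentioned for the OPC Publisher, the sketch below builds such a list in Python. The field names (`EndpointUrl`, `UseSecurity`, `OpcNodes`, `Id`, `OpcSamplingInterval`, `OpcPublishingInterval`) follow the published-nodes JSON format of OPC Publisher as we understand it. The endpoint URL and node IDs are placeholders, and the helper name `build_published_nodes` is hypothetical, so verify everything against the module version you deploy.

```python
import json


def build_published_nodes(endpoint_url, node_ids, interval_ms=1000):
    """Return the tag list for one OPC UA server as a JSON-serializable structure."""
    return [
        {
            "EndpointUrl": endpoint_url,
            "UseSecurity": False,  # assumption: unsecured endpoint, for a demo only
            "OpcNodes": [
                {
                    "Id": node_id,
                    "OpcSamplingInterval": interval_ms,
                    "OpcPublishingInterval": interval_ms,
                }
                for node_id in node_ids
            ],
        }
    ]


if __name__ == "__main__":
    # Placeholder server address and tags; replace with your own.
    config = build_published_nodes(
        "opc.tcp://plc.example.local:4840",
        ["ns=2;s=Line1.Temperature", "ns=2;s=Line1.Pressure"],
    )
    print(json.dumps(config, indent=2))
```

The printed JSON can be saved as the configuration file that is mounted into the OPC Publisher module.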

Setting up this solution requires the following steps:

1. Create an IoT Hub and register an IoT Edge device in it (the unique ingestion end-point for the device).

2. Install IoT Edge on a suitable host device and configure it with the connection string of the IoT Edge device you created in IoT Hub.

3. Create the Local storing module according to your requirements.

The following example reads all the data from the OPC Publisher and stores it in one file per minute in the local Blob storage. Depending on your needs, you can perform some processing before storing the data, write files that cover longer time ranges (depending on the amount of data to store), etc:
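A minimal sketch of such a module in Python is given here; it is our illustration under stated assumptions, not the original module code. `MinuteBuffer`, `minute_blob_name`, `upload_files`, and `run` are hypothetical names. The Azure calls assume the `azure-iot-device` and `azure-storage-blob` packages, that the target container already exists, and that `connection_string` points at the local Blob storage module. Depending on the SDK version, the Blob client may also need an older service API version pinned to talk to the local module.

```python
import json
import threading
from collections import defaultdict
from datetime import datetime, timezone


def minute_blob_name(ts):
    """Blob name for the UTC minute containing ts, e.g. 2024/01/31/13-05.json."""
    return ts.astimezone(timezone.utc).strftime("%Y/%m/%d/%H-%M.json")


class MinuteBuffer:
    """Groups incoming payloads per minute and releases minutes that are over."""

    def __init__(self):
        self._lock = threading.Lock()  # messages arrive on an SDK thread
        self._pending = defaultdict(list)

    def add(self, payload, received_at):
        with self._lock:
            self._pending[minute_blob_name(received_at)].append(payload)

    def pop_completed(self, now):
        """Return {blob_name: bytes} for every minute before the current one."""
        current = minute_blob_name(now)
        with self._lock:
            # Zero-padded names sort chronologically, so "<" means "earlier".
            done = [name for name in self._pending if name < current]
            return {
                name: json.dumps(self._pending.pop(name)).encode("utf-8")
                for name in done
            }


def upload_files(files, connection_string, container):
    """Write each file to Blob storage (assumes the container exists)."""
    from azure.storage.blob import BlobServiceClient  # pip install azure-storage-blob

    service = BlobServiceClient.from_connection_string(connection_string)
    for name, data in files.items():
        blob = service.get_blob_client(container=container, blob=name)
        blob.upload_blob(data, overwrite=True)


def run(connection_string, container, poll_seconds=5):
    """Receive routed messages and flush completed minutes to local Blob storage."""
    import time
    from azure.iot.device import IoTHubModuleClient  # pip install azure-iot-device

    buffer = MinuteBuffer()
    client = IoTHubModuleClient.create_from_edge_environment()

    def on_message(message):
        buffer.add(json.loads(message.data), datetime.now(timezone.utc))

    client.on_message_received = on_message
    client.connect()
    while True:
        time.sleep(poll_seconds)
        now = datetime.now(timezone.utc)
        upload_files(buffer.pop_completed(now), connection_string, container)
```

This sketch drops a minute of data if an upload fails after the buffer has been emptied; a production module would add retries or write to disk first.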

4. Create an IoT Edge deployment script that includes the OPC Publisher and Blob storage modules, both provided by Microsoft, and your custom-developed module that stores the data.

- You will need to insert the credentials for the container registry that hosts your custom module.

- You will need to create a storage key (a 64-byte base64 key) for your local Blob storage (https://generate.plus/en/base64).

- Set up the routes within IoT Edge.

- Set up the connection string for the cloud storage account, so files are automatically synced to the cloud.
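The settings in the list above can be sketched in Python as follows. The storage key can be generated locally instead of with an online tool. The property names (`deviceToCloudUploadProperties`, `deviceAutoDeleteProperties`, and their fields) are the desired properties of the Blob storage module as we understand them; the module names `opcpublisher` and `localstoring`, the input name `input1`, the retention of 60 minutes, and the helper function names are assumptions for illustration.

```python
import base64
import json
import secrets


def generate_storage_key():
    """Return 64 random bytes encoded as base64, the format the storage key needs."""
    return base64.b64encode(secrets.token_bytes(64)).decode("ascii")


def blob_module_desired_properties(cloud_connection_string, container):
    """Desired properties that sync one local container to the cloud account."""
    return {
        "deviceToCloudUploadProperties": {
            "uploadOn": True,
            "uploadOrder": "OldestFirst",
            "cloudStorageConnectionString": cloud_connection_string,
            "storageContainersForUpload": {container: {"target": container}},
            "deleteAfterUpload": False,
        },
        "deviceAutoDeleteProperties": {
            "deleteOn": True,
            "deleteAfterMinutes": 60,      # assumption: keep one hour locally
            "retainWhileUploading": True,  # never delete a blob mid-upload
        },
    }


def route_to_storing_module(publisher="opcpublisher", storing="localstoring",
                            input_name="input1"):
    """Edge hub route that forwards OPC Publisher messages to the storing module."""
    return (
        f"FROM /messages/modules/{publisher}/* "
        f'INTO BrokeredEndpoint("/modules/{storing}/inputs/{input_name}")'
    )


if __name__ == "__main__":
    print(generate_storage_key())
    print(route_to_storing_module())
    print(json.dumps(blob_module_desired_properties("<cloud connection string>",
                                                    "machinedata"), indent=2))
```

The generated key is the value you would set as the account key of the local Blob storage module, and the same key goes into the connection string used by the storing module.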

5. Configure the OPC Publisher to receive data from an OPC UA server.

6. Deploy the IoT Edge script onto the Edge device.

7. Confirm that the data is synchronized with the cloud storage account.
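One way to confirm the synchronization in the last step is to list the blobs in the cloud container and check that new files keep arriving. The sketch below assumes the `azure-storage-blob` package; `summarize_blobs` and `list_cloud_blobs` are hypothetical helper names, and the sample data in the demo is made up.

```python
from datetime import datetime, timezone


def summarize_blobs(blobs):
    """blobs: iterable of (name, size_in_bytes, last_modified).

    Returns (file_count, total_megabytes, most_recent_upload_time).
    """
    items = list(blobs)
    total_mb = sum(size for _, size, _ in items) / 1_000_000
    newest = max((modified for _, _, modified in items), default=None)
    return len(items), total_mb, newest


def list_cloud_blobs(connection_string, container):
    """Fetch (name, size, last_modified) for every blob in the cloud container."""
    from azure.storage.blob import ContainerClient  # pip install azure-storage-blob

    client = ContainerClient.from_connection_string(
        connection_string, container_name=container
    )
    return [(b.name, b.size, b.last_modified) for b in client.list_blobs()]


if __name__ == "__main__":
    # Made-up sample listing; in practice pass list_cloud_blobs(...) instead.
    sample = [
        ("2024/01/01/10-00.json", 12_000_000,
         datetime(2024, 1, 1, 10, 1, tzinfo=timezone.utc)),
        ("2024/01/01/10-01.json", 11_000_000,
         datetime(2024, 1, 1, 10, 2, tzinfo=timezone.utc)),
    ]
    print(summarize_blobs(sample))
```

If the most recent upload time stops advancing while the Edge device is still collecting data, the upload settings or the cloud connection string are the first things to check.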

We ran a demo with this setup in which we collected over 25,000 sensor readings every minute, resulting in files of around 12 MB. The files arrive in the cloud storage account with minimal latency, where they can be picked up for further processing.
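To size the local disk and the cloud storage account, the demo figures can be turned into a rough estimate. This is back-of-the-envelope arithmetic that treats the approximate values above as exact and assumes one file per minute around the clock; `estimate_volume` is a hypothetical helper.

```python
def estimate_volume(readings_per_minute, megabytes_per_file):
    """Return (bytes per reading, gigabytes per day) for one file per minute."""
    bytes_per_reading = megabytes_per_file * 1_000_000 / readings_per_minute
    gigabytes_per_day = megabytes_per_file * 60 * 24 / 1000
    return bytes_per_reading, gigabytes_per_day


if __name__ == "__main__":
    per_reading, per_day = estimate_volume(25_000, 12)
    print(f"{per_reading:.0f} bytes per reading, {per_day:.2f} GB per day")
```

With these inputs the estimate comes to roughly 480 bytes per reading and about 17 GB per day, which helps when choosing how long data is retained at the Edge before it is deleted.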

If you don’t need real-time data transfer but want the flexibility to update your Edge component later to integrate real-time analysis, processing, etc., this solution would be a good fit for your business case. If a limited subset of sensor data is still required in the cloud in real time, you can set up a route through the IoT Hub to receive that data and have the bulk of the sensor data transferred between the storage accounts.

Thanks for reading!
