From Servers to Serverless: BizTalk Migrator

Dan Toomey kicked off the final day. His session was about migrating your BizTalk environment to Azure using the BizTalk Migrator tool.

He started by listing the reasons why you would want to migrate from BizTalk to Azure. According to him, the most important one is to take advantage of the scalability and modern architecture of Azure.

He then compared BizTalk features with the Azure features that can replace them. Most Azure features offer even more possibilities than their BizTalk counterparts (for example, with Azure Service Bus compared to the BizTalk Message Engine, you no longer have to worry about throttling). Only for the BizTalk Rules Engine and Business Activity Monitoring might you need third-party options like InRule or Atomic Scope [or even better: Invictus for Azure].

Next, Dan introduced the BizTalk Migrator tool, but not before stressing that it is not a 'click and done' solution. It gives you a decent starting point for your migration, but you will still need to do a lot of work yourself.

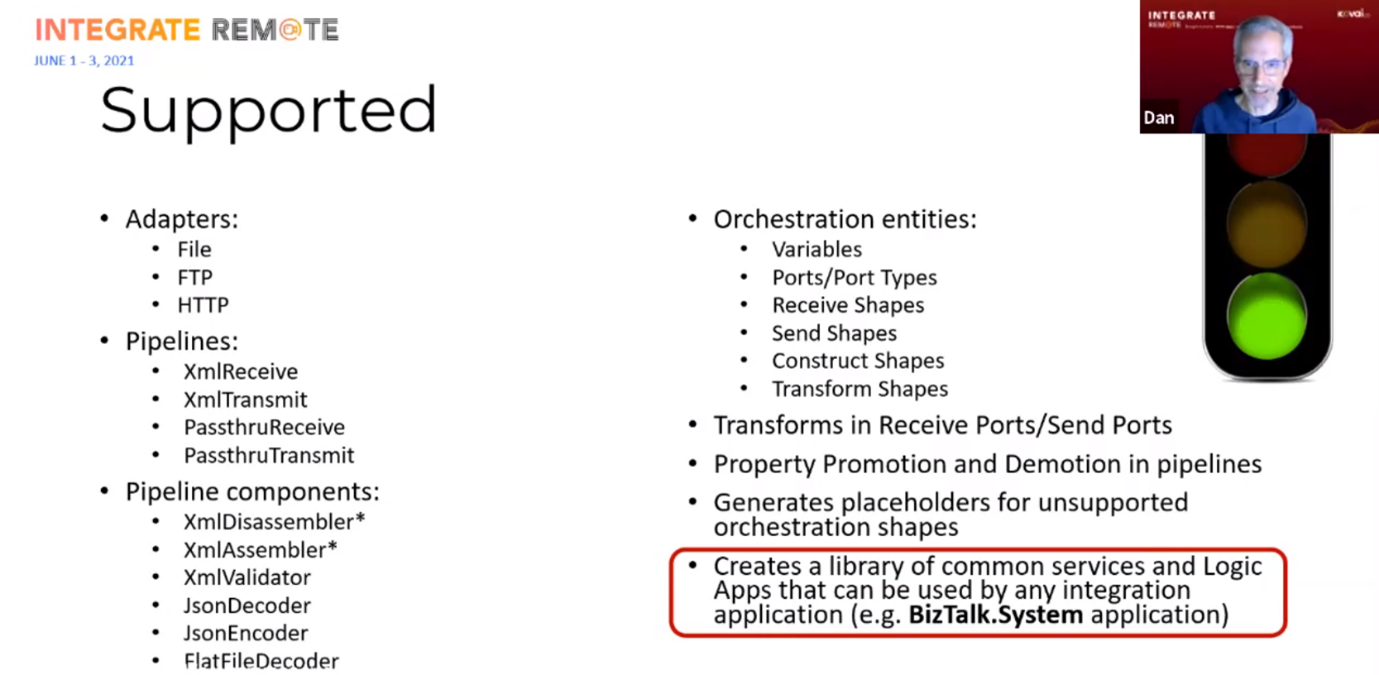

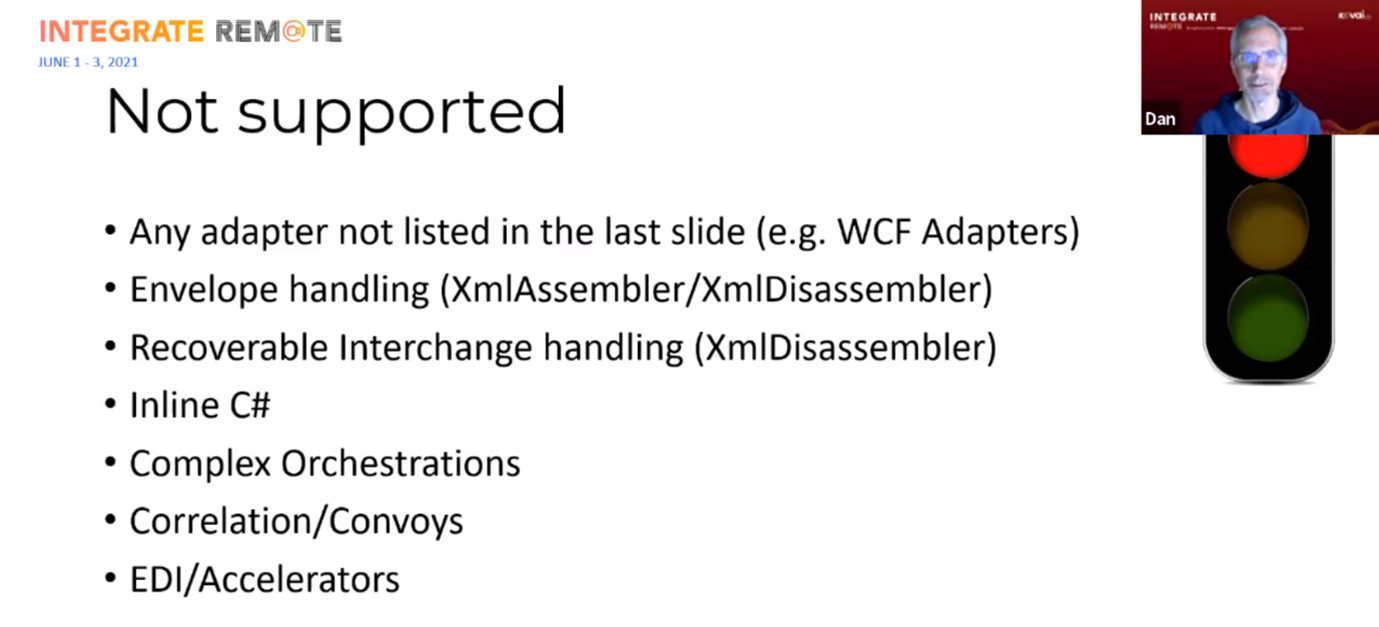

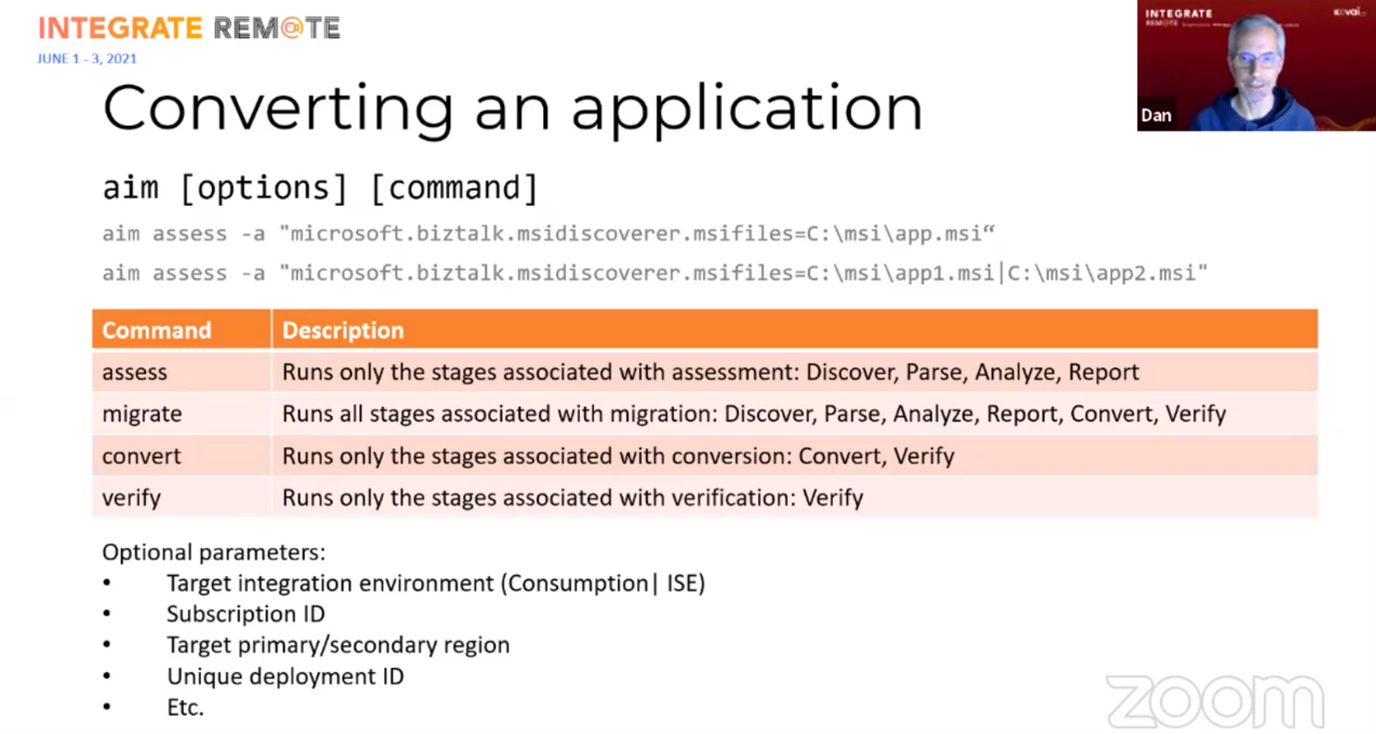

The BizTalk Migrator tool is an open-source command-line utility that reads a BizTalk MSI and generates an extensive report as well as ARM templates for all the artifacts you need to deploy in Azure. Dan showed a list of what is currently supported and what is not. For unsupported features (like an expression shape inside an orchestration), a placeholder is generated. Dan expects more and more features to be supported in the near future.

Dan then explained what you need to install and run the tool (Chocolatey), and showed us how the tool works.

The 'Verify' option isn't implemented yet, though. A complete 'migrate' run of a simple BizTalk application took only 8 seconds!

After the demo, Dan explained the architectural principles behind the tool and how it actually works.

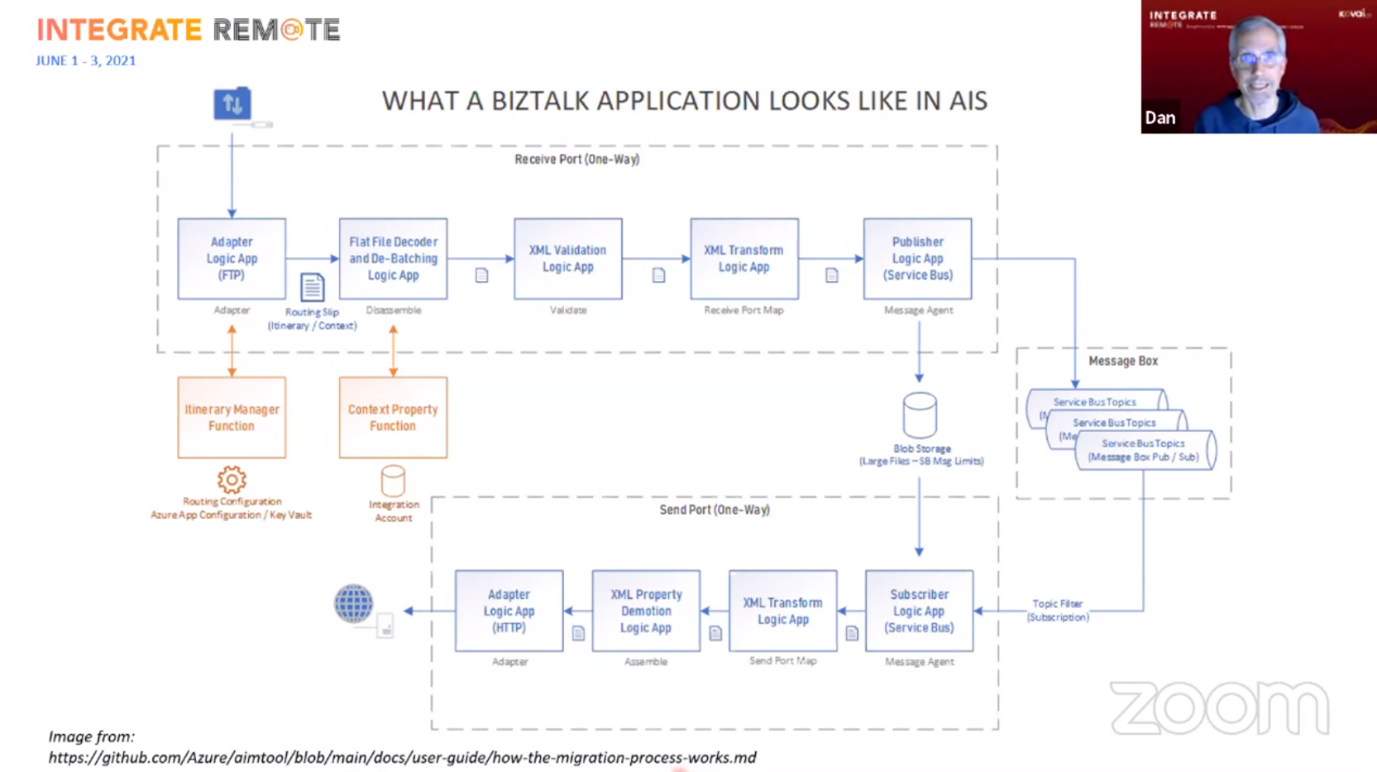

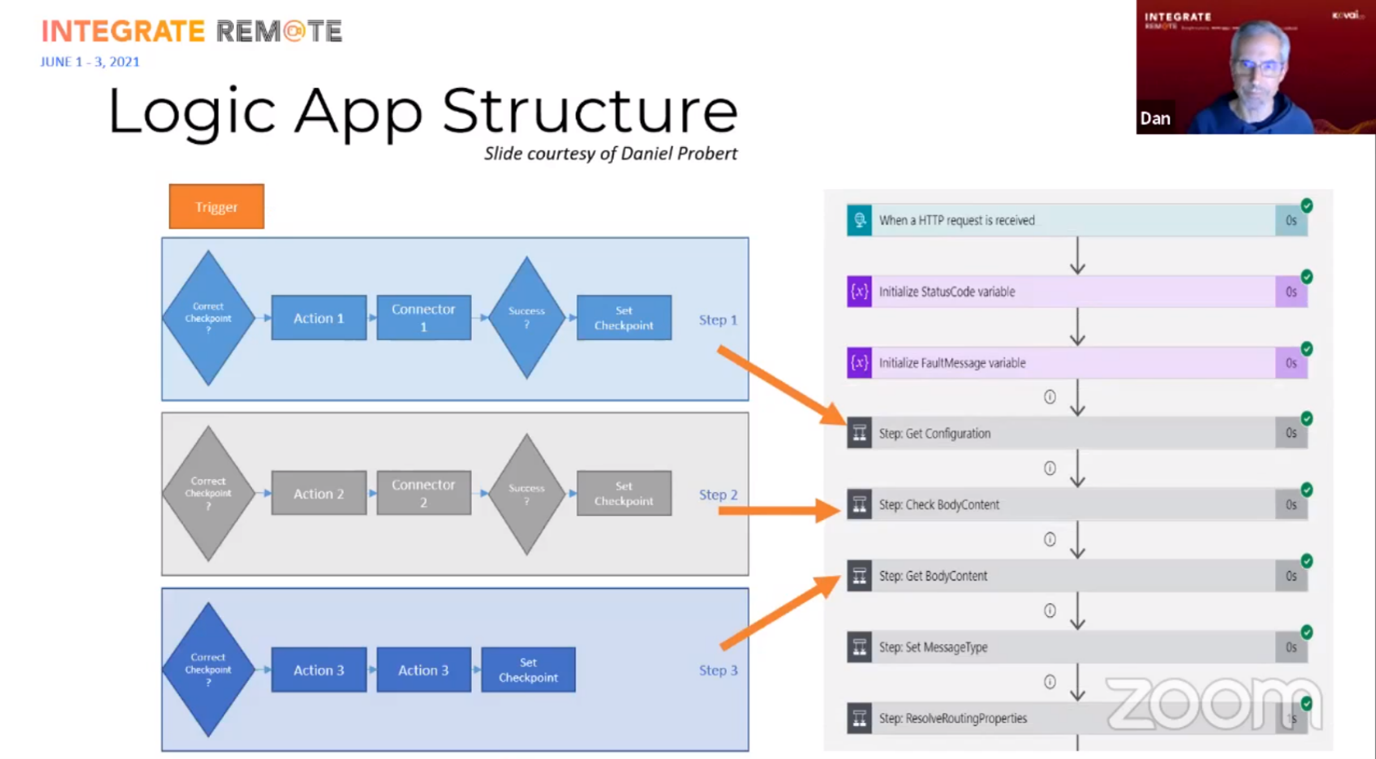

Most BizTalk features (pipelines, orchestrations) will be migrated to a number of Logic Apps using the Routing Slip pattern.
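To make the Routing Slip pattern concrete, here is a minimal Python sketch. It is not the code the Migrator generates: the step names and functions are hypothetical stand-ins for the generated Logic Apps, and in Azure the slip travels between Logic Apps via messaging rather than through in-process function calls. The core idea is that the message carries its own itinerary.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Envelope:
    """A message that carries its own itinerary (the routing slip)."""
    body: str
    slip: List[str]  # names of the remaining steps, in order
    history: List[str] = field(default_factory=list)


# Hypothetical steps standing in for generated Logic Apps (decode, map, send).
def decode(body: str) -> str:
    return body.strip()


def transform(body: str) -> str:
    return body.upper()


def send(body: str) -> str:
    return f"sent:{body}"


STEPS: Dict[str, Callable[[str], str]] = {
    "decode": decode,
    "transform": transform,
    "send": send,
}


def route(envelope: Envelope) -> Envelope:
    """Execute each step named on the slip, removing it from the slip once done."""
    while envelope.slip:
        step_name = envelope.slip.pop(0)
        envelope.body = STEPS[step_name](envelope.body)
        envelope.history.append(step_name)
    return envelope
```

For example, `route(Envelope(body="  order 42 ", slip=["decode", "transform", "send"]))` ends with the body `sent:ORDER 42`. Because the itinerary travels with the message, a different message can carry a different slip (say, skipping `transform`) without any step having to know about the others.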

Dan wrapped up his presentation with a sample scenario, showing which Azure components would be generated from a small BizTalk solution. Finally, he mentioned some problems you might encounter.

His takeaways were that you shouldn't compare BizTalk to Azure, as it's like comparing apples and oranges; that the tool only gives you a jump-start for your migration; and finally that the tool is community-driven, so Dan invites us all to help improve it.

It was a very clear presentation, and Dan gave a very good overview of what the BizTalk Migrator tool can do at present. It gives you a nice starting point for migrating your BizTalk solution to Azure. However, because it has to take so many possibilities into account, it generates an enormous number of Azure artifacts even for a very simple BizTalk solution like the one in Dan's demo, which in my opinion might cause problems from a maintenance point of view.

Advanced integration with Logic Apps

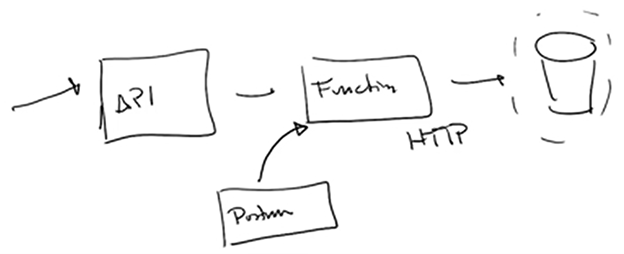

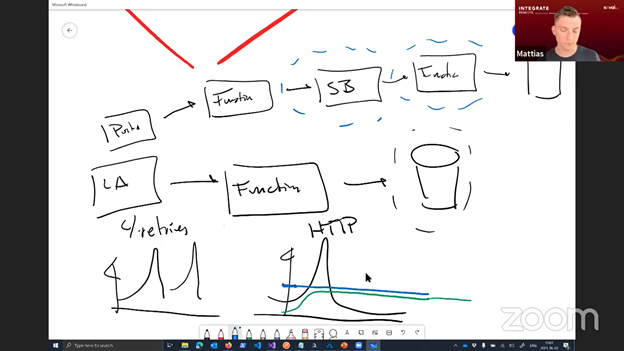

The second session of the day saw Derek Li turning it up to 11 and showing us some of the more advanced things you can do with Logic Apps through demos and tips & tricks. Derek made a cool pre-recorded session with a multi-camera setup, so he could even do a "whiteboard" design session before he kicked off his demos.

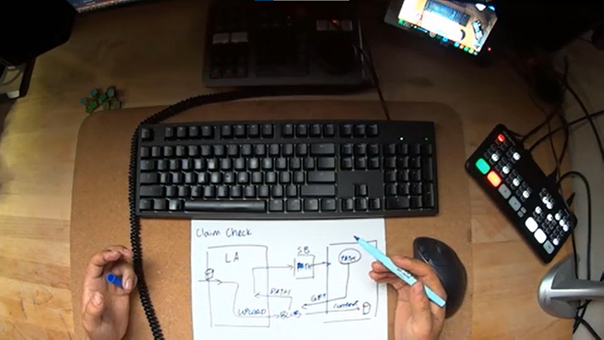

The first pattern he showed was the Claim Check pattern, where he explained how you can use Logic Apps to upload large files to Blob Storage and then only pass the pointer (the blob path) over Service Bus, circumventing the message size limit. A second Logic App receives this path from Service Bus and fetches the file from Blob Storage. This pattern is implemented in the PubSub component of the Invictus Framework for Azure, making it easier for you to get started with the Claim Check.
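The flow Derek described can be sketched in a few lines of Python. This is a minimal illustration, not his Logic Apps: an in-memory dict stands in for Blob Storage and a deque for the Service Bus queue, and the 256 KB threshold is an assumption modelled on the Service Bus Standard tier message size limit.

```python
import uuid
from collections import deque
from typing import Deque, Dict

# In-memory stand-ins for Azure Blob Storage and a Service Bus queue (illustration only).
blob_store: Dict[str, bytes] = {}
queue: Deque[dict] = deque()

MAX_MESSAGE_BYTES = 256 * 1024  # assumed broker message size limit


def send_with_claim_check(payload: bytes) -> None:
    """Producer: park large payloads in blob storage and enqueue only a pointer."""
    if len(payload) <= MAX_MESSAGE_BYTES:
        queue.append({"inline": payload})  # small enough to travel as-is
        return
    blob_path = f"claimchecks/{uuid.uuid4()}"
    blob_store[blob_path] = payload
    queue.append({"blob_path": blob_path})  # the claim check itself


def receive() -> bytes:
    """Consumer: redeem the claim check by fetching the payload from blob storage."""
    message = queue.popleft()
    if "blob_path" in message:
        return blob_store[message["blob_path"]]
    return message["inline"]
```

Only the small pointer ever crosses the broker, so the queue's size limit no longer constrains the payload; deciding when to delete the parked blob is left to the consumer.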

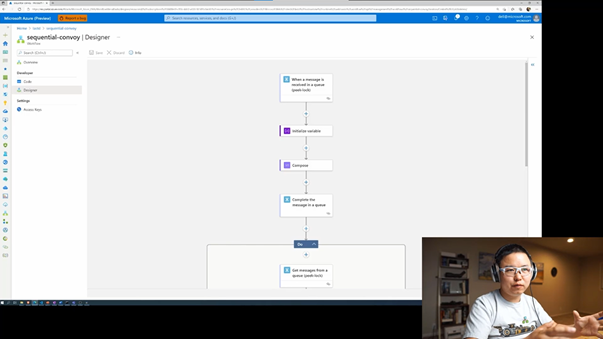

The next messaging pattern was the Sequential Convoy, for which Derek leveraged Service Bus queue sessions and for-each loops to achieve sequential processing. While this is a good solution when receiving messages from Service Bus, it requires sessions and doesn't work with other retrieval methods. The (Time)Sequencer component in the Invictus Framework for Azure solves this for you without being limited to Service Bus queues.
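The idea behind the session-based convoy can be sketched as follows. This is a simplified model, not the Service Bus SDK: `session_id` mirrors the SessionId that related messages share, grouping preserves arrival order within a session, and the inner loop behaves like a for-each with concurrency 1. In the real service, different sessions can be processed in parallel by different receivers; here they simply run one after another.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Message:
    session_id: str  # e.g. an order ID shared by all related messages
    body: str


def receive_sessions(queue: List[Message]) -> Dict[str, List[Message]]:
    """Group messages by session, preserving arrival (FIFO) order within each session."""
    sessions: Dict[str, List[Message]] = defaultdict(list)
    for message in queue:
        sessions[message.session_id].append(message)
    return dict(sessions)


def process_convoy(queue: List[Message], handle: Callable[[str, str], None]) -> None:
    """Handle each session's messages strictly one after another."""
    for session_id, messages in receive_sessions(queue).items():
        # Sequential for-each: a message finishes before the next one in its session starts.
        for message in messages:
            handle(session_id, message.body)
```

Even when messages of different sessions arrive interleaved, each session's messages are handled in the order they were sent.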

Afterwards, he demonstrated how to use ngrok.io to forward webhook callback URLs from the internet to a Logic App instance running on your localhost. This lets you debug your webhook Logic Apps locally while still making them accessible from the internet.

When running multiple Logic Apps or Function Apps locally, you might run into port conflicts. Derek told us that by updating tasks.json you can add a "--port" parameter to the startup command to specify which TCP port to run on.

He ended the session by showing us how to use GitHub Actions to deploy your Logic App Standard infrastructure and workflows automatically to your Azure subscription, including how to parameterise your workflows for environment-specific deployments.

All in all, a nice session with some very useful tips & tricks that really showcased the new experience of developing and testing your Logic Apps locally with the new runtime.

Azure security baseline for integration

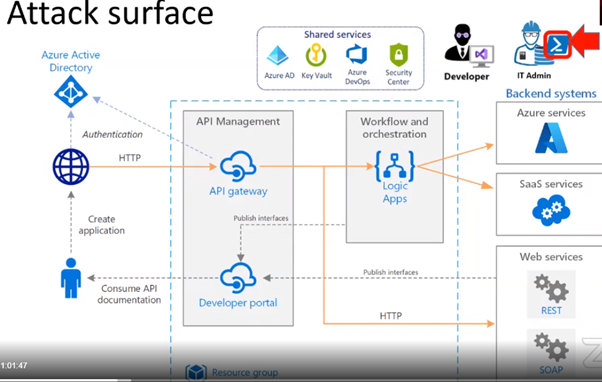

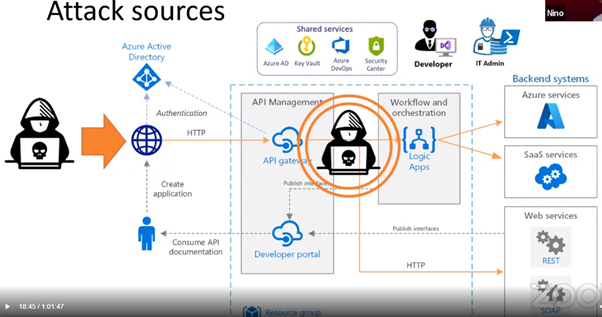

In this session, Nino Crudele highlighted some important factors related to security in the Azure cloud. He focused on the weakest points of Azure and on how hackers can attack a cloud environment and use impersonation to get into it.

Because developers are mainly focused on delivering solutions, security sometimes gets a low priority. Furthermore, cost can be a reason not to approve certain security measures, which can pose a risk to the organization. As a developer, it is important to understand the different kinds of attacks and the security measures that can be taken. Nino mentioned that it can make sense to assign a dedicated person to take care of the security of the platform.

The most used attacks are:

- Social Engineering

- Malware

- Phishing

- Shoulder Surfing

- Intrusion with privilege escalation

The most wanted targets are:

- Humans

- Infrastructure and its design

He demonstrated how an attack can be carried out with PowerShell and how PowerShell can become a weakness.

Attacks can be of two types:

- Internal

- External

External Attack

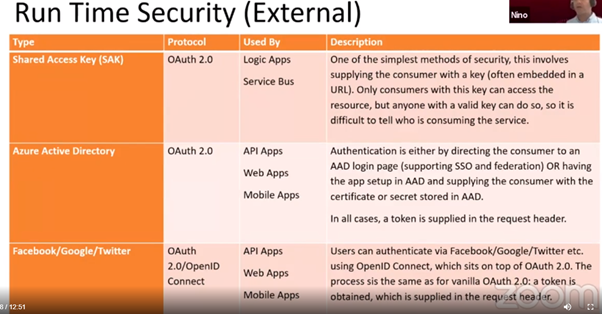

To reduce these attacks, Nino suggested the approaches that are currently available in Azure.

Below are the options using OAuth 2.0 protocols:

For Logic Apps security, Nino suggested:

- Enabling IP filtering for Logic Apps triggers

- Always calling Logic Apps with a Request or HTTP trigger through API Management (APIM)

- Enabling IP filtering for runtime logs

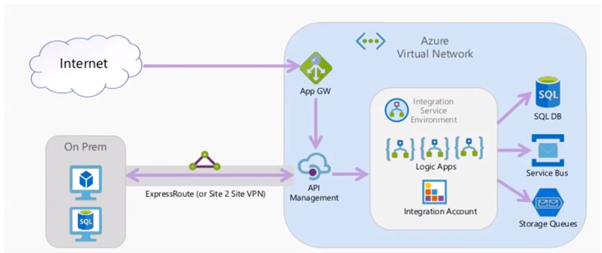
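In Logic Apps, IP filtering is configured declaratively in the workflow's access control settings rather than in code. The Python sketch below only illustrates the check such a filter performs; the CIDR ranges are hypothetical placeholders (for example, the outbound IP of your API Management instance, so that only APIM can call the trigger).

```python
import ipaddress
from typing import Iterable

# Hypothetical allowlist, e.g. the outbound IP of an APIM instance plus an internal range.
ALLOWED_RANGES = ["203.0.113.10/32", "198.51.100.0/24"]


def is_caller_allowed(caller_ip: str, allowed_ranges: Iterable[str]) -> bool:
    """Return True if caller_ip falls inside any allowed CIDR range."""
    address = ipaddress.ip_address(caller_ip)
    # Membership between an IPv4 address and an IPv6 network is simply False.
    return any(address in ipaddress.ip_network(cidr) for cidr in allowed_ranges)
```

A request from `203.0.113.10` passes, while one from `192.0.2.1` is rejected before any workflow logic runs.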

Network Isolation

For organizational use, VNets should be used to isolate applications within a subscription. ExpressRoute or a site-to-site VPN should be used to connect the on-premises network to the Azure virtual network.

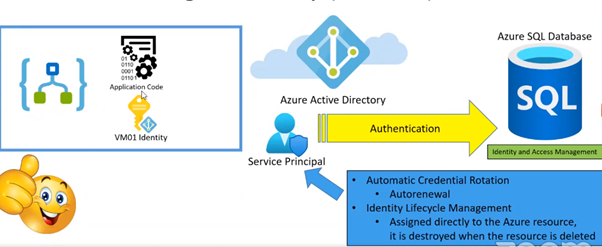

Internal Attack

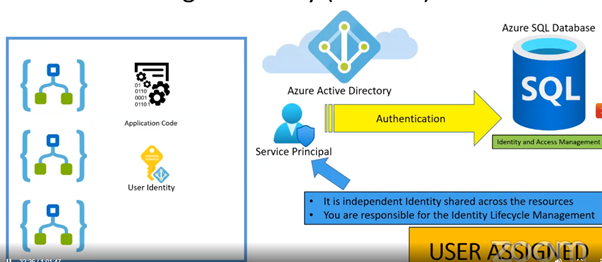

One of the most important measures Nino suggested to protect against internal attacks is Azure Managed Identity.

He suggested that, rather than relying on Azure Key Vault, it is better practice to use Azure Managed Identity, which combines the power of an identity and a key vault. It uses a service principal to authenticate and is considered a top-level security measure. There are two types of managed identity: user-assigned and system-assigned.

- Access can be restricted with the Contributor or Operator roles

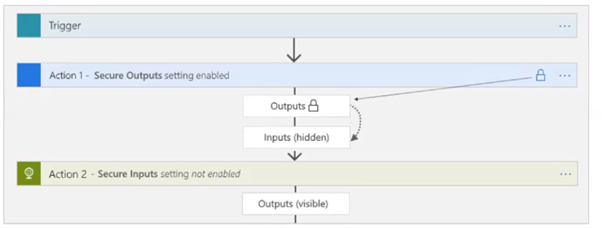

- The input and output design can be modified as shown below:

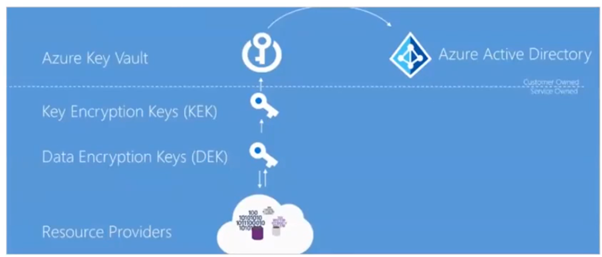

Nino also suggested applying strong policies as a security wall. As a further best practice, he recommended using Azure encryption at rest as a baseline for any integration solution.

Overall, Nino shared a lot of information, best practices and a standard security baseline for integration.

Serverless360 V2 - new capabilities

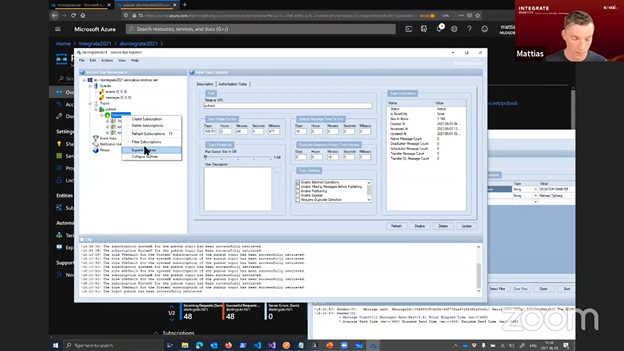

In this session, Saravana Kumar led us through the new features of Serverless360 V2, which has not been released yet.

Why the need for this product?

Many businesses are reluctant to allow support staff access to the Azure portal.

He gave a brief history of the product.

Serverless360 V2 is the revamped ServiceBus360 tool for managing and monitoring Azure messaging resources with a new look and feel.

It has expanded capabilities and supports more Azure resources: currently 28 Azure resource types.

Serverless360 is built to complement the Azure portal. The motivation for creating the product is that the Azure portal is too complicated for most support users.

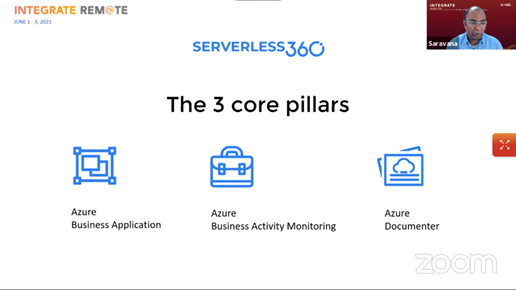

Serverless360 is organized into modules, with the option to add more modules to the platform.

The reorganization of features improves collaboration, ease of use, and logical separation.

It comes with a new dashboard that adds various filtering capabilities, a full-screen mode and support for dark themes.

The new Serverless360 V2 has the following modules –

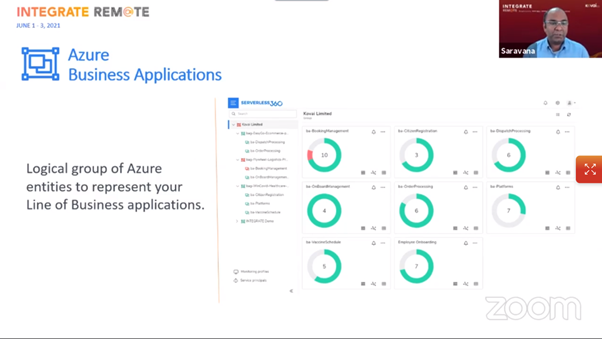

Business Applications – this is where various resources from Azure subscriptions (the same or multiple subscriptions) that represent a Line of Business (LoB) application can be grouped together.

Saravana demonstrated how easy it is to set up a new application, add resources, and configure monitoring. Multiple dashboards are possible, to which widgets and charts can be added.

A very nice feature is the ability to create maps of your business applications and the resources they consist of.

Another very useful feature is Automated Tasks, which can be used, for example, to create clean-up tasks for storage accounts, or to respond to event thresholds such as queue depth or the ageing of data.

It is also possible to auto apply monitoring rules when new resources are added to the business application.

Key benefits –

- Dashboard to visualize application health

- Provide access to users

- Monitor the application

- Tools focussed on support

- Visualize the relationship between entities

- Run automated tasks

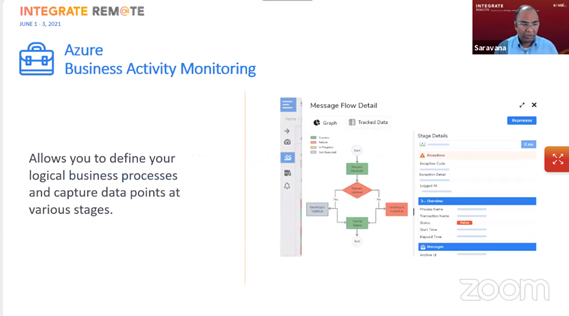

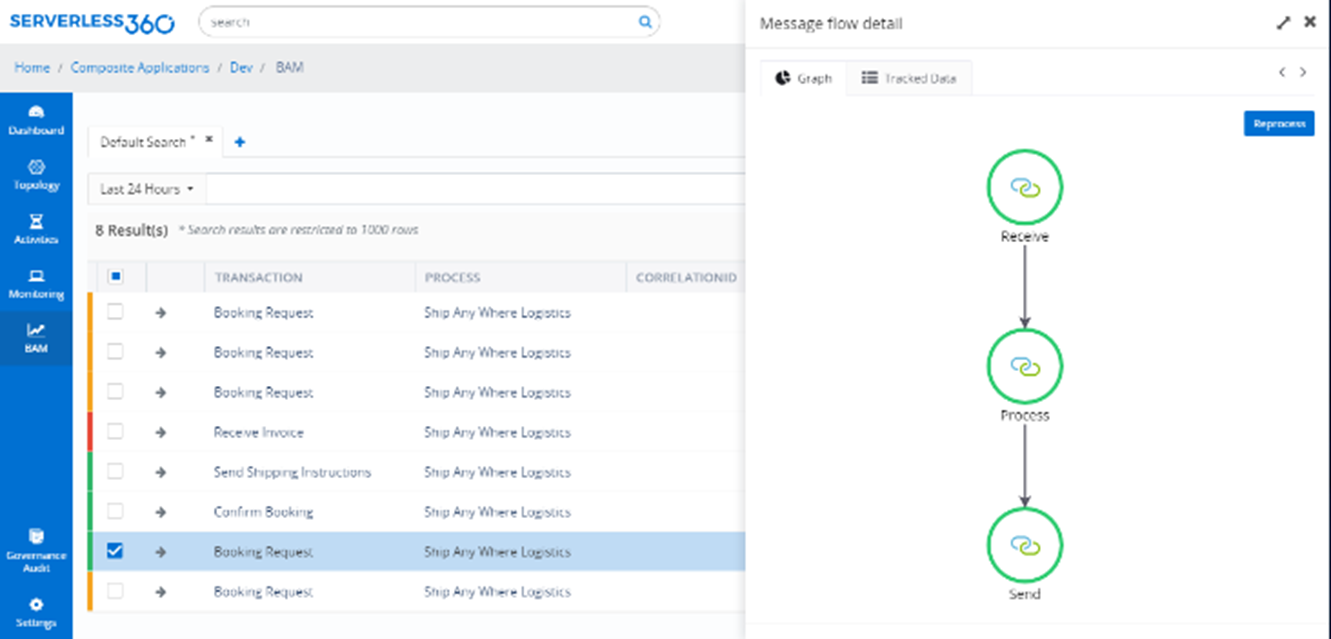

Business Activity Monitoring – this is an end-to-end business activity tracking and monitoring product for cloud scenarios. It allows full visibility of end-to-end business process flows across Azure resources. With the new UI, it is easier to view the complete message flow and to drag and drop artifacts.

Saravana demonstrated how it's possible to create maps of the process flows in the designer GUI, view tracking information, and add custom logging.

There is a rich query capability and there is also resubmit functionality for retrying failed processes.

Key benefits –

- End-to-end message visibility

- Figure out “where did the message go?”

- Reprocess failed business transactions

- Better business insights

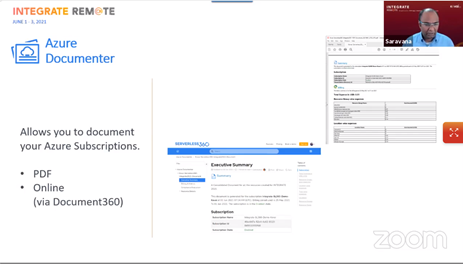

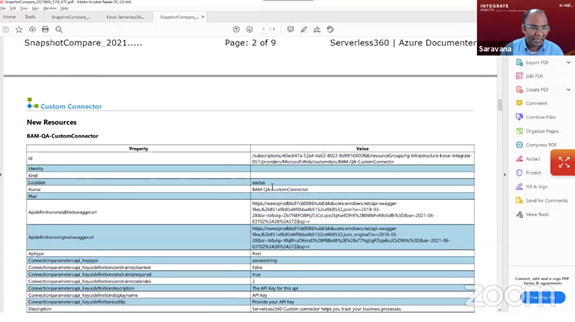

Saravana then talked about Azure Documenter which can generate extensive technical documentation on your Azure Subscription.

The design goal of this feature is to allow a layperson to understand the context of the Azure applications.

This tool generates documentation or reports on your cloud usage. It provides details about resource usage, deployments, types, configuration, SKUs, etc.

Generating and maintaining such documents by hand can be cumbersome, and they are difficult to share with various stakeholders.

Saravana then demonstrated the generation of comprehensive documentation for an application.

The report contained a table of contents, billing metrics, compliance details, resource details, executive summary, etc.

The report allows you to see at a glance if something has been deployed to the wrong region, misconfigured, etc.

There is also a history feature allowing past report snapshots to be viewed.

Key benefits –

- Understand your Azure subscriptions

- Executive reports

- Compliance reports

- Billing reports

- Snapshot compliance

- Change tracking reports (coming soon)

- Spot problems early

In summary, there is much to recommend this new version. It has not been released yet, but it will soon be available in preview, either as a SaaS option or privately hosted.

Comparing App Insights, Log Analytics and BAM

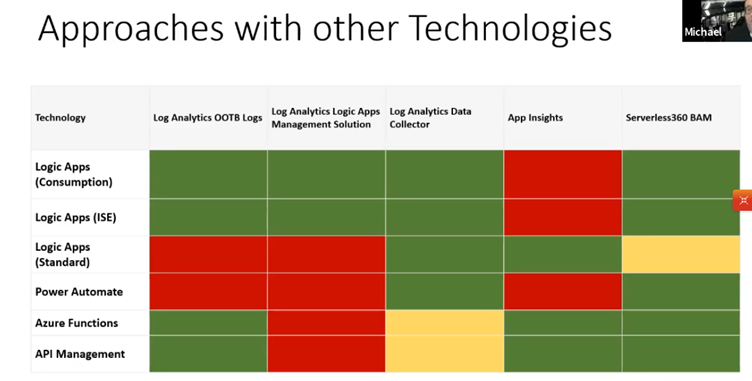

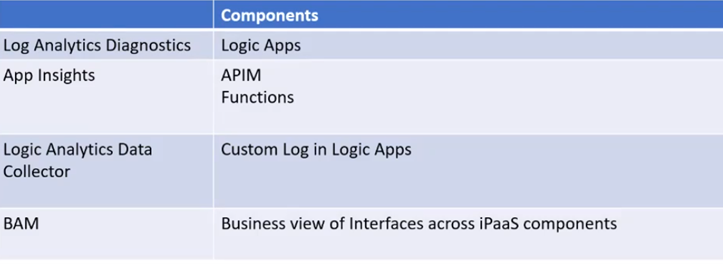

In this session Michael Stephenson discussed with the audience the differences and similarities between Log Analytics Workspace, Application Insights and Serverless360 BAM when using Logic Apps.

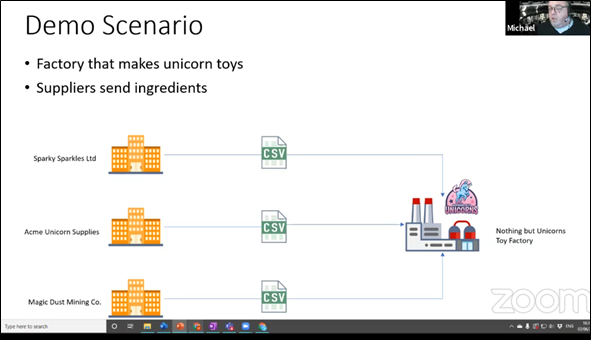

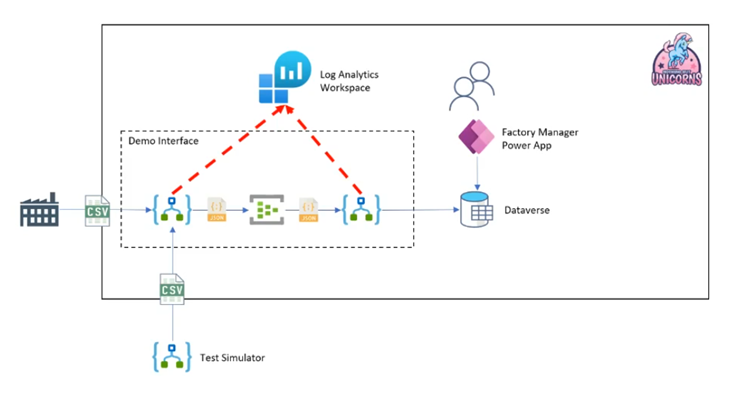

Michael used his famous Unicorn factory as a sample scenario to show the various options for gaining insights out of Logic Apps.

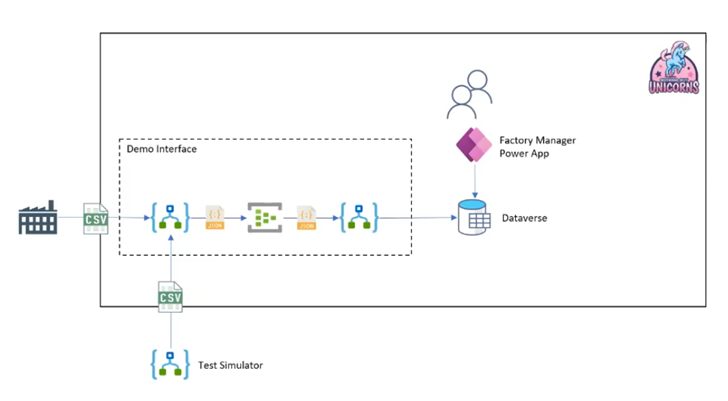

The scenario consisted of two Logic Apps that receive and process a CSV message sent by suppliers.

Michael prepared four variations of this scenario to demonstrate the various monitoring possibilities for Logic Apps.

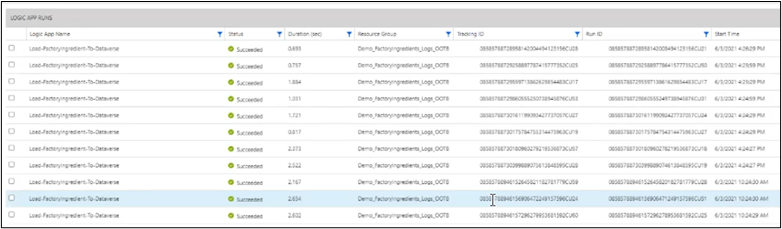

In the first demo, Michael showed the out-of-the-box Log Analytics functionality for Logic Apps.

Because the Logic Apps are connected to the same Log Analytics workspace, the data is centrally available. However, Log Analytics remains very technical, so it is not very useful for non-IT people:

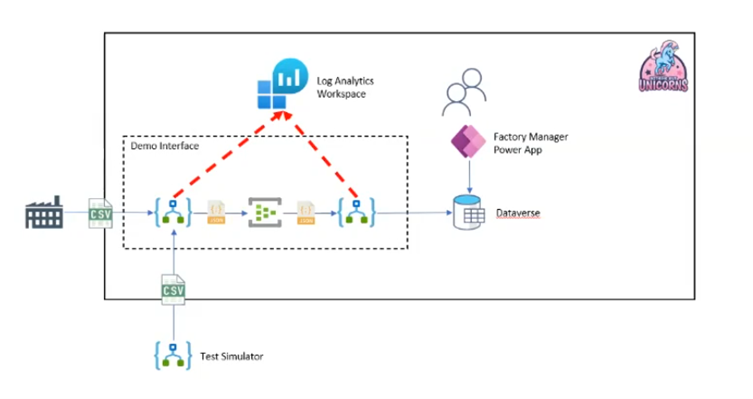

Michael noted that it is also possible to create Workbooks based on these queries, providing predefined queries that can be used in dashboards.

In the second demo, the Logic App setup was a bit different, with extra actions that call out to the Log Analytics Data Collector. This collector makes it possible to send custom messages/data to Log Analytics, which in turn can be used to create simpler and more powerful queries.

With this option, Log Analytics is still used, but the Logic Apps write custom messages to it. Because everything is in the Log Analytics workspace, the Workbooks option remains available.

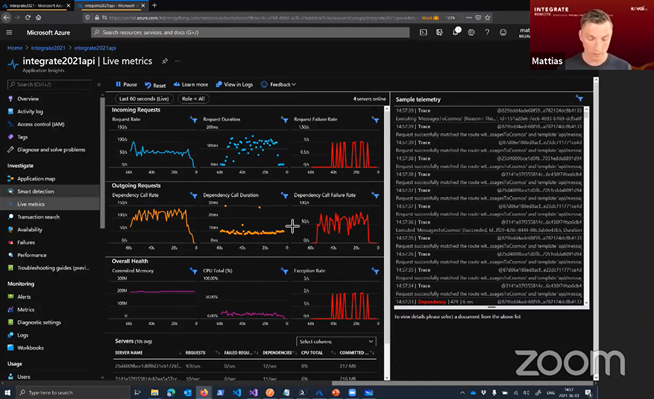

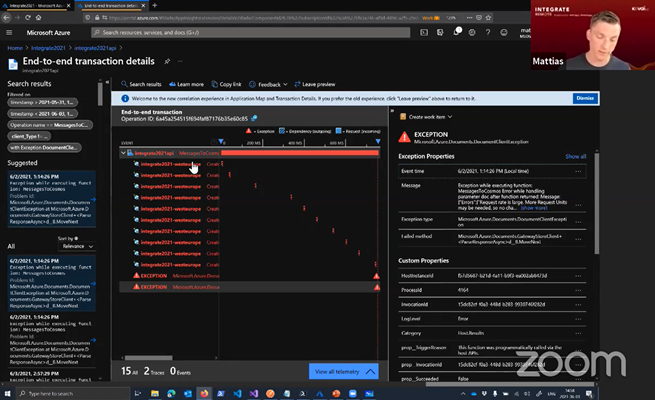

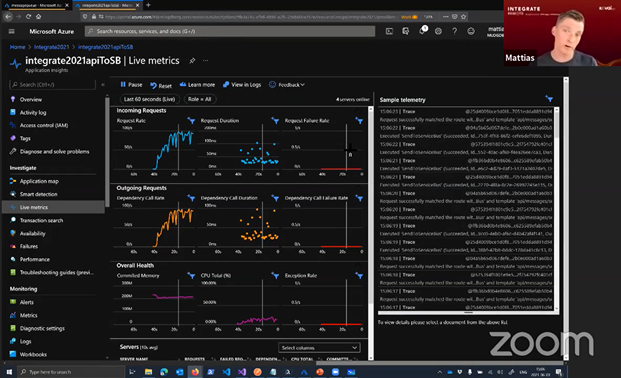

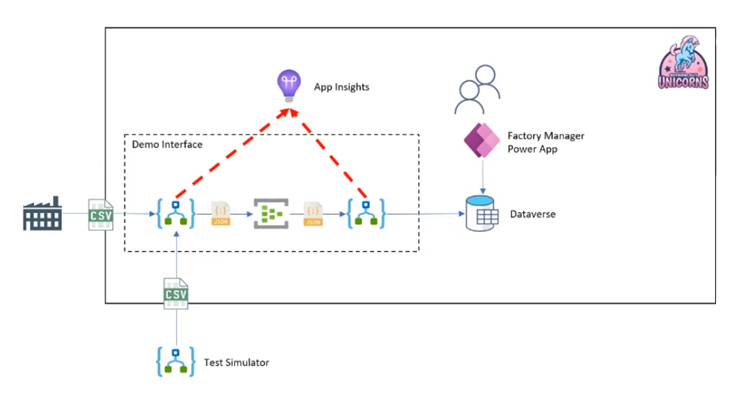
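In the demo, the Logic Apps used the Azure Log Analytics Data Collector connector. As far as we know, that connector sits on top of the HTTP Data Collector API, whose requests are signed with the workspace's shared key. The Python sketch below builds such a request following Microsoft's published signing scheme; it only constructs the URL and headers and sends nothing. The workspace ID, key and log type are placeholders.

```python
import base64
import hashlib
import hmac
from email.utils import formatdate
from typing import Dict, Tuple


def build_signature(workspace_id: str, shared_key: str, date: str, content_length: int) -> str:
    """Authorization header value for POST /api/logs (SharedKey scheme)."""
    string_to_sign = f"POST\n{content_length}\napplication/json\nx-ms-date:{date}\n/api/logs"
    decoded_key = base64.b64decode(shared_key)  # the workspace key is base64-encoded
    digest = hmac.new(decoded_key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode()}"


def build_request(workspace_id: str, shared_key: str, log_type: str, body: bytes) -> Tuple[str, Dict[str, str]]:
    """Return the URL and headers for sending a JSON body as custom log records."""
    date = formatdate(usegmt=True)  # RFC 1123 date, e.g. 'Mon, 01 Jan 2024 00:00:00 GMT'
    headers = {
        "Content-Type": "application/json",
        "Authorization": build_signature(workspace_id, shared_key, date, len(body)),
        "Log-Type": log_type,  # records end up in a custom table named <Log-Type>_CL
        "x-ms-date": date,
    }
    url = f"https://{workspace_id}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01"
    return url, headers
```

Note that the content length in the signature must be the byte length of the body exactly as it is sent.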

In the third demo, Michael showed us the same setup but using Application Insights, which is currently only available for the new Logic App Standard.

The advantage of this setup is that there is no need for extra or special actions to create log information. In Application Insights it is possible to do a fuzzy search on the tracked properties to find the correct data.

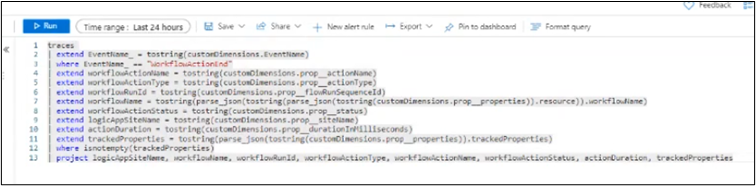

Michael shared with the audience various queries and showed us the level of detail that was possible to get out of it, proving to us how powerful this solution is.

Yet, although powerful, this solution remains very technical, and some data may even be lost under heavy load (due to sampling and data retention policies in Application Insights).

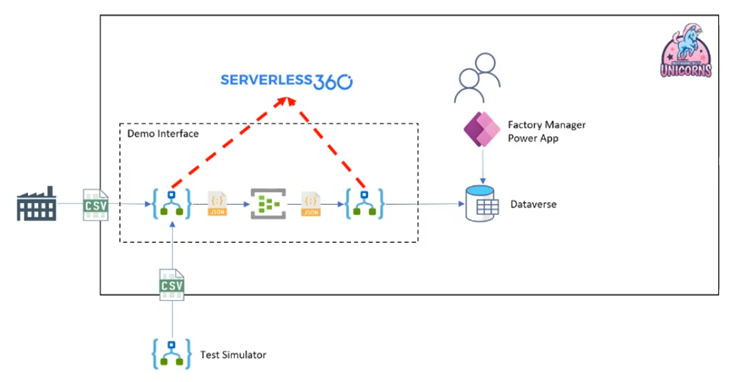

In the fourth and last demo, Michael demonstrated the same solution but using Serverless360 Business Activity Monitoring. For this to work, the Logic Apps need to be extended with extra actions that call out to Serverless360.

Once the data is in Serverless360, the Business Application object shows all relevant data belonging to the same process in a single view.

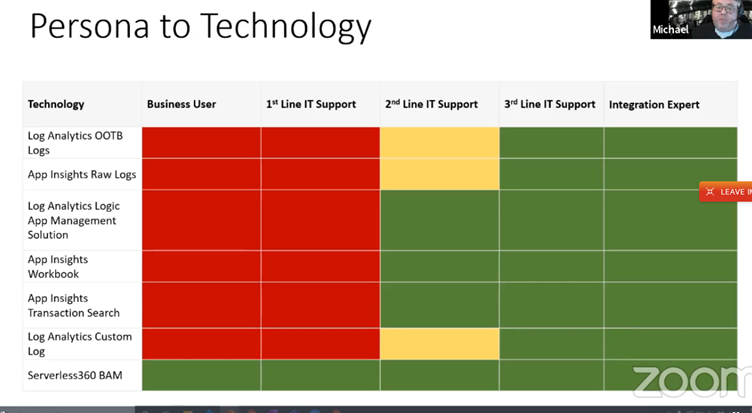

Getting closer to the end of the session, Michael gave a nice overview of the monitoring options he worked with, illustrating the various Azure monitoring services, how they map to Logic Apps, Power Automate, Functions and API Management, and also how they fit the various IT support roles.

Based on this overview, Michael concluded that, unfortunately, the monitoring services available today are not useful for end/business users, except for Serverless360 BAM. Based on his real-world experience, Michael also shared a table summarizing the technologies he uses in his projects.

To close the session, Michael shared the link to his Integration Playbook project, which also has an article on this topic.

The article can be found at https://www.integration-playbook.io/docs/compare-la-appinsights-bam-overview#.

Azure Event Hubs update

The Azure messaging fleet provides a variety of messaging services. However, it is important to choose the right tool for the job.

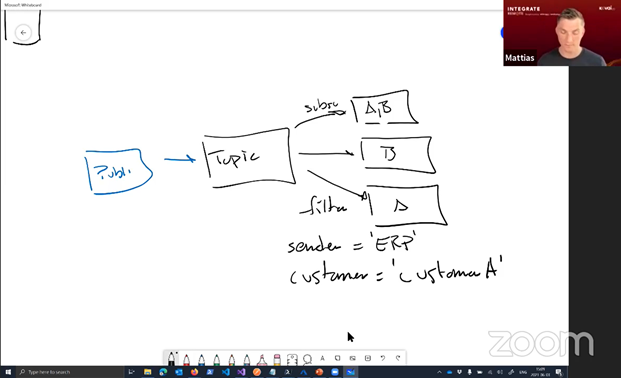

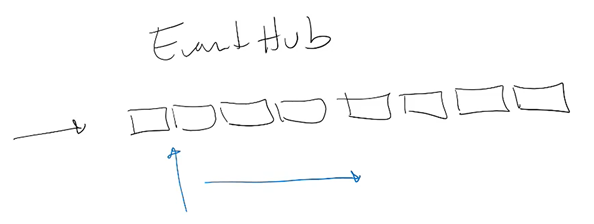

As Kevin Lam put it, you don't want to use a spork to eat your sushi. Event Hubs lets you analyze patterns in real time; it works in a push-pull model, and the stream can be replayed as often as you like. The main difference between Event Hubs and queues is that Event Hubs records a stream and partitions the data.

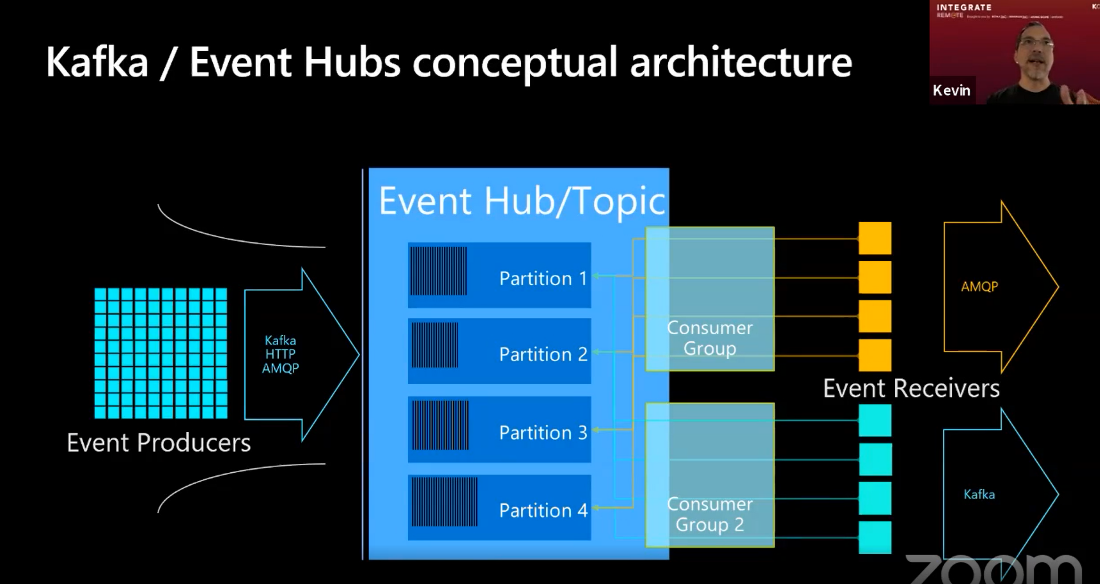

Kafka and Event Hubs share the same conceptual architecture: a set of producers sends events to the event hub (or Kafka topic) through a variety of protocols. In the event hub, the messages are partitioned based on a partition ID. Receivers read through a consumer group, which listens to all the partitions. It is the consumer group's responsibility to keep track of where it is and what it cares about while reading. This differs from queues, where this is the server's responsibility. As a result, one consumer group can read using AMQP while another reads using the Kafka protocol.
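The toy model below illustrates two ideas from this paragraph: events with the same partition key always land on the same partition, and each consumer group tracks its own read position on the client side. It is not the Event Hubs SDK; the class names and the SHA-256-based key hashing are assumptions for illustration (the service uses its own hashing).

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Tuple


class EventHubSketch:
    """Toy append-only stream split into partitions."""

    def __init__(self, partition_count: int) -> None:
        self.partitions: List[List[str]] = [[] for _ in range(partition_count)]

    def partition_for(self, partition_key: str) -> int:
        # Stable hash: the same key always maps to the same partition.
        digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
        return int.from_bytes(digest[:4], "big") % len(self.partitions)

    def send(self, partition_key: str, event: str) -> None:
        self.partitions[self.partition_for(partition_key)].append(event)


class ConsumerGroup:
    """Reads all partitions; the group, not the broker, remembers its offsets."""

    def __init__(self, hub: EventHubSketch) -> None:
        self.hub = hub
        self.offsets: Dict[int, int] = defaultdict(int)

    def read_new(self) -> List[Tuple[int, str]]:
        events: List[Tuple[int, str]] = []
        for pid, partition in enumerate(self.hub.partitions):
            events.extend((pid, e) for e in partition[self.offsets[pid]:])
            self.offsets[pid] = len(partition)  # checkpoint after reading
        return events

    def rewind(self) -> None:
        """Replay: events stay in the stream, so resetting offsets re-reads them."""
        self.offsets.clear()
```

Two `ConsumerGroup` instances on the same hub each see every event independently, which is why one group could in principle be an AMQP reader and another a Kafka client.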

Kafka with Event Hubs

Following up on the brief introduction to what an event hub is, Kevin explained that Event Hubs supports Apache Kafka. It does so by implementing the Kafka protocol head, without hosting or running Kafka itself. This open approach makes Event Hubs a single broker that supports HTTP, AMQP and Kafka. By choosing Event Hubs for Kafka, you get a fully managed, highly scalable PaaS that makes it easier to move data to the cloud and integrate with Azure services.
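Because Event Hubs implements the Kafka protocol head, an existing Kafka client can usually be pointed at it through configuration alone. A minimal sketch, assuming a librdkafka-based client such as confluent-kafka (the keys below are librdkafka configuration names) and a namespace-level connection string; the namespace and key values are placeholders.

```python
from typing import Dict


def kafka_config_for_event_hubs(connection_string: str) -> Dict[str, str]:
    """Build Kafka client settings for an Event Hubs namespace from its connection string."""
    # Split on the first '=' only: SharedAccessKey values may end with '=' padding.
    parts = dict(
        part.split("=", 1) for part in connection_string.strip(";").split(";") if part
    )
    endpoint = parts["Endpoint"]  # e.g. sb://<namespace>.servicebus.windows.net/
    if endpoint.startswith("sb://"):
        endpoint = endpoint[len("sb://"):]
    host = endpoint.rstrip("/")
    return {
        "bootstrap.servers": f"{host}:9093",  # Event Hubs' Kafka endpoint port
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.username": "$ConnectionString",  # literal value, not a variable
        "sasl.password": connection_string,
    }
```

The resulting dictionary can then be passed to a client, for example `confluent_kafka.Producer(config)`, without changing any producer code.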

Event hubs capabilities

Some of the key Event Hubs capabilities were highlighted:

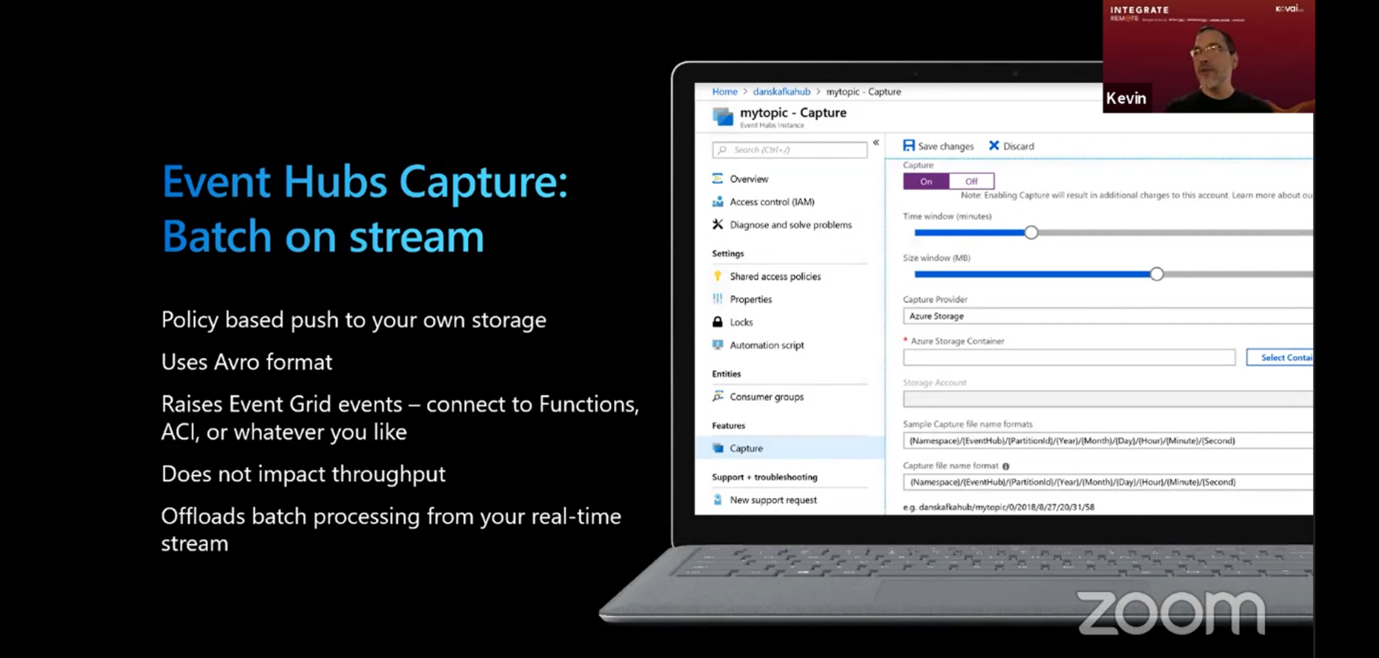

Event Hubs Capture: Batch on stream

Event Hubs Capture allows a policy-based push to your own storage. It uses the Avro format, which is self-describing: the schema is stored together with the data itself. When a batch is created, an event can trigger Azure Functions, Logic Apps or container instances.

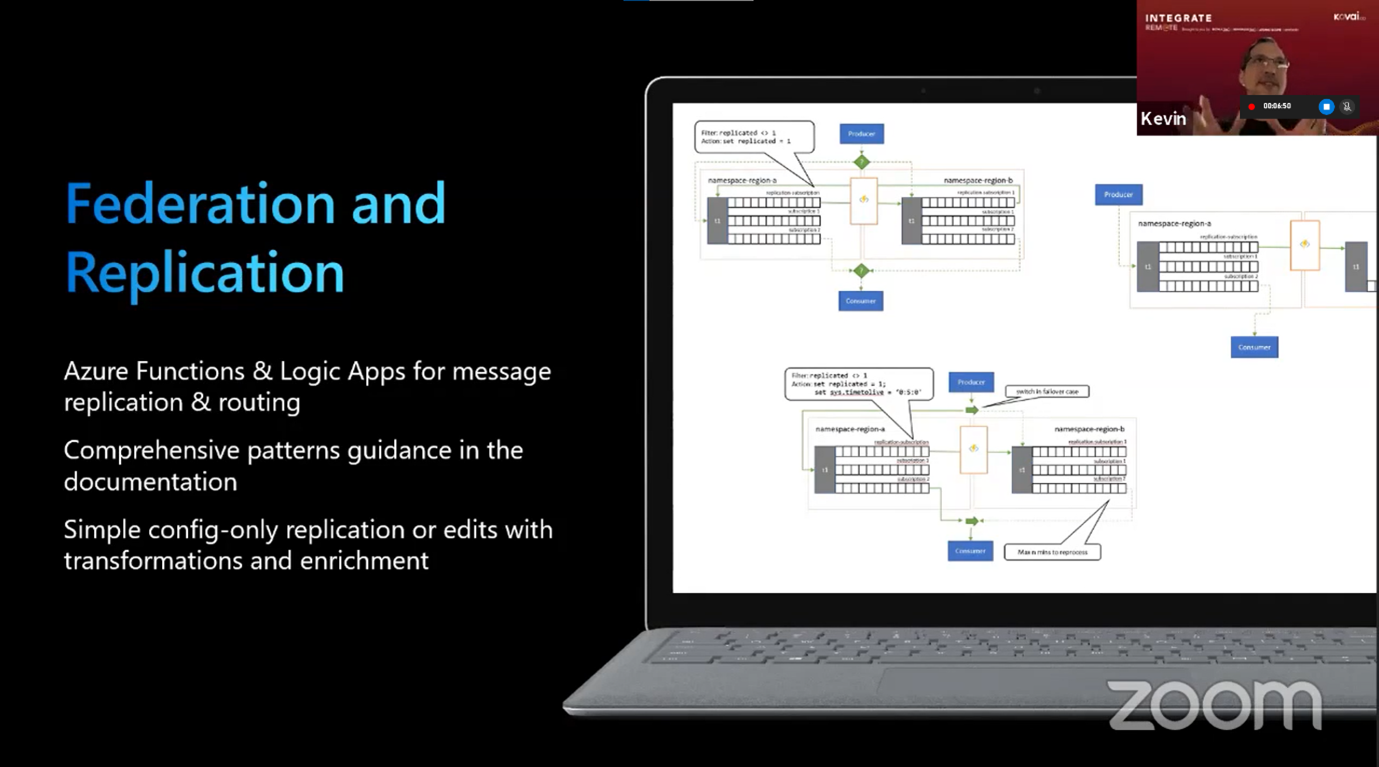

Federation and Replication is important for Event Hubs because it provides the ability to replicate events to another event hub, a different messaging entity or another service. There are config-only functions available, where all you need to do is describe the input and output. However, it is also possible to crack these open and perform transformations or enrichments with Functions or Logic Apps.
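The difference between a config-only replication and one you "crack open" can be sketched as a copy loop with an optional transform hook. This is a conceptual illustration only, not the actual replication functions: plain Python lists stand in for the source and target event hubs, and the function and its parameters are hypothetical.

```python
from typing import Callable, Iterable, List, Optional

Event = dict
Transform = Callable[[Event], Optional[Event]]


def replicate(source: Iterable[Event], target: List[Event], transform: Optional[Transform] = None) -> int:
    """Copy events from source to target and return how many were forwarded.

    transform=None models a config-only replication (input and output only);
    a transform can enrich events, or return None to filter an event out.
    """
    forwarded = 0
    for event in source:
        out = transform(event) if transform else event
        if out is None:
            continue
        target.append(out)
        forwarded += 1
    return forwarded
```

For example, passing `lambda e: {**e, "region": "westeurope"}` as the transform enriches every replicated event with an extra field.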

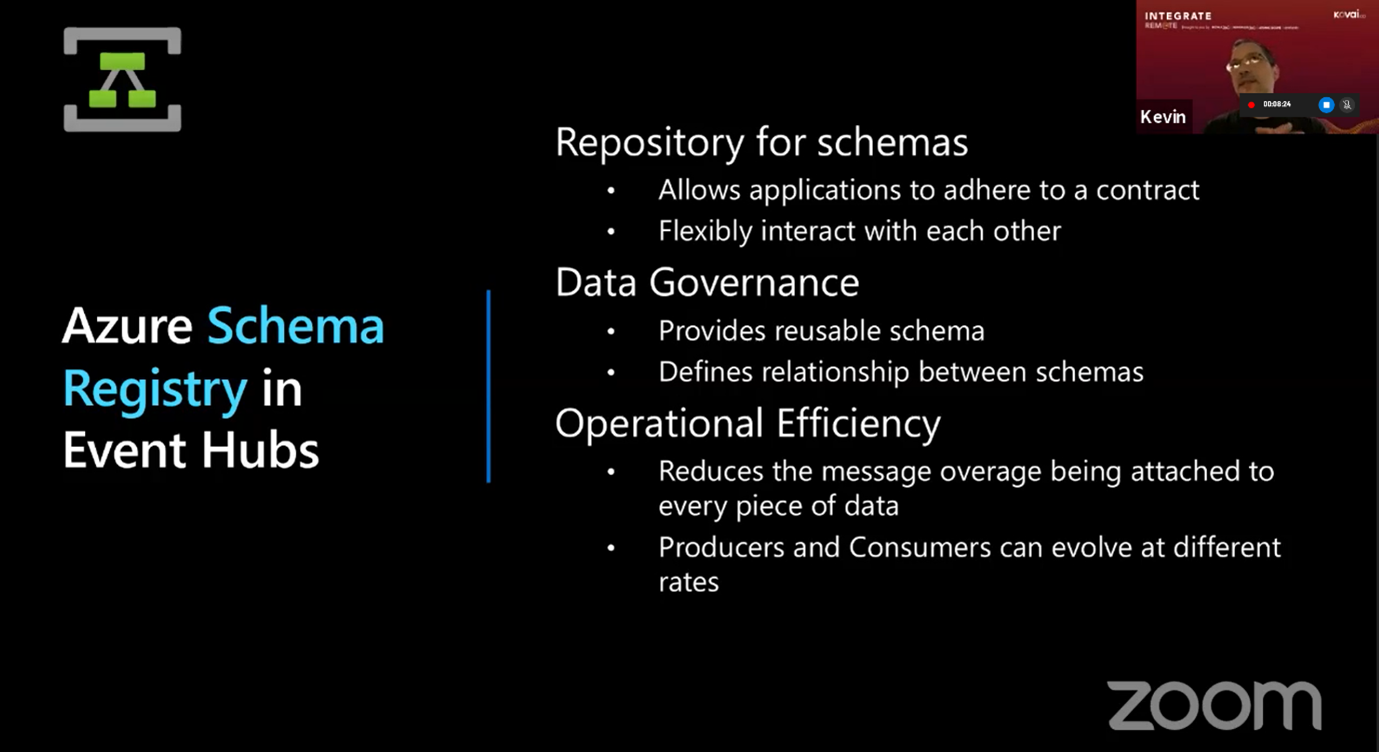

Schema Registry provides a repository of schemas used to perform message validation. This repository allows schemas to be reused, so one schema can now be used by multiple event hubs. This is a major benefit, as it makes management easier. Using the schema registry also increases efficiency: where previously the schema was attached to each message, the message now contains only a reference to the schema.

Several other features were also mentioned:

- Stream Analytics to test your queries or preview Event Hubs data in the Azure portal
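The efficiency gain comes from sending a schema ID instead of the schema itself. The toy registry below illustrates this; it is not the Azure Schema Registry SDK. JSON stands in for Avro, and the ID format and the "required fields" validation are simplifying assumptions.

```python
import json
from typing import Dict


class SchemaRegistrySketch:
    """Stores each distinct schema once and hands out a reusable ID."""

    def __init__(self) -> None:
        self._by_id: Dict[str, dict] = {}
        self._ids: Dict[str, str] = {}

    def register(self, schema: dict) -> str:
        canonical = json.dumps(schema, sort_keys=True)
        if canonical not in self._ids:  # the same schema is registered only once
            schema_id = f"schema-{len(self._ids) + 1}"
            self._ids[canonical] = schema_id
            self._by_id[schema_id] = schema
        return self._ids[canonical]

    def get(self, schema_id: str) -> dict:
        return self._by_id[schema_id]


def encode(registry: SchemaRegistrySketch, schema: dict, record: dict) -> bytes:
    """The message carries only a schema reference, not the schema itself."""
    return json.dumps({"schemaId": registry.register(schema), "data": record}).encode("utf-8")


def decode(registry: SchemaRegistrySketch, payload: bytes) -> dict:
    """Look up the schema by ID and validate that all declared fields are present."""
    envelope = json.loads(payload)
    schema = registry.get(envelope["schemaId"])
    missing = [f["name"] for f in schema["fields"] if f["name"] not in envelope["data"]]
    if missing:
        raise ValueError(f"record is missing fields: {missing}")
    return envelope["data"]
```

Every producer that uses the same schema gets the same ID, so any number of event hubs can share one registered schema.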

- AAD integration for RBAC, Conditional Access and Managed Identities

- Customer Managed Keys (BYOK)

- Security: always encrypted

- VNET support with private endpoints and IP Filtering

- Auto scale-up with Auto Inflate

- Geo-DR (metadata)

- Availability on Azure Stack Hub

What’s New

The newest additions to Azure Event Hubs are:

- Idempotent producer for the Event Hubs client

- Event Hubs on Azure Stack Hub

- 1000 namespaces per subscription

- 40 throughput units for the Standard tier

- Schema Registry (preview, discussed above)

- Event Hubs Premium tier (preview)

Event Hubs Premium

The Premium tier has been added to the three existing hosting models (Standard, Dedicated and Azure Stack Hub). Features included in the Premium offering are:

- Deployment across availability zones

- Private Links

- Customer Managed Keys

- Capture included in the costs

- Extended retention

- Dynamic partition scale-up, to increase the number of partitions without recreating the event hub

- Extended and increased limits:

  - 100 partitions per event hub

  - 100 consumer groups per event hub

  - 10,000 brokered connections

  - 100 schema groups

  - 100 MB of schema storage

  - 90 days of message retention

  - 1 TB of storage included per PU (processing unit)

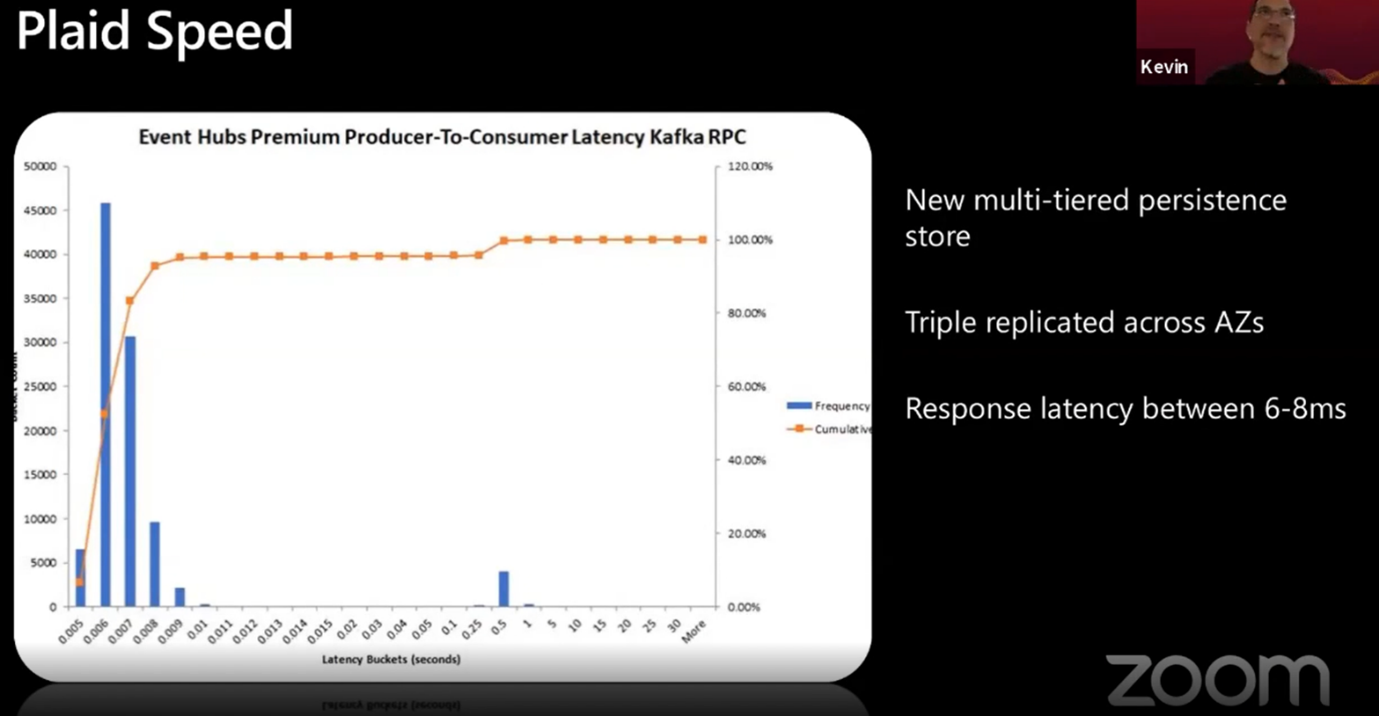

By rebuilding the broker, the development team created a multi-tier persistence store in which the hot path goes to local disks and the overflow goes to storage. This resulted in impressive performance, with response latencies of 6 to 8 ms. Those with a keen eye will indeed notice a small latency peak at 500 ms, but this is a bug that will be fixed before GA.
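To picture the multi-tier idea, here is a toy two-tier log: the most recent events stay in a bounded "hot" tier (standing in for local disk), and older events overflow to a "cold" tier (standing in for storage). This is only a conceptual sketch with a hypothetical capacity parameter; the actual broker internals were not shown in the session.

```python
from collections import OrderedDict
from typing import Dict


class TieredLog:
    """Recent events live in a bounded hot tier; older ones overflow to a cold tier."""

    def __init__(self, hot_capacity: int) -> None:
        self.hot_capacity = hot_capacity
        self.hot: "OrderedDict[int, str]" = OrderedDict()  # stand-in for local disk
        self.cold: Dict[int, str] = {}  # stand-in for remote storage
        self.next_offset = 0

    def append(self, event: str) -> int:
        offset = self.next_offset
        self.hot[offset] = event  # writes always hit the fast hot path
        self.next_offset += 1
        while len(self.hot) > self.hot_capacity:
            old_offset, old_event = self.hot.popitem(last=False)  # evict the oldest
            self.cold[old_offset] = old_event
        return offset

    def read(self, offset: int) -> str:
        # Recent reads are served from the hot tier; older offsets come from storage.
        if offset in self.hot:
            return self.hot[offset]
        return self.cold[offset]
```

Writes and reads of recent data never touch the slower tier, which is what keeps typical latency low, while older data remains readable from storage.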

What’s Coming

To conclude his talk, Kevin summarized what is coming up for Event Hubs:

- Managed identity for Capture, allowing a user-assigned or system-assigned managed identity to authenticate with the storage account. This removes the requirement for the storage account to be in the same subscription.

- Self-serve Dedicated, so that a dedicated Event Hubs cluster does not have to be pre-deployed but can be defined as dedicated right from deployment. This also allows autoscaling.

- Schema registry to GA

- Premium offering to GA

- Kafka compacted topics

- Data replication

- Capture on Azure Stack Hub

Special Thanks

3 days of integration, 28 interesting sessions: Integrate Remote 2021 was one to remember! We want to thank all the speakers for inspiring us and, of course, the Integrate team for the organization.

And a very special thanks to our Coditers who took the time to write down all these great highlights:

Bram Takken, Brice Guidez, Camil Iovanas, Carl Batisse, Chiranjib Ghatak, Daniel Abadias, Jasper Defesche, Jef Cools, Jesse Van der Ceelen, Marc Fellman, Martin Peters, Michel Pauwels, Miriam Roccella, Peter Brouwer, Pim Simons, Ricardo Marques, Ronald Lokers, Ruud Wichers Schreur & Vincent ter Maat.
