Microsoft's Event Bus in the Cloud

In this session, Steef-Jan Wiggers showed us how to implement Azure Event Grid in your integration architectures. He started with an example of processing events from Dynamics 365: Dynamics sends business events to an Event Grid topic, the event is delivered to a Logic App subscriber that enriches it by calling Dynamics 365, and finally the enriched message is written to file storage.

Next, Steef-Jan talked about the main benefits of an Event Driven Architecture:

- Near real-time processing

- Loose coupling

- Plug and play

- Data exchange based on events

He mentioned that Event Driven Architecture isn’t necessarily a new concept. In a way, it is something we have been doing in BizTalk for years with the publish-subscribe pattern. While that is more about messaging, the concept is the same: both are about loose coupling.

It is important to know when to use an Event Driven Architecture. It is mainly applicable when you need real-time processing and have a high volume and high velocity of data, which makes it the method of choice for IoT-type scenarios.

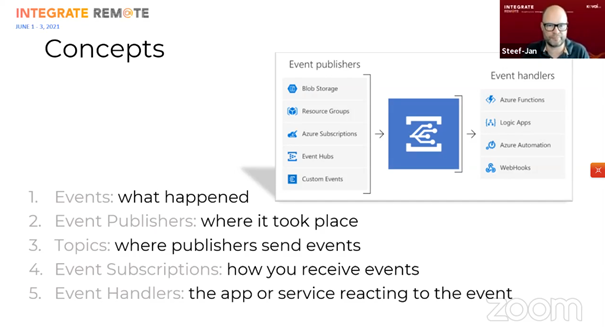

To implement an Event Driven Architecture in Azure we need to use Azure Event Grid. With Azure Event Grid you can specify Event Sources and Event Handlers. Azure Event Grid implements the following concepts:

With Azure Event Grid, you can choose between two different event schemas:

- Proprietary (Microsoft)

- CloudEvents (CNCF)

Both have a data property, but note that misusing this property is discouraged: we are dealing with events, not messages.
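To make the difference between the two schemas concrete, here is a minimal sketch that builds the same event in both envelopes. The field names follow the Event Grid schema and the CloudEvents 1.0 JSON format; the event type, subject, source and payload are hypothetical values chosen for illustration.

```python
import json
import uuid
from datetime import datetime, timezone


def event_grid_event(subject: str, event_type: str, data: dict) -> dict:
    """Build an event using the Event Grid (Microsoft) schema field names."""
    return {
        "id": str(uuid.uuid4()),
        "subject": subject,
        "eventType": event_type,
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "data": data,
        "dataVersion": "1.0",
    }


def cloud_event(source: str, event_type: str, subject: str, data: dict) -> dict:
    """Build an event using the CloudEvents 1.0 (CNCF) JSON attribute names."""
    return {
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": source,
        "type": event_type,
        "subject": subject,
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        "data": data,
    }


# Hypothetical business event: the payload is a reference, not the full record.
payload = {"orderId": "SO-1001"}
print(json.dumps(event_grid_event("/orders/SO-1001", "Contoso.Orders.Created", payload), indent=2))
print(json.dumps(cloud_event("/contoso/orders", "Contoso.Orders.Created", "SO-1001", payload), indent=2))
```

In both cases the data property only carries an identifier, in line with the advice to treat events as notifications rather than messages.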

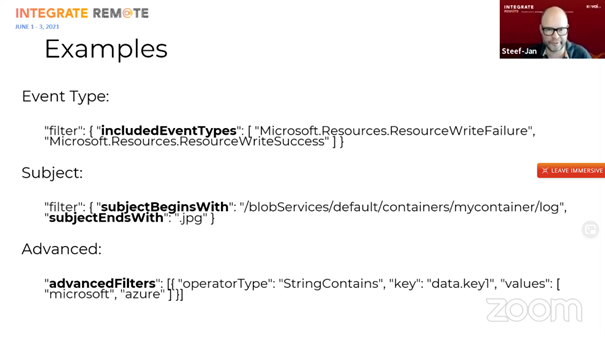

Routing of these events is based on subject, type or data:

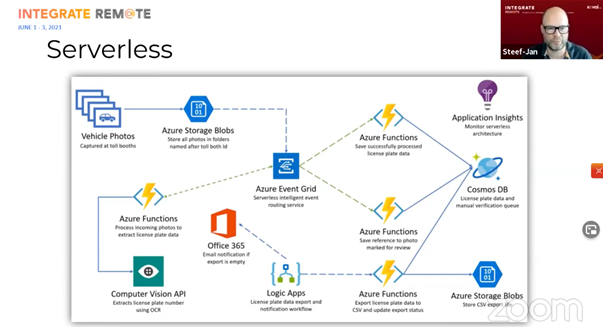
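The routing idea can be sketched locally without any Azure dependency. The example below is an in-memory stand-in, not the Event Grid SDK: the `Subscription` class and its fields are hypothetical names that loosely mirror filtering on subject prefix and suffix, event type, and values in the data property.

```python
from dataclasses import dataclass, field


@dataclass
class Subscription:
    """A local stand-in for an event subscription filter (not the Azure SDK)."""
    name: str
    subject_begins_with: str = ""
    subject_ends_with: str = ""
    included_event_types: list = field(default_factory=list)  # empty means all types
    data_equals: dict = field(default_factory=dict)  # simplified filter on data values

    def matches(self, event: dict) -> bool:
        subject = event.get("subject", "")
        if not subject.startswith(self.subject_begins_with):
            return False
        if not subject.endswith(self.subject_ends_with):
            return False
        if self.included_event_types and event.get("eventType") not in self.included_event_types:
            return False
        data = event.get("data", {})
        return all(data.get(key) == value for key, value in self.data_equals.items())


def route(event: dict, subscriptions: list) -> list:
    """Return the names of all subscriptions whose filter accepts the event."""
    return [s.name for s in subscriptions if s.matches(event)]


subscriptions = [
    Subscription("all-images", subject_begins_with="/tollbooth/", subject_ends_with=".jpg"),
    Subscription("plate-found", included_event_types=["Plate.Recognized"]),
    Subscription("booth-7", data_equals={"boothId": 7}),
]

event = {
    "subject": "/tollbooth/7/car-0001.jpg",
    "eventType": "Image.Uploaded",
    "data": {"boothId": 7},
}
print(route(event, subscriptions))  # ['all-images', 'booth-7']
```

The event reaches every subscription whose filter accepts it, which is what keeps publishers and subscribers loosely coupled.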

Next, Steef-Jan gave a demo of an application that processes image files from toll booths and retrieves the license plate of cars.

Here the Event Grid takes a central place in the architecture where a large volume of data is expected.

However, there are some important points to remember when using Azure Event Grid. For example, make sure that you use dead-lettering for events that fail. Make sure that your event is not misused as a message carrying a lot of data, since the size limit of an event is 64 KB. And don’t forget that you can use Event Grid Domains to manage the flow of events.
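A simple guard on the publisher side can catch events that have grown into messages. This is a minimal sketch that assumes the 64 KB limit quoted in the session and measures the event as compact UTF-8 JSON; the event contents and the URL are hypothetical.

```python
import json

MAX_EVENT_BYTES = 64 * 1024  # the limit quoted in the session; adjust if yours differs


def event_size(event: dict) -> int:
    """Size in bytes of the event when serialized as compact UTF-8 JSON."""
    return len(json.dumps(event, separators=(",", ":")).encode("utf-8"))


def fits_in_event(event: dict, limit: int = MAX_EVENT_BYTES) -> bool:
    """True when the serialized event stays within the size limit."""
    return event_size(event) <= limit


# An event that only points at the data stays small.
reference_event = {
    "subject": "/tollbooth/7/car-0001.jpg",
    "eventType": "Image.Uploaded",
    "data": {"blobUrl": "https://example.invalid/images/car-0001.jpg"},
}
# An event that embeds the content behaves like a message and exceeds the limit.
embedded_event = dict(reference_event, data={"imageBase64": "A" * 100_000})

print(fits_in_event(reference_event))  # True
print(fits_in_event(embedded_event))   # False
```

Sending a reference to the stored data instead of the data itself keeps the event small and leaves it to the subscriber to decide whether to fetch the content.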

Interestingly, Steef-Jan also showed us some competitors to Azure Event Grid, such as

- AWS EventBridge

- Google Eventarc

- TriggerMesh

While these services all give us the same base functionality, it would be interesting to have a more detailed comparison of, for example, performance and running costs, but that is probably something for a different session.

As a final takeaway, he mentioned that Azure Event Grid is proven technology based on a modern architecture: it is a mature service for event-driven solutions and has a low barrier to entry. This wrapped up a nice session about how to use eventing in your architectures based on Azure Event Grid.

New Scenarios with Logic Apps by Wagner Silveira

Azure Logic Apps is evolving! A new runtime is now in public preview, along with an improved designer, different execution models, and more. This enables scenarios that were complex or not possible in the past and will affect some of your previous and future design decisions.

Identity Based Authentication

Before, unless you hid the Logic Apps behind API Management, the only type of inbound security was shared keys. From the outbound point of view, you could associate an identity with connectors, but it belonged to each one of them. There wasn’t a way to identify the Logic App itself with a system identity.

What’s coming up? OAuth-based inbound authentication will be available for Logic Apps on the Consumption model, but not yet on the Standard model. The ability to use Managed Identities will also be available for certain outbound connectors.

State

The stateful model is a little bit slower compared to other technologies but very popular due to easy adoption. In terms of auditability, resubmission and troubleshooting, you know exactly what happened. The run history even shows data that could in theory be private, so in some cases you have to go to extra lengths to secure it.

Now you have a stateless option where you have a lot more throughput. It will be cheaper compared to stateful workflows because you’re not doing all the operations that involve state and will favor data privacy. You still have the choice to combine the best of both when you need to.

Things to bear in mind: selecting between stateless and stateful is a design decision. Moving from stateless to stateful is relatively easy because everything that you have on stateless you would have on stateful. But this is not true the other way round. For example, you don’t have the ability to have managed connection triggers. All the triggers that are on stateless are the built-in triggers. Another thing to keep in mind is that variables are not available on stateless.
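The design decision described above can be written down as a small checklist. This is a sketch only: the function and parameter names are hypothetical, and the rules encode just the constraints mentioned in the session (managed connection triggers, variables, and the run history that stateful workflows keep).

```python
def choose_workflow_kind(needs_managed_connection_trigger: bool,
                         needs_variables: bool,
                         needs_run_history: bool) -> str:
    """Pick a workflow kind based on the constraints named in the session."""
    # Any feature that stateless workflows lack forces the stateful model.
    if needs_managed_connection_trigger or needs_variables or needs_run_history:
        return "Stateful"
    # Otherwise prefer stateless for throughput, cost and data privacy.
    return "Stateless"


print(choose_workflow_kind(False, False, False))  # Stateless
print(choose_workflow_kind(False, True, False))   # Stateful
```

Because a stateless workflow only uses features that stateful also has, starting stateless and switching later is the cheaper direction to get wrong.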

Logic Apps Anywhere

You have probably heard a lot about this already, but in the past Logic Apps could not run everywhere. A Logic App was an Azure-only component, bound to a Resource Group. You had to treat each one individually, and parameterize and deploy it with its own components.

Now you can choose where you want to deploy, on Azure, on-prem or on any other clouds, having control over the packaging, deployment, compute, consumption and bundling, taking advantage of a smaller and reusable building block.

What are the gotchas in this case? The main thing is your choice of storage. For example, if I use Logic Apps on Docker, I’m not totally disconnected from Azure. SQL storage is a viable option that is currently in private preview.

Private network integration

In the past we only had the ability to do outbound integration. You could connect to a VM on a virtual network, but always through the on-premises data gateway.

What is new now? VNet integration and hybrid connections, an evolution of what the on-premises data gateway offered. In terms of inbound options, we now have Private Endpoints.

Gotcha: Selected connectors only.

Key Takeaways from this session:

Logic Apps opened a lot of new scenarios, bringing Workflows closer to app development, but it is still a new technology and there are gaps that might affect your design. Make sure you understand your choices. Don’t discard consumption, it is part of the puzzle too.

Integration beyond point to point; Implementing integration patterns using Azure by Eldert Grootenboer

In this session Eldert discussed the various patterns available to prevent point-to-point integrations, which quite often lead to a poor overview of the integration landscape. The patterns are described in Enterprise Integration Patterns.

As Eldert discussed, patterns are very important to consider. Patterns are proven solutions for recurring problems, based on expert experience and knowledge. Another big advantage of using patterns is that other people don’t face a steep learning curve when joining the project or organization.

Eldert argues that a lot of the existing integration patterns can be implemented using the services and tools available in Azure. He thinks it’s important to not only use a single technology but compose the various services when implementing a pattern. And of course, there are often multiple options to achieve the same goal. Codit agrees with this, but thinks that the architect within the organization should document and prescribe the preferred solution to prevent a myriad of implementations achieving the same thing.

It is also highly recommended to take a look at the Azure Architecture Center to see Microsoft’s view on various patterns and the preferred way to implement them.

Eldert continues to show us some of the more common patterns used in integration.

Channel Adapter

In Azure, the Channel Adapter would most likely be an APIM endpoint linked to a Logic App. The channel pushes the message to the recipient, which is a role for Service Bus. The Message Endpoint is most likely a Logic App that receives and processes the message.

Message router

The Message Router routes messages based on content and/or message properties. This can be achieved by Service Bus Topics and Subscriptions.
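The router can be illustrated with an in-memory stand-in for a topic with filtered subscriptions. This is not the Service Bus SDK: the `Topic` class is hypothetical, and the sketch assumes that any content value you want to route on has been copied into the message properties by the sender.

```python
from collections import defaultdict


class Topic:
    """In-memory stand-in for a topic with filtered subscriptions."""

    def __init__(self):
        self._rules = {}
        self.delivered = defaultdict(list)

    def add_subscription(self, name, rule):
        """Register a subscription; rule is a predicate over message properties."""
        self._rules[name] = rule

    def send(self, body, properties):
        """Copy the message to every subscription whose rule accepts it."""
        for name, rule in self._rules.items():
            if rule(properties):
                self.delivered[name].append(body)


orders = Topic()
orders.add_subscription("eu-orders", lambda p: p.get("region") == "EU")
orders.add_subscription("large-orders", lambda p: p.get("amount", 0) > 1000)
orders.add_subscription("audit", lambda p: True)

orders.send({"orderId": 1}, {"region": "EU", "amount": 250})
orders.send({"orderId": 2}, {"region": "US", "amount": 5000})

print(dict(orders.delivered))
```

The sender never names a recipient; adding a new route is a matter of adding a subscription with its own rule.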

Pipes & Filters

Eldert showed a sample where a function is called by a logic app that filters out messages.
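As a rough illustration of the pattern itself, independent of Logic Apps and Functions, the sketch below chains small filter steps into a pipeline. The filter names and message fields are hypothetical; a step returns the (possibly changed) message, or `None` to drop it.

```python
from typing import Callable, Optional

Message = dict
Filter = Callable[[Message], Optional[Message]]


def pipeline(*filters: Filter) -> Filter:
    """Compose filters into one step; a filter returning None drops the message."""
    def run(message: Message) -> Optional[Message]:
        current: Optional[Message] = message
        for step in filters:
            current = step(current)
            if current is None:
                return None
        return current
    return run


def drop_test_messages(message: Message) -> Optional[Message]:
    # Filter step: discard anything flagged as a test message.
    return None if message.get("isTest") else message


def normalize_country(message: Message) -> Optional[Message]:
    # Transform step: trim and upper-case the country code.
    return {**message, "country": message.get("country", "").strip().upper()}


process = pipeline(drop_test_messages, normalize_country)
print(process({"id": 1, "country": " nl "}))  # {'id': 1, 'country': 'NL'}
print(process({"id": 2, "isTest": True}))     # None
```

Each step knows nothing about its neighbours, so steps can be reordered, replaced or reused in other pipelines.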

Message Translator

The Message Translator pattern describes a component that transforms messages from one format to another. This can be done using XSLT, Liquid or custom code.
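For the custom code option, a translator can be as small as a single mapping function. The sketch below assumes two hypothetical order formats; none of the field names come from the session.

```python
def translate_order(source: dict) -> dict:
    """Map a hypothetical source order format to a hypothetical target format."""
    return {
        "orderNumber": source["OrderId"],
        "customer": {"name": source["CustomerName"].title()},
        # Convert a decimal string amount to whole cents.
        "totalCents": round(float(source["Total"]) * 100),
    }


print(translate_order({"OrderId": "SO-1001", "CustomerName": "ada lovelace", "Total": "19.99"}))
```

Keeping the mapping in one place means sender and receiver can each keep their own format.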

Pub/sub channel

The pub/sub channel pattern describes a message being published and received by a multitude of recipients (subscribers). For messaging, Codit believes the more appropriate choice would be Service Bus Topics and Subscriptions. Eldert, however, suggests the use of Event Grid, where the message content is included in the event.

In summary, Eldert did a nice job reminding us that integration is not just linking source and target systems and we are done. It is much better to look at available patterns and apply these with the most appropriate Azure service(s).

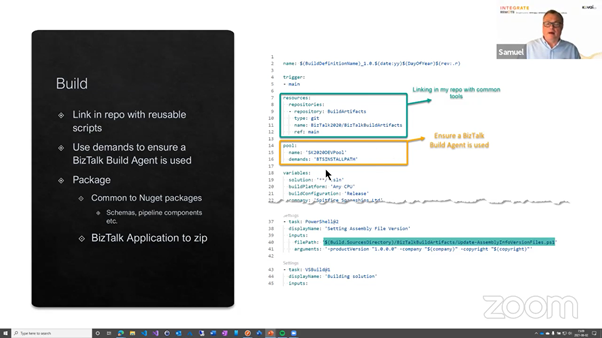

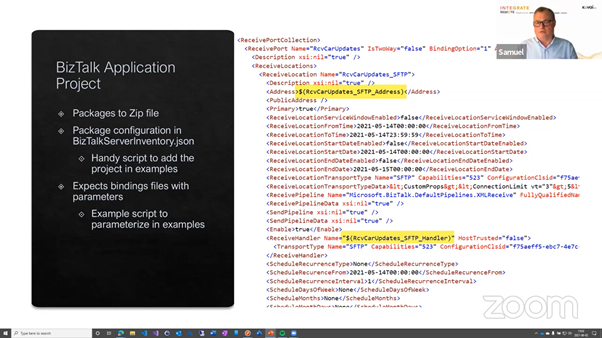

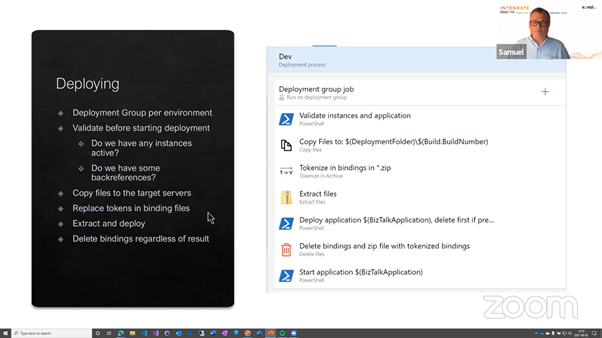

Build and release your BizTalk applications with Azure DevOps

In this presentation, Samuel explained how you can do BizTalk deployments using a BizTalk Application Project. Please note that with the BizTalk Deployment Framework this can be done much more efficiently.

To build the BizTalk projects with DevOps pipelines you need an on-premises build server with a full BizTalk installation. You do not need to configure the BizTalk environment to build the projects on this server.

For this demo, the build pipelines are written in YAML and publish the BizTalk artifacts as a NuGet package in Artifacts.

The release pipeline is done via Classic Pipelines. Samuel has written some PowerShell scripts to do validation and deployment. The release pipelines could have been written in YAML as well. There was no clarification why he chose two different methods for the build and release pipelines.

While this might be an interesting demo for some, one must keep in mind that it makes much more sense to use the BizTalk Deployment Framework (BTDF). It handles a lot of the manual tasks for you, and you can script additional tasks that are required for your application. The Visual Studio Marketplace contains an extension that allows you to deploy BTDF packages.

Azure and BizTalk 2020 - Better Together by Stephen W. Thomas

Stephen Thomas gave a presentation on integrating BizTalk with Azure, going over the Why’s, How’s and Roadblocks of this process with the aid of a short demonstration.

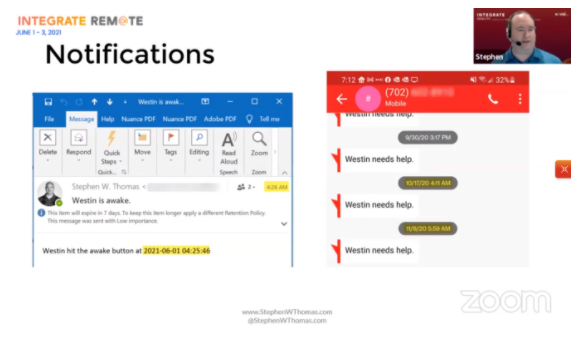

The presentation started off with a non-enterprise example of Azure integration from his personal life to demonstrate the potential use of Logic Apps. Because his son has physical issues and often has surgeries, he requires help from time to time, and his parents are not always around. To this end, he has three Wi-Fi buttons that each trigger a different Logic App, which sends several types of notifications, such as emails and text messages. Data such as when he gets up is also logged.

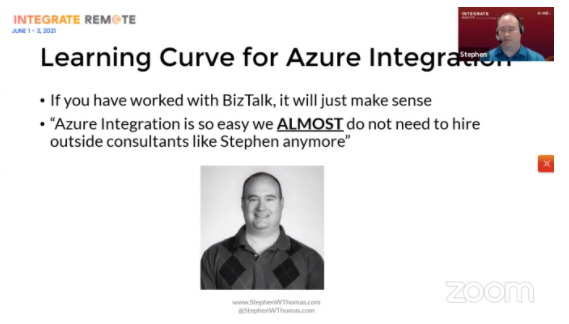

Continuing to talk about the ‘why’ of Azure integration, Stephen noted Azure’s high demand, low entry threshold and its ability to handle many different scenarios. A throughline in the presentation is that BizTalk is very much still alive and kicking: demand for even pure BizTalk solutions is up, despite predictions that BizTalk would start being phased out. Instead, Azure serves as more of a facilitator of integrations and expanding scenarios. One example of such a scenario was a social media connector, allowing for automated brand recognition analysis.

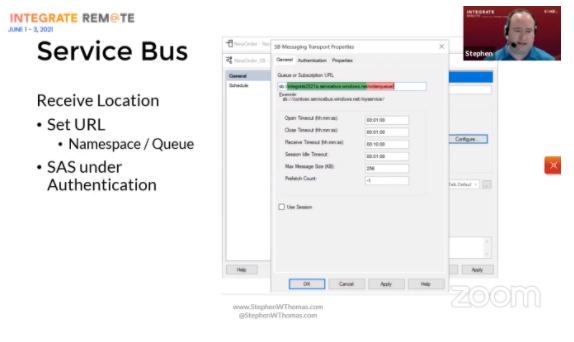

Moving on to the ‘how’, Stephen identified four built-in Azure adapters, for Event Hubs, Service Bus, Blob Storage and Logic Apps, placing particular emphasis on Logic Apps. He gave a brief explanation of how to set up these adapters and highlighted some key features, mainly the ability to easily translate context properties in BizTalk to metadata in Azure. This and other features were also showcased in a short and simple demonstration that showed both the BizTalk and Azure sides.

Finally, Stephen addressed some roadblocks that can make adoption of Azure difficult. This was a balanced assessment, acknowledging the limitations of Azure while also highlighting its flexibility. The low barrier to entry and low costs of simple solutions were particularly highlighted, while a key sticking point was that Azure may not be the best choice for sensitive workloads, though the question of whether it is better to trust your own security team or Microsoft’s was also raised.

Overall, my main takeaway from the presentation is best summarized by a question asked during the Q&A: what will the future of BizTalk be in the face of this new technology? Stephen’s answer was that while claims that BizTalk would disappear have been around for a decade, it is still around. The way he sees it, BizTalk is likely to remain in common usage for a long time, but its functionality will slowly evolve to make heavier use of cloud-based solutions, a natural evolution of the integration between these two disparate systems.

BizTalk360 v10 - Not just a new face

In this session, Saravana Kumar (founder/CEO of Kovai.co) talked about improvements and new features in v10 of the BizTalk360 product.

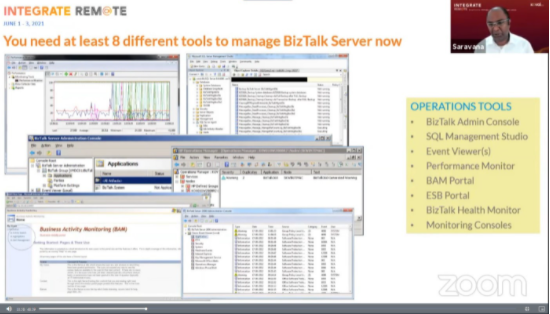

To manage BizTalk platforms using out-of-the-box functionality, you would need to use at least 8 different tools. The BizTalk360 product fills the gap between these different tools, allowing users to manage their BizTalk platforms from a unified interface. BizTalk360 is used by more than 650 customers and has a 10-year history on the market.

This year on the 10th anniversary of the product, a new version, v10, will be released. This new version offers a lot of improvements, enhancements and new features such as brand new dashboards, restructured navigation, query consistency, filter improvements and small improvements to the user interface.

On the monitoring side there are alarm management improvements, modernized monitoring dashboards, modernized data monitoring, dark mode and much more.

All in all, the new version has a lot of interesting features and improvements. Don’t hesitate to check out the BizTalk360 site for more information.

Azure Service Bus Update

Service Bus?

In this talk, Clemens Vasters explained that Service Bus is part of the Azure messaging services, as are Event Hubs and Event Grid. Each has its own use cases based on the patterns you need, but they share a lot as well.

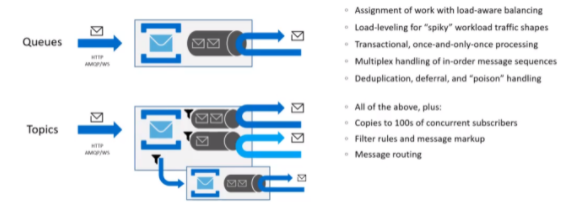

Within Service Bus, Queues and Topics can be used:

Service Bus is the Swiss army knife for message-driven workloads! Based on your needs, you can choose between a Standard and a Premium tier. The Premium tier provides dedicated resources and some extra options, and it can be used as a bridge between private networks.

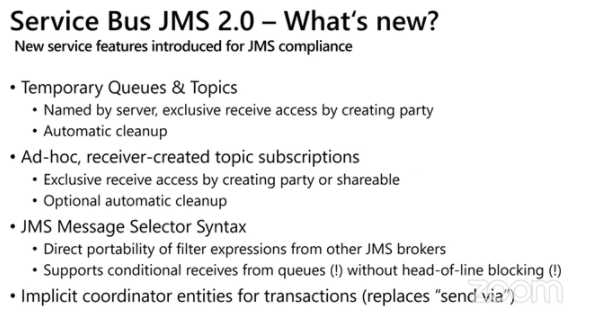

JMS!

Java Message Service (JMS) is one of the most successful messaging services. So what is JMS?

It is now available in Azure Service Bus Premium. JMS and Java are used in many industries that adopted message queues early on. Because Service Bus is more available, more reliable and more scalable, it is the PaaS they often consider moving to. Adding JMS 2.0 compliance made it possible for those customers to move the workload to the cloud without major changes to their applications and switch to Service Bus as the messaging backend. As an aside, Clemens shared that the reliability of Service Bus is often between 99.9995% and 99.9999%.

Supporting JMS is the single biggest feature update to Service Bus since 2011. Microsoft has worked with Red Hat on getting full compliance with the API. This meant that a lot of features had to be added to Service Bus! The JMS support means that it is now compatible at the wire level with Apache Qpid and Apache ActiveMQ. All (new) features are now also available via the SDKs of several programming languages.

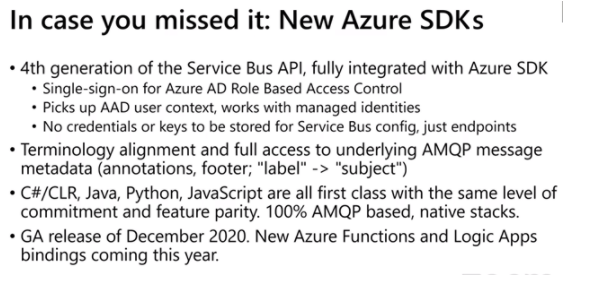

Azure SDK and Azure Function

Each SDK-supported language now has a dedicated architect. Here is some news:

Large Messages

Service Bus Premium is about to support messages larger than 1 MB, but this is mainly a “lift and shift” (opt-in) feature. Use it sparingly, because it has a performance penalty and connected applications might not be able to handle large messages. The maximum size will be 100 MB and can be changed for existing entities as well.

Clemens still advises staying away from messages larger than 1 MB, because large messages are a bad idea. GA is expected by the end of 2021.

Durable Terminus

Also coming soon is the “Durable Terminus”, which provides full AMQP session and link recovery when connections drop. On-premises connections are very stable, so the state of the connection and its locks could be coupled to the lifetime of that connection. Cloud connections are more brittle because of NAT, containers and workloads that are moved between cloud resources, so connections break all the time. The Durable Terminus is designed to survive disconnects: the client tries to reconnect and all in-flight information is still there. The lifetime of a lock is now in line with the Terminus, the SDK will be able to silently recover the connection, and the link state is kept on the server.

This means that there are a few more new behaviors. Message locks will no longer expire or need renewal and operations no longer fail on lack of connectivity (except for timeouts).

Federation and Replication

Also coming to Service Bus is a first-class, no-code experience to set up replication and routing in the Portal. It uses Azure Functions and Logic Apps V2.

TLS protocol

There are no plans to remove TLS 1.0/1.1 support at the moment, but a new policy is going to be introduced to set a minimum TLS version. The TLS negotiation will still complete, but if the highest version the client supports is lower than the minimum version set by the policy, the connection will be dropped.
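The policy check described above comes down to comparing two versions. The following is an illustration of that rule only, not of how Service Bus implements it; the function name and the version list are assumptions made for the sketch.

```python
TLS_VERSIONS = ["1.0", "1.1", "1.2", "1.3"]  # ordered from oldest to newest


def connection_allowed(client_max_version: str, policy_min_version: str) -> bool:
    """True when the client's highest TLS version meets the policy minimum."""
    return TLS_VERSIONS.index(client_max_version) >= TLS_VERSIONS.index(policy_min_version)


print(connection_allowed("1.2", "1.2"))  # True
print(connection_allowed("1.0", "1.2"))  # False: the connection would be dropped
```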

Lightning Talks

This session is a collection of short talks (hence the title “Lightning”) hosted by Derek Li, Program Manager at Microsoft. The talks in this new format concern several topics that are often left out when talking about Logic Apps.

Lightning Talk #1 – Workflow Designer by Sonali Pai

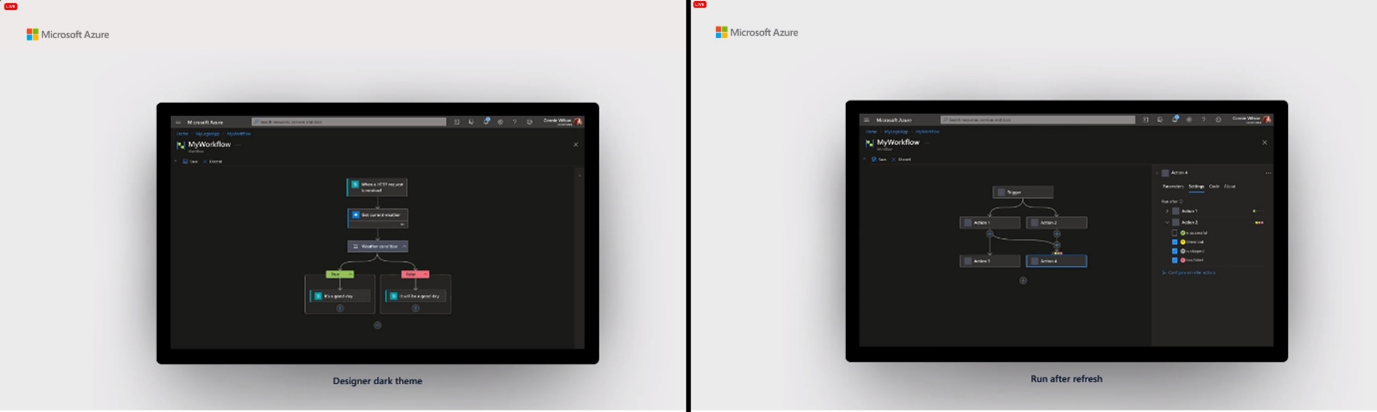

In this talk the new Workflow Designer for Logic Apps is showcased. The designer is the canvas where developers build the Logic Apps and as such it is a key component of the development environment. Compared to the old workflow designer, the most noticeable changes are:

- A more modern look and feel, the edges are cleaner and the graphics more compact.

- The visualization of the workflow is now separate from the configuration of each action. This gives a much cleaner view of the workflow and allows developers a much better experience when configuring the properties on each card.

- The cards in the designer now have a visual indicator that they are draggable (all except the trigger). They were draggable before, but now the process is much smoother. So now you can easily drag and drop cards from one place in the workflow to another, provided that it is logically allowed. The designer indicates whether a card may or may not be dropped in a specific place while dragging it.

Lightning Talk #2 – Logic Apps Design & Research by Xiaowei Jiang

This talk shines a light on the design development work that went into the new Logic Apps designer. Some recognition for the UX Designers, Researchers and Content Designers!

The first part of this lightning talk describes the journey towards the new designer. The team recognized that the old designer was not very adaptable to complex scenarios; for example, the overview was easily lost when implementing multiple parallel flows. During the design phase of the new designer, a lot of research was done into user behavior and into feedback received from the teams working with the designer.

- Unmoderated study includes for example asking the users to choose between two alternative options in the designer (i.e., choosing between the inline configuration or moving the configuration to a side pane) or asking the users where on the designer they would click to perform a certain action.

- Moderated study includes for example asking the user about their thoughts on a specific item in the designer.

- Ethnography study involves the recording of a user’s interaction with the designer at their own pace, exploring how a user normally would work with the designer.

The speaker then closed with a call to action, inviting us to provide our feedback on the designer. Finally, some upcoming design updates were showcased: a new dark theme for the Logic App designer and a refresh of the ‘Run After’ option.

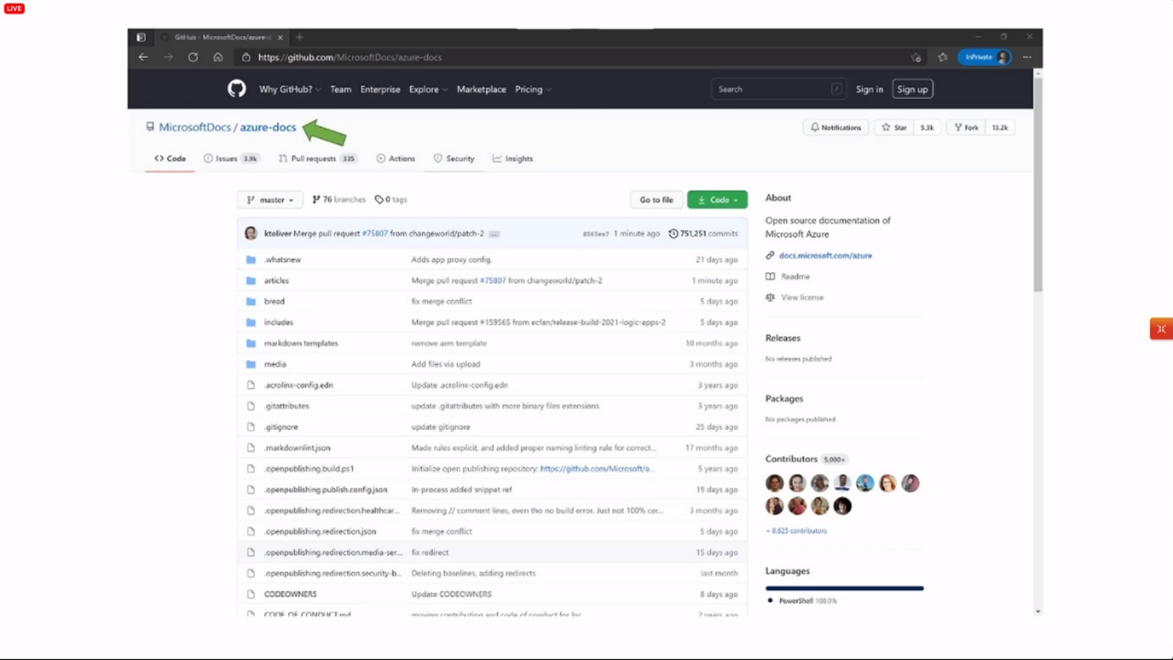

Lightning Talk #3 – Documentation by Laura Dolan

This lightning talk was at the same time a request for contributions to the Logic Apps documentation and a guide on how to contribute. The Logic Apps documentation is open source and can be found online at https://docs.microsoft.com/en-gb/azure/logic-apps/. There is also a contributor guide with instructions at https://docs.microsoft.com/en-gb/contribute/.

In short, you can follow these steps:

- Go to the GitHub repository “MicrosoftDocs/azure-docs” and log in with your GitHub account;

- Navigate to the documentation page you would like to edit. If the ‘Edit’ button is available in the top right part of the page, then the content of that page is open to public contributions, so click it;

- The button you just clicked will bring you to the GitHub page with the content: make your changes in the web editor and check them in the preview tab until you are satisfied with the modifications;

- Scroll to the bottom of the page, then enter a title and a description of your changes before clicking on ‘Propose changes’;

- Next, in the GitHub repository, create a Pull request to officially submit your proposed changes. This request will be reviewed by the team and, if approved, accepted. In that case your name will also be shown among the contributors to the docs. That’s it!

Lightning Talk #4 – Automation Tasks by Parth Shah

This lightning talk focuses on Automation Tasks, describing how they work with a short demo, and giving a preview of upcoming features.

Before the introduction of Tasks, users needed to have an extensive knowledge of Logic Apps to build their own automation workflows. But not everybody can or wants to be a developer, so Tasks have been introduced as a blade feature to help reduce the complexity and provide automation features that can be created and used directly from the Azure Portal.

A new Task can be created directly in the Portal, and it does not require any code. The creation process will provide a choice of a template and request only the necessary option fields. Of course, it is powered by Logic Apps, so it can always be opened with the Logic Apps designer later to further modify and customize it.

The upcoming features for Tasks:

- Additional templates when creating a new Task

- A new UI in the Portal

- In the long run, the possibility to create Tasks running in the standard runtime, since at the moment they are only available in the consumption runtime

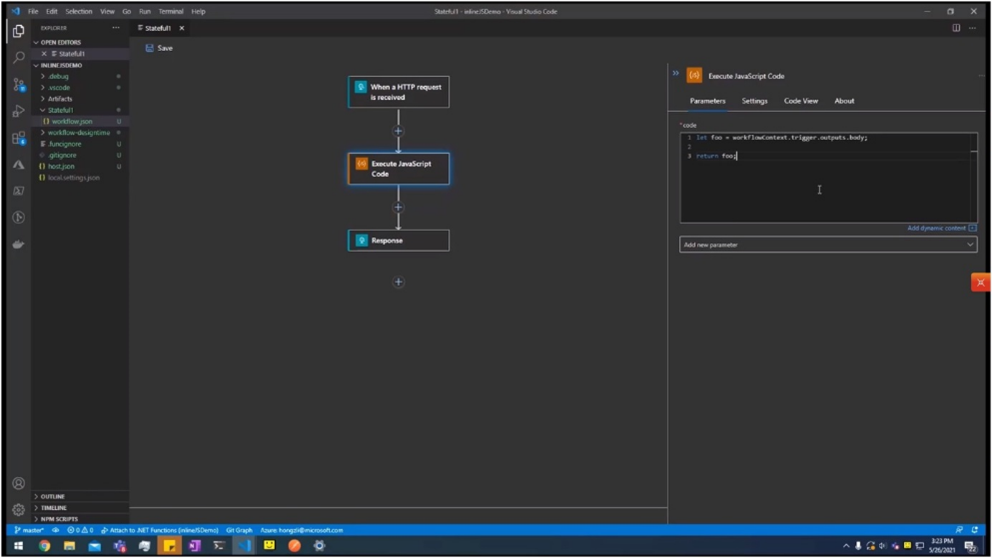

Lightning Talk #5 – Inline Code Deepdive by Henry Liu

This lightning talk is a deepdive into the new possibility of using inline JavaScript in Standard Logic Apps. We already could use JavaScript in Consumption Logic Apps, using an Integration Account to run the JavaScript code inside a Function. But now Logic Apps are themselves built on Functions! So a new action has been introduced to run inline JavaScript code directly in the workflow.

You can for example reference the trigger body of the Logic App as a variable and use it in your JavaScript code. A point to note is that for performance reasons, only referenced data are included in the available workflow context, so for example a static analysis might not detect a dynamic reference if not explicitly stated. Luckily, there is a way to explicitly add a reference to actions or triggers to allow for dynamic values only known at runtime.

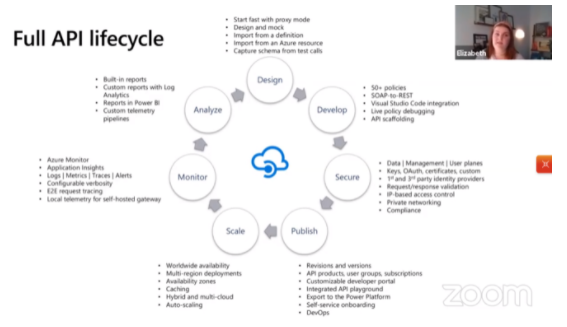

Azure API Management Update

Elizabeth Barnitt, Miao Jiang, Mike Budzynski and Vladimir Vinogradsky gave a presentation on new features in and around API Management.

Elizabeth kicked off the session by giving a broad overview of what API Management is, how it is structured, and which use cases it serves. The focus was on API Management encompassing the complete lifecycle of multiple APIs, from design to publishing to monitoring and analysis.

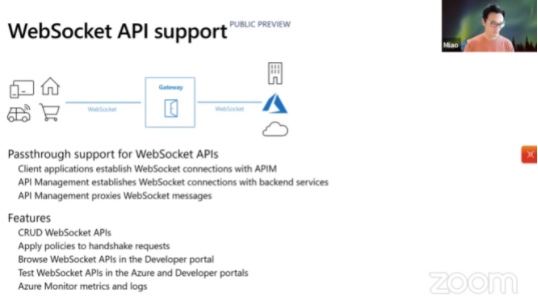

After this some new features and services were showcased, starting with Miao talking about the new WebSocket support in API Management. In such an API the API Management (APIM) instance functions as a proxy for the communication between the user and the backend. At time of writing this feature is in public preview, so it may take several more weeks until it is rolled out to all service instances. Also introduced was support for self-hosted gateways, allowing API gateways to be deployed to Azure Arc Kubernetes clusters.

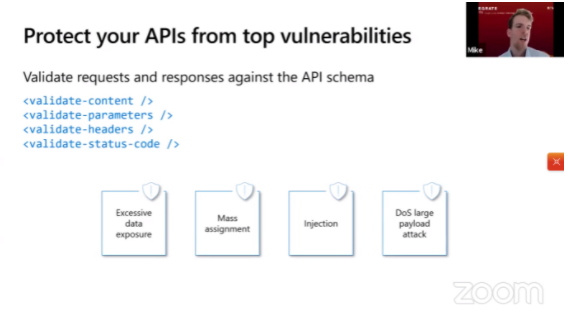

Mike picked up from here, introducing new API Management data validation policies that can aid in defense against a range of potential malicious attacks. Two of these were highlighted: first, the header validation policy, which prevents headers from the backend that may contain sensitive data from being accidentally exposed. Secondly, the content validation policy, which can defend against SQL injections, changing of fields that should not be overwritable and throttling through the sending of excessively large messages. Aside from these, validation of the parameters and the status code are also possible.

Also introduced was the API portal, a standalone platform for API documentation built on top of the existing developer portal technology. Highlights of this feature are the ability to manipulate and host it through GitHub free of charge and the ability to customize it with a codeless visual editor.

Finally, Elizabeth gave a short introduction on Availability Zone support for API Management. This allows APIM instances to have redundant copies deployed to several zones in the same region, allowing for a 99.99% uptime SLA. This feature is available only for premium-tier instances.

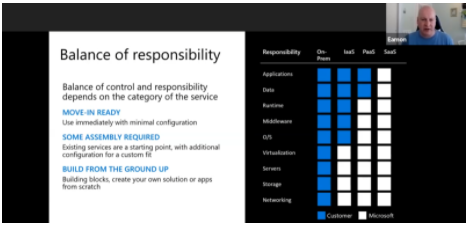

Functions: Event driven Compute Patterns and Roadmap

To close out the second day Eamon O’Reilly talked about Azure Functions. He started with a more general overview of native apps in Azure and the balance of control and responsibility of services.

Eamon explained what serverless is and what its benefits are.

He summarized the definition of serverless in 3 big points:

- Full abstraction of servers

- Instant, event-driven scalability

- Pay-per-use

This allows for numerous benefits such as:

- Focus: Solve business problems, not technology problems

- Efficiency: Shorter time to market, variable costs, better service stability, better development and testing management and less waste

- Flexibility: A simplified starting experience, easier experimentation, scaling at your own pace and a natural fit for microservices

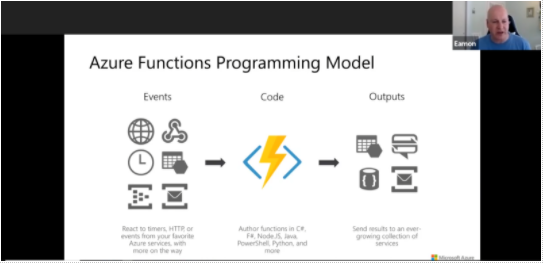

Eamon gave us a quick overview of the Azure Functions programming model. It starts with an event such as a timer, HTTP call or events from other Azure services. That will trigger the function and run the code. Authoring of the functions is supported in various programming languages. The function will output a result and send it to the service of your choice.

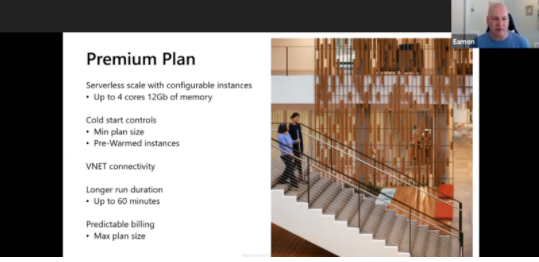

Eamon discussed the Azure Functions Premium plan, which offers a couple of benefits over the Consumption plan such as serverless scale with configurable instances, cold start controls and longer run durations. This can be useful in certain scenarios where the Consumption plan does not suffice. For example:

- Compute-intensive or longer-running jobs such as image/file processing or Machine Learning inference

- Latency-sensitive applications such as APIs and line-of-business apps

- Applications requiring network isolation such as finance and health care data processing and internal facing tools

Next up, Eamon talked about Durable Functions and showed a quick overview of stateful patterns in Durable Functions such as:

- Function chaining

- Async HTTP APIs

- Fan-out/Fan-in scenarios

- Long-running monitor

- Human interaction

- Event aggregation

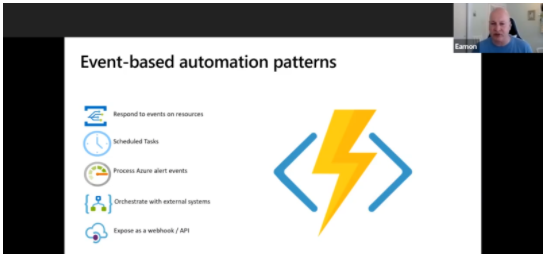
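Two of these patterns, function chaining and fan-out/fan-in, can be illustrated with plain `asyncio`. This is only a sketch of the control flow: it does not use the Durable Functions API and has none of its durability (no checkpointing or recovery), and the activity name is hypothetical.

```python
import asyncio


async def recognize_plate(image_name: str) -> str:
    """Hypothetical activity: pretend to process one image."""
    await asyncio.sleep(0)  # stands in for real I/O
    return f"plate-for-{image_name}"


async def chain(image_name: str) -> str:
    # Function chaining: each step consumes the previous step's output.
    plate = await recognize_plate(image_name)
    return plate.upper()


async def fan_out_fan_in(image_names: list) -> list:
    # Fan out: start one activity per input. Fan in: wait for all results.
    return await asyncio.gather(*(recognize_plate(name) for name in image_names))


print(asyncio.run(chain("a.jpg")))                      # PLATE-FOR-A.JPG
print(asyncio.run(fan_out_fan_in(["a.jpg", "b.jpg"])))  # ['plate-for-a.jpg', 'plate-for-b.jpg']
```

In Durable Functions the orchestrator plays the role of `chain` and `fan_out_fan_in`, with the added guarantee that its progress survives restarts.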

Eamon briefly touched on the topic of automation. Azure Functions can also be used for automation across the Azure lifecycle.

He then showed us a demo of making a durable function using PowerShell code. PowerShell support was recently added for durable functions alongside Python support.

Eamon’s next topic was about functions that run anywhere. With the coming of Azure Arc, users will be able to run their apps anywhere. Azure Functions will be supported with Azure Arc right away. He then showed us a demo of using Azure Functions with Azure Arc.

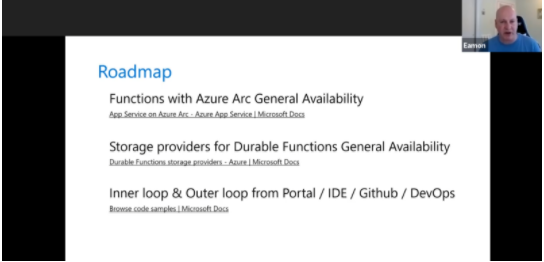

At the end we got a quick peek at the roadmap for Azure Functions. As mentioned earlier, functions will work with Azure Arc when it releases. Other notable items on the roadmap are storage providers for Durable functions and Inner loop & Outer loop from Portal/IDE/GitHub/DevOps.
