Starting with Arcus Background Jobs v0.2, we have introduced a new library to monitor Databricks clusters. The challenge with Databricks clusters in Azure is that they do not provide out-of-the-box job metrics in Azure Monitor, which makes them hard to operate. You have to look up the status of a Databricks job yourself to find out how it ran, and setting up automated alerts is difficult.

This post will show you how our new version of the Arcus Background Jobs library will help you with this problem so the next time you run a Databricks job, you’ll be notified right away!

Cluster jobs

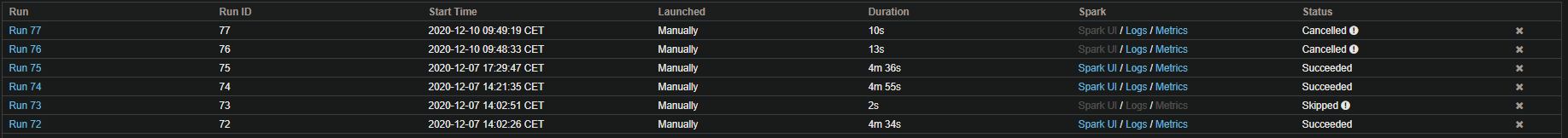

Here’s a look at some Databricks jobs that have run on a cluster. Note that the end result of each run is shown in the last column.

The goal of this blog post is to notify the consumer of the job outcome without anyone having to look it up in the Databricks cluster itself.

Azure Function Databricks Job Metrics

As of v0.2, the Arcus Background Jobs library includes a new package called Arcus.BackgroundJobs.Databricks. This package contains all the capabilities needed to query the Databricks cluster and retrieve the results of the completed jobs.

We’ll use an Azure Function that will periodically query our Databricks cluster by using a timer trigger.

The job outcome itself will be written as an Azure Application Insights Metric so we can use it later as a source of an alert.

Here’s an example of how this Azure Function can be set up:

The DatabricksInfoProvider is an Arcus type that allows you to either measure the job outcomes directly, or query them so you can log them yourself. For more information on this type, see the official docs.

💡 We specify a time window in which the jobs must have completed.

In this setup, the window is bounded by the start and end of each run of the Azure Function timer trigger.
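To make the time-window idea concrete, here is a minimal, language-neutral sketch in Python. It is not the Arcus API: the functions `completed_runs_in_window` and `to_epoch_ms` are hypothetical, and the run dictionaries use field names (`run_id`, `job_id`, `result_state`, `end_time` in epoch milliseconds) that are assumptions loosely modeled on what the Databricks Jobs REST API reports. The window bounds are simply passed in by the caller.

```python
from datetime import datetime, timezone


def completed_runs_in_window(runs, window_start, window_end):
    """Return the runs that finished inside [window_start, window_end).

    `runs` is a list of dicts whose field names are assumptions for this sketch.
    Runs without an `end_time` are treated as still in progress and skipped.
    """
    selected = []
    for run in runs:
        end_ms = run.get("end_time")
        if not end_ms:
            continue  # no end time yet, so the run has not completed
        finished_at = datetime.fromtimestamp(end_ms / 1000, tz=timezone.utc)
        if window_start <= finished_at < window_end:
            selected.append(run)
    return selected


def to_epoch_ms(moment):
    """Convert a timezone-aware datetime to epoch milliseconds."""
    return int(moment.timestamp() * 1000)


# Hypothetical sample data: one run inside the window, one before it, one still running.
window_start = datetime(2020, 10, 12, 8, 45, tzinfo=timezone.utc)
window_end = datetime(2020, 10, 12, 8, 50, tzinfo=timezone.utc)

runs = [
    {"run_id": 77, "job_id": 9, "result_state": "SUCCESS",
     "end_time": to_epoch_ms(datetime(2020, 10, 12, 8, 47, tzinfo=timezone.utc))},
    {"run_id": 76, "job_id": 9, "result_state": "FAILED",
     "end_time": to_epoch_ms(datetime(2020, 10, 12, 8, 40, tzinfo=timezone.utc))},
    {"run_id": 78, "job_id": 9, "result_state": None, "end_time": 0},
]

finished = completed_runs_in_window(runs, window_start, window_end)
print([run["run_id"] for run in finished])
```

The sketch uses a half-open window (start inclusive, end exclusive) so that a run finishing exactly on a boundary is counted by only one of two consecutive timer runs. Whether the library treats the boundaries the same way is not something this post states.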

Application Insights metrics and alerts

The setup above results in log metrics like the following:

Metric Databricks Job Completed: 1 at 12/10/2020 08:50:01 +00:00 (Context: [Run Id, 77], [Job Id, 9], [Job Name, My Databricks Job], [Outcome, success])
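The sample line follows a simple pattern: a metric name, a value, a timestamp, and a list of key/value context entries. As an illustration only, the Python sketch below rebuilds that layout from its parts. The function `format_metric_line` is hypothetical and mirrors the shape of the sample; it is not the formatter that Arcus itself uses.

```python
def format_metric_line(name, value, timestamp, context):
    """Render a metric as one log line in the shape of the sample above.

    `context` maps dimension names to values; insertion order is preserved.
    """
    dimensions = ", ".join(f"[{key}, {val}]" for key, val in context.items())
    return f"Metric {name}: {value} at {timestamp} (Context: {dimensions})"


line = format_metric_line(
    "Databricks Job Completed",
    1,
    "12/10/2020 08:50:01 +00:00",
    {"Run Id": 77, "Job Id": 9, "Job Name": "My Databricks Job", "Outcome": "success"},
)
print(line)
```

Each context entry (run ID, job ID, job name, outcome) is a separate key/value pair, which lets a downstream consumer filter or group on any one of them.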

If you want these metrics to end up in Application Insights, use the Arcus Application Insights Serilog sink so that the written log messages are picked up as metrics and not as traces.

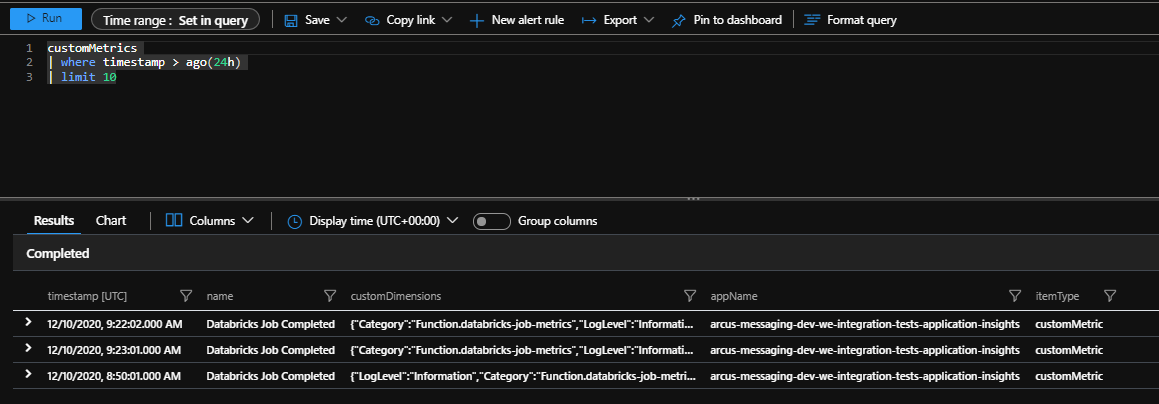

The metrics will show up as custom metrics:

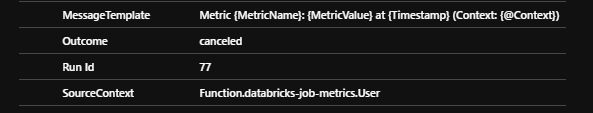

The Databricks job outcome is available as a custom dimension on the metric:

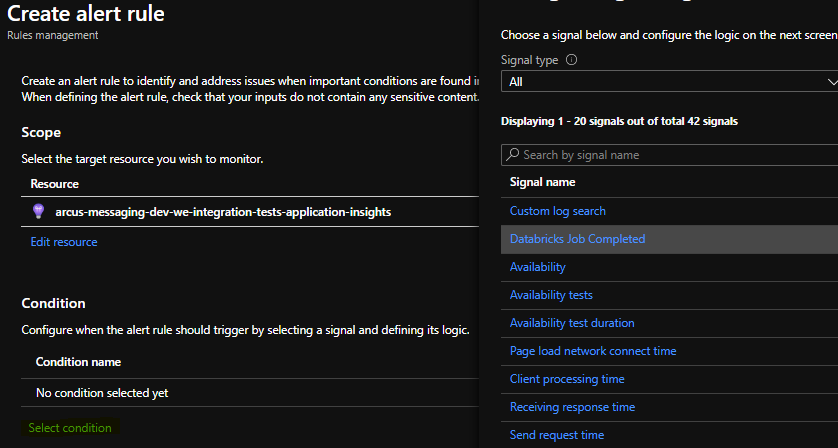

If you want, you can create an alert on this metric by going to **Application Insights > Monitoring > Alerts > New alert rule**.

There, in the alert condition, the Databricks metric will be available to you.
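Azure Monitor evaluates the alert condition for you, so no code is needed for this step. Purely to illustrate the kind of logic such a condition expresses, the Python sketch below splits metric records by their outcome dimension and flags when too many runs did not succeed. The functions `count_by_outcome` and `should_alert`, the record layout, and the outcome value `failed` are all assumptions made for this example; only `success` appears in the sample log line.

```python
from collections import Counter


def count_by_outcome(metrics):
    """Count metric records per value of the (assumed) 'Outcome' dimension."""
    return Counter(metric["dimensions"]["Outcome"].lower() for metric in metrics)


def should_alert(metrics, max_failures=0):
    """Return True when more than `max_failures` runs did not succeed."""
    counts = count_by_outcome(metrics)
    failures = sum(count for outcome, count in counts.items() if outcome != "success")
    return failures > max_failures


# Hypothetical records, shaped after the custom metric and its dimensions.
metrics = [
    {"name": "Databricks Job Completed", "value": 1,
     "dimensions": {"Job Name": "My Databricks Job", "Outcome": "success"}},
    {"name": "Databricks Job Completed", "value": 1,
     "dimensions": {"Job Name": "Nightly Import", "Outcome": "failed"}},
]

print(count_by_outcome(metrics))
print(should_alert(metrics))
```

In a real alert rule, the equivalent would be a condition on the Databricks metric that is split or filtered on the outcome dimension.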

Conclusion

In this post, we have looked at how we can monitor Databricks jobs with the new Arcus Background Jobs library. We’re not inventing anything new here, only integrating existing services: querying Databricks job outcomes in an Azure Function and flowing that information to Azure Application Insights.

You’ll be happy to know that we have created a project template for this, with the necessary Azure Function and packages already included.

This template will be available starting from v0.5 in the Arcus Templates library.

Thanks for reading!
