The goal

Tests should be accessible to anyone on the project. This is arguably even more important than making the implementation code accessible, as tests are (normally) the first place people look to understand how a piece of functionality works. With that in mind, it is only logical to keep every type of test in source control and to write it in the team's common programming language.

Load tests can be defined in the JMeter format or created in Azure Load Testing itself. However, both lack the flexibility that familiar code brings: working in the team's own language, using source control, and being able to set up and tear down test fixtures. I am also in favor of being able to run any test locally. If a good test infrastructure is in place, this comes naturally, because tests are already run locally while they are being developed. Waiting on the results of a remote test run adds unnecessary development time and decreases motivation. Fast feedback cycles are the best way to move forward and to bring real added value to the project.

NBomber inputs & outputs

NBomber turned out to be a large part of the solution. It is a library written entirely in .NET, which makes it accessible to developers of any experience level. Load tests written with it are regular code, so they are stored in source control like any other test.

NBomber lets you create test scenarios that describe the application interaction that needs to be load tested. This can be as simple as calling a single API endpoint or as complex as you need, split into multiple steps.
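To make the scenario and step idea concrete, here is a minimal, language-neutral sketch in Python. It is not NBomber's API, which is used from C# or F#. The `Step` and `Scenario` types and `run_once` are hypothetical names, and stopping an iteration at the first failing step is an assumption of this sketch.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Step:
    """One interaction with the application, e.g. a single API call (hypothetical type)."""
    name: str
    action: Callable[[], bool]  # returns True when the interaction succeeded


@dataclass
class Scenario:
    """An ordered list of steps describing one user interaction (hypothetical type)."""
    name: str
    steps: List[Step]

    def run_once(self) -> List[Tuple[str, bool, float]]:
        """Run the steps in order and return (step name, succeeded, elapsed seconds) per step.

        In this sketch, a failing step ends the iteration, since later steps
        usually depend on the earlier ones.
        """
        results: List[Tuple[str, bool, float]] = []
        for step in self.steps:
            start = time.perf_counter()
            ok = step.action()
            results.append((step.name, ok, time.perf_counter() - start))
            if not ok:
                break
        return results


# Usage: a three-step scenario whose second step fails.
scenario = Scenario("transform-message", [
    Step("upload", lambda: True),
    Step("transform", lambda: False),
    Step("download", lambda: True),
])
print([name for name, _, _ in scenario.run_once()])  # ['upload', 'transform']
```

A load test then comes down to calling `run_once` many times and collecting the per-step timings.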

Each load test run takes one or more load simulations that specify how many times, and in what manner, the test scenario should run. A constant load on your endpoint? A random load within a range? It can all be defined. This flexibility lets you reproduce the kind of load your application experiences in production.

An example from their page:

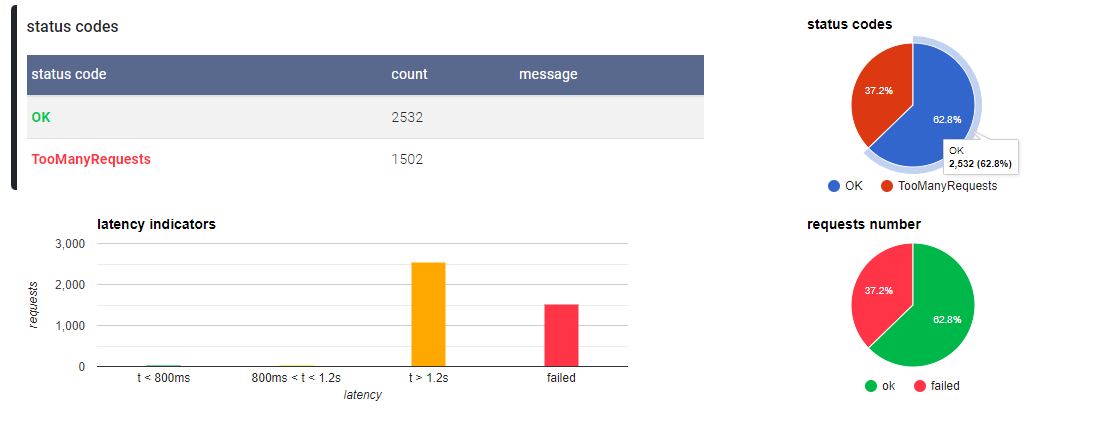
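One way to picture a load simulation is as a per-second request schedule. The Python below is a hypothetical illustration, not NBomber's simulation API. `constant_load`, `random_load` and `combine` are made-up names, and the assumption that several simulations run back to back belongs to this sketch.

```python
import random
from typing import List


def constant_load(rate: int, seconds: int) -> List[int]:
    """Send the same number of requests in every one-second interval."""
    return [rate] * seconds


def random_load(min_rate: int, max_rate: int, seconds: int, seed: int = 0) -> List[int]:
    """Send a random number of requests per second, drawn uniformly from [min_rate, max_rate]."""
    rng = random.Random(seed)  # seeded so that a run can be reproduced
    return [rng.randint(min_rate, max_rate) for _ in range(seconds)]


def combine(*simulations: List[int]) -> List[int]:
    """Chain simulations back to back into one per-second schedule (assumed sequential)."""
    schedule: List[int] = []
    for simulation in simulations:
        schedule.extend(simulation)
    return schedule


# Usage: 3 seconds of constant load followed by 3 seconds of random load.
schedule = combine(constant_load(10, 3), random_load(5, 20, 3))
print(len(schedule), schedule[:3])  # 6 [10, 10, 10]
```

A runner would then fire `schedule[i]` scenario iterations during second `i`. Shaping that list after production traffic is the point made above.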

What makes NBomber even better is not only its ease of use but also its superb reporting. Unlike other types of testing, load testing does not treat the result of a single call as something to assert on. A simple StatusCode == OK assertion is rarely what you need; instead, we have to look at the broader picture and think in terms of aggregates such as averages. That is why NBomber produces a detailed HTML report with statistics (which are also accessible via the return value of Run), so that each test run can be analyzed afterward.
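The shift from per-call assertions to assertions on aggregates can be sketched in a few lines of Python. The sketch is hypothetical and does not read NBomber's statistics objects. `Stats`, `summarize` and `assert_acceptable` are illustrative names, the thresholds are placeholders, and the 95th percentile uses the nearest-rank method.

```python
import math
from dataclasses import dataclass
from statistics import mean
from typing import List, Tuple


@dataclass
class Stats:
    requests: int
    fail_rate: float  # fraction of failed requests, 0.0 to 1.0
    mean_ms: float
    p95_ms: float


def percentile(sorted_values: List[float], p: float) -> float:
    """Nearest-rank percentile of a sorted, non-empty list."""
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def summarize(results: List[Tuple[bool, float]]) -> Stats:
    """Aggregate (succeeded, latency in ms) pairs for every request in a run (must be non-empty)."""
    latencies = sorted(ms for _, ms in results)
    failures = sum(1 for ok, _ in results if not ok)
    return Stats(
        requests=len(results),
        fail_rate=failures / len(results),
        mean_ms=mean(latencies),
        p95_ms=percentile(latencies, 95),
    )


def assert_acceptable(stats: Stats, max_fail_rate: float, max_p95_ms: float) -> None:
    """Assert on the run as a whole rather than on any single response."""
    assert stats.fail_rate <= max_fail_rate, f"fail rate {stats.fail_rate:.1%} is too high"
    assert stats.p95_ms <= max_p95_ms, f"p95 latency {stats.p95_ms} ms is too high"


# Usage: 100 placeholder requests with latencies 1..100 ms, two of which failed.
results = [(i not in (10, 20), float(i)) for i in range(1, 101)]
stats = summarize(results)
print(stats.fail_rate, stats.mean_ms, stats.p95_ms)  # 0.02 50.5 95.0
assert_acceptable(stats, max_fail_rate=0.05, max_p95_ms=200)  # two failed calls do not fail the run
```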

💡 The library is actually written in F# and is fully usable from both C# and F#. This is another example of how these two worlds come together perfectly.

For more information about this library, see their documentation.

xUnit integration

Now that we have a load-testing tool, we can look at how to integrate it into our test suite. As mentioned in the goal, we should be able to run load tests as easily as any other test, so the integration should build on standard .NET testing. xUnit is one of the more commonly used testing frameworks within Codit, which is why it is used here to integrate NBomber.

For the Invictus project, we have defined several load test types: simple XSLT, complex XSLT, context usage, and others. All these types should be tested against the same endpoint and placed in the same test report. It helps to think up front about how you want to review the test reports. Grouping them by functionality or endpoint is a good strategy, as it brings all the different inputs and test loads together.
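Grouping a report by endpoint can be sketched as collecting each test type's summary under its endpoint. This is a hypothetical Python illustration, not NBomber's reporting. `RunResult`, `group_by_endpoint` and `render` are made-up names, and the endpoint and numbers are placeholders.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class RunResult(NamedTuple):
    """Summary of one load test type against one endpoint (hypothetical shape)."""
    endpoint: str
    test_type: str
    mean_ms: float
    fail_rate: float


def group_by_endpoint(results: List[RunResult]) -> Dict[str, List[RunResult]]:
    """Collect all test types that hit the same endpoint under one report section."""
    report: Dict[str, List[RunResult]] = defaultdict(list)
    for result in results:
        report[result.endpoint].append(result)
    return dict(report)


def render(report: Dict[str, List[RunResult]]) -> str:
    """Render one section per endpoint with one row per load test type."""
    lines: List[str] = []
    for endpoint in sorted(report):
        lines.append(endpoint)
        for r in sorted(report[endpoint], key=lambda row: row.test_type):
            lines.append(f"  {r.test_type:<14} mean {r.mean_ms:6.1f} ms  failed {r.fail_rate:.1%}")
    return "\n".join(lines)


# Usage with placeholder values for a hypothetical /api/transform endpoint.
results = [
    RunResult("/api/transform", "simple-xslt", 12.0, 0.0),
    RunResult("/api/transform", "complex-xslt", 85.5, 0.01),
    RunResult("/api/transform", "context-usage", 30.2, 0.0),
]
print(render(group_by_endpoint(results)))
```

Because all types share one section, you can compare how the different inputs behave under the same load.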

Here is a pseudo-code sample of what we are trying to accomplish:

👀 Notice that all the LoadTestType enumeration values are passed as a single [MemberData] input, so that they can all be grouped together in the same report. Unfortunately, xUnit is not flexible enough to create tests during test execution: we cannot generate tests at runtime the way F# Expecto does. If we could, we would create a separate test for each load test type, which would let us fail a single load test type based on the results in the NodeStats variable. Instead, the entire test has to fail.
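The all-or-nothing consequence can be illustrated with a small Python sketch, with a plain `assert` standing in for an xUnit assertion. `LoadTestType` mirrors the enumeration named above, but its members here are illustrative. `load_test_all_types` and its `run_type` callback are hypothetical, and neither xUnit nor NBomber is used.

```python
from enum import Enum
from typing import Callable, Dict, List


class LoadTestType(Enum):
    """Python stand-in for the LoadTestType enumeration; the members are illustrative."""
    SIMPLE_XSLT = "simple-xslt"
    COMPLEX_XSLT = "complex-xslt"
    CONTEXT_USAGE = "context-usage"


def load_test_all_types(run_type: Callable[[LoadTestType], float],
                        max_fail_rate: float) -> Dict[LoadTestType, float]:
    """Run every type in one session (one report) and fail the test as a whole.

    run_type is a hypothetical callback that runs one type and returns its failure rate.
    """
    fail_rates = {test_type: run_type(test_type) for test_type in LoadTestType}
    failing: List[str] = [t.name for t, rate in fail_rates.items() if rate > max_fail_rate]
    # A single assertion covers all types: one failing type fails the entire test,
    # although the message at least names the types that crossed the threshold.
    assert not failing, f"load test types over the threshold: {', '.join(failing)}"
    return fail_rates


# Usage: placeholder rates where only the complex type misbehaves.
rates = {LoadTestType.COMPLEX_XSLT: 0.08}
try:
    load_test_all_types(lambda t: rates.get(t, 0.0), max_fail_rate=0.05)
except AssertionError as error:
    print(error)  # load test types over the threshold: COMPLEX_XSLT
```

Naming the offending types in the failure message is one way to soften the limitation when separate tests per type are not an option.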

Bonus: accompanying smoke tests

While developing load tests, it can be quite tedious to work out whether a test failed because the setup was wrong or because the application endpoint could not handle this kind of load. That is why I am a fan of adding accompanying smoke tests: integration tests that call the application endpoint only once. This gives a simple way to verify that the application endpoint still answers correctly, both locally and remotely.

If the smoke tests also fail, then we know there is something wrong with the interaction, not with the load. This improves defect localization enormously, especially if your application does not respond with a descriptive error. Without a smoke test, it would be hard to figure out whether the load, a faulty request, or even a backend test fixture caused the failure.
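The reasoning above amounts to a small decision table. Here it is as a hypothetical Python sketch; `diagnose` is an illustrative name and not part of any framework.

```python
def diagnose(smoke_passed: bool, load_passed: bool) -> str:
    """Localize a failure by combining the smoke test and load test outcomes."""
    if not smoke_passed:
        # Even a single request fails: suspect the request, the setup, or a backend fixture.
        return "interaction problem"
    if not load_passed:
        # A single request works but the load does not: suspect the endpoint under load.
        return "load problem"
    return "ok"


print(diagnose(smoke_passed=True, load_passed=False))  # load problem
```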

In this case, we can reuse our HTTP send code and only send a single request.

👀 Notice that we can run the smoke test for a single load test type locally by changing the testType variable. Here, we assert on a single HTTP status code; ideally, a failing assertion tells us what kind of problem there is, independent of the load.
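Below is a minimal Python sketch of reusing the same send code for a single smoke request. It assumes the load test sends its requests through a shared helper, whereas the tests described here are .NET tests run with xUnit. `BASE_URL`, `build_request`, `send` and `smoke_test` are hypothetical names, and the JSON body is a placeholder.

```python
import urllib.error
import urllib.request
from typing import Callable

# Hypothetical local endpoint; point it at a remote environment to smoke test there.
BASE_URL = "http://localhost:5000/api/transform"


def build_request(test_type: str) -> urllib.request.Request:
    """Build the request for one load test type (the JSON body is a placeholder)."""
    body = f'{{"testType": "{test_type}"}}'.encode("utf-8")
    return urllib.request.Request(
        BASE_URL, data=body, method="POST", headers={"Content-Type": "application/json"}
    )


def send(request: urllib.request.Request) -> int:
    """Send one request and return its HTTP status code; shared by load and smoke tests."""
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urlopen raises on 4xx/5xx responses; return the code so callers can assert on it.
        return error.code


def smoke_test(test_type: str, send_fn: Callable[[urllib.request.Request], int] = send) -> None:
    """Send exactly one request for the given load test type and check the status code."""
    status = send_fn(build_request(test_type))
    assert status == 200, f"{test_type}: expected HTTP 200, got {status}"


# Usage against a running instance: smoke_test("simple-xslt")
```

Injecting `send_fn` keeps the smoke test checkable without a live endpoint. Sharing `build_request` and `send` with the load test ensures both exercise exactly the same interaction.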

Since these smoke tests are lightweight integration tests, we can run them alongside the load tests, where they provide extra information on why a load test fails.

Conclusion

Testing in general is often overlooked. It is not seen as part of the development process because it does not seem to bring any visible changes to the end result. What is forgotten is that tests are the only safeguard you have that the system works. No manual test can compete with a complex, scheduled, automated test suite. Sometimes you see tests in a project that are not automated. That is not enough: any test that is not automated is doomed to fall into disuse. We need test automation to remove as much doubt as possible about how the product works.

Load testing is a part of this solution. A load test suite usually runs longer than the 10-minute build rule of thumb for the CI (continuous integration) process, so these tests are often scheduled instead. Load test runs should also not run in parallel, as that would distort the relationship between the configured load and the measured results in the test report (1000 requests/second becomes 2000 requests/second). A good report is also a frequently used report, and it is important that any failures are quickly noticeable. Automatically creating bug tasks, sending team notifications, or maintaining a general build status page can all help when a test fails.

Tests are often the neglected child of the family, but they are family nonetheless.

Thanks for reading!

Stijn
