1. The Phoenix and the Unicorn - Peter Hinssen

Our Techorama journey commenced with a captivating keynote delivered by Peter Hinssen. Through examples of different economic and industrial booms, Peter underlined that adaptability and reinvention were vital traits for companies to thrive amidst the ever-changing landscape. Such companies, aptly referred to as phoenixes, symbolized the ability to change along with the times, while other companies that stagnated were assigned the moniker of dinosaur. Then there were the unicorns, the extraordinary and highly successful companies that had managed to disrupt industries and achieve remarkable growth, and that came only once in a lifetime. By likening companies to mythical creatures, he provided us with a compelling retrospective of technology’s evolution over the years, while simultaneously offering a glimpse into the future.

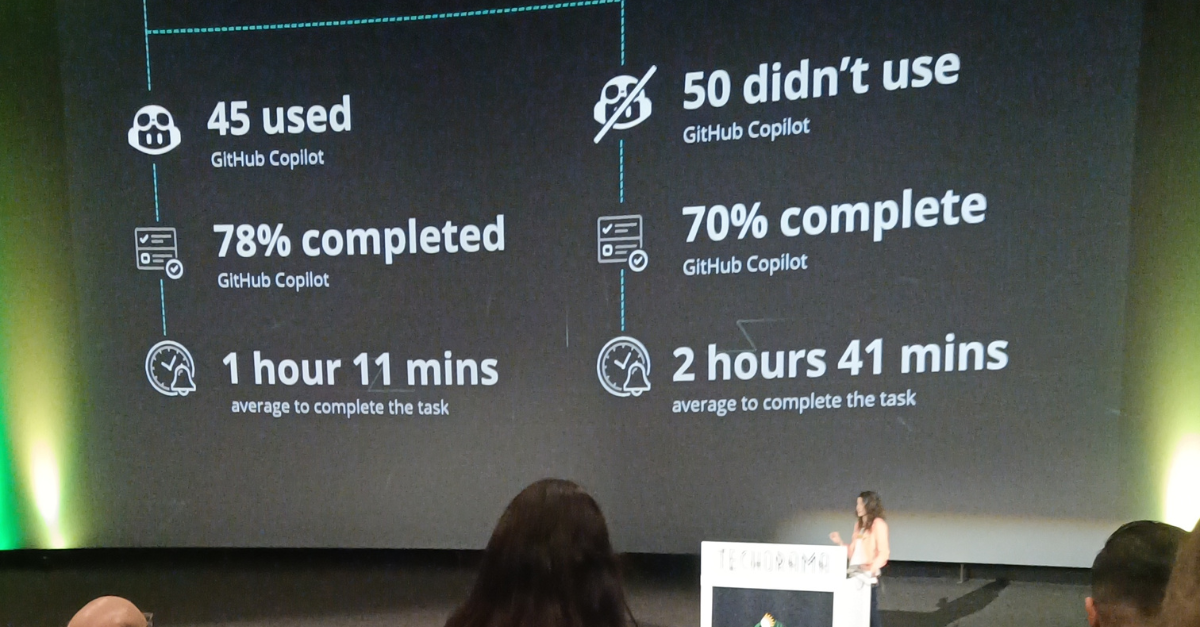

2. GitHub Copilot, Using AI to Help you Learn, Code, and Build – Michelle Mannering

In this session, Michelle Mannering took us through the varied capabilities of GitHub Copilot. Attendees saw how Copilot integrates into Visual Studio Code and offers AI-generated code suggestions that can speed up everyday work. From code-to-explanation functionality to upcoming enhancements and data privacy, Michelle explored Copilot’s potential.

Takeaways:

- Seamlessly integrated into Visual Studio Code, GitHub Copilot provides AI-powered assistance within the coding environment, generating code suggestions based on comments and context to boost productivity and reduce repetitive tasks.

- GitHub Copilot Labs explores additional features, including code-to-explanation functionality for human-readable code explanations and code translation for easier language conversion.

- Developers should review suggestions as Copilot may occasionally generate suboptimal or incorrect code, allowing them to exercise judgment before implementation.

- GitHub Copilot’s business plan prioritizes data privacy: user code content is not retained after a suggestion has been generated.

- Access GitHub Copilot at copilot.github.com, offering a free trial and pricing details on the official website.

- GitHub continues to expand Copilot’s capabilities, with upcoming features like GitHub Copilot X providing prompts, documentation generation, and bug detection and fixing assistance.

- Copilot’s chat functionality aims to support developers’ growth from beginner to expert level by offering real-time guidance and collaboration.

- While AI augments developer productivity, GitHub Copilot recognizes that human developers remain essential for higher-level problem-solving tasks.

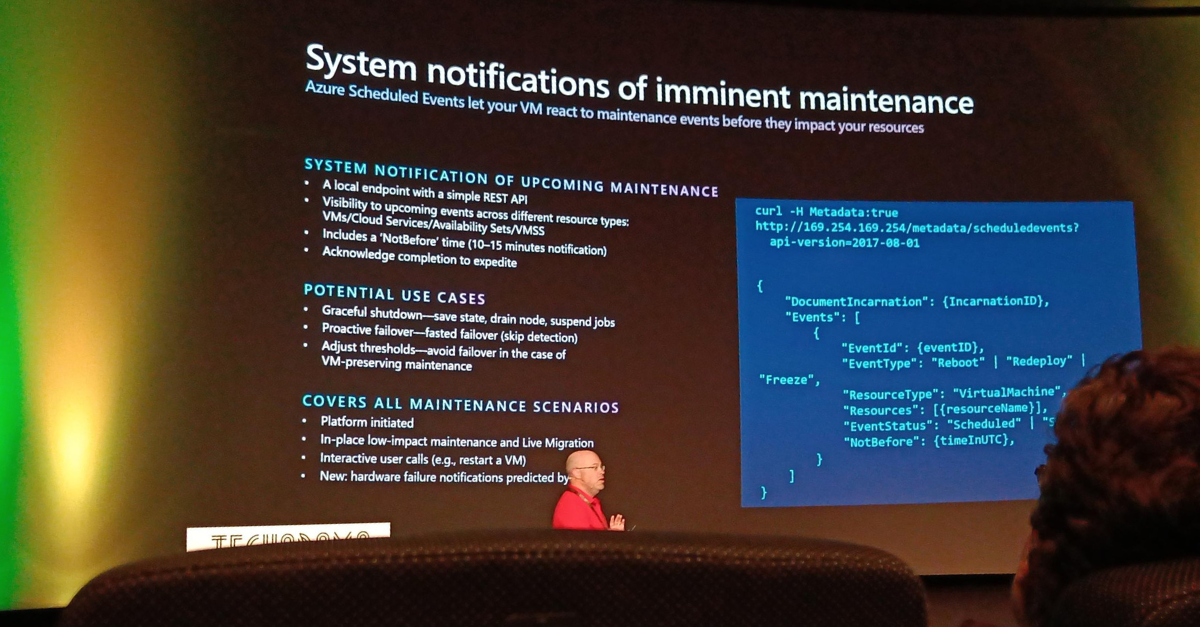

3. Cloud Disasters: Plan Now and Avoid Catastrophe Later – Derek Martin

In this session, Derek Martin shed light on the nuances of Azure’s architecture and services, offering invaluable insights that can help us understand and anticipate the challenges we may face as we prepare for the worst. We took a deep dive into how Azure is built, the tools it provides for us to leverage in an emergency, and most importantly, what steps we can take to safeguard our most valuable digital assets.

- Azure operates in 65 regions worldwide, providing extensive coverage for cloud services. The new 3+0 pairing model enhances availability and resilience with three availability zones and zero paired regions. Customers have control over failover decisions.

- Microsoft’s disaster recovery assistance is limited to catastrophic events. Specific regions like Belgium Central, Poland Central, and Italy North have highly resilient architecture.

- Best practices require aligning resources with availability zones through manual configuration. Azure is developing an automated checkbox feature for simplified zone alignment.

- Azure regions cannot be active-active, and cost and tolerance levels should be carefully considered for resiliency planning.

- Azure Chaos Studio is a tool for disaster recovery drills and testing system resilience. Availability zone failover is seamless for uninterrupted service.

- The resource group acts as the control plane, and resources within a group should be aligned with the same region. During a control plane outage, resources continue to run with limited visibility.

- Deploying resources in a single region carries significant risks, as shown by past incidents.

- Real-time monitoring via API and subscribing to Azure Service Health alerts via email are recommended for resource status and potential issue awareness.

- Fully automated disaster recovery processes are essential to avoid portal dependency during a crisis.

- Multicloud environments introduce challenges due to different cloud behaviors. ExpressRoute for interlinking can be costly and complex for achieving resilience.

- Multicloud does not eliminate all risks, and it should not be pursued solely for disaster recovery purposes.

- Azure Site Recovery focuses on application recovery, not comprehensive disaster recovery across different clouds. Azure offers compliance capabilities for specific industry requirements.

- Azure Chaos Studio helps identify weak points in applications through chaos testing, and system resilience depends on the strength of the most vulnerable component.
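
The points about single-region risk and the weakest component can be made concrete with some availability arithmetic. The following is a minimal sketch, not an Azure calculation: the per-component figures are hypothetical (they are not SLA numbers), and the redundancy formula assumes regions fail independently, which real outages do not always respect.

```python
from math import prod


def series_availability(components):
    """Availability of a chain in which every component must be up."""
    return prod(components)


def redundant_availability(single, copies):
    """Availability when any one of `copies` independent replicas suffices."""
    return 1 - (1 - single) ** copies


# Hypothetical availabilities for one region: web tier, database, external dependency.
region = [0.9995, 0.999, 0.995]

single_region = series_availability(region)
two_regions = redundant_availability(single_region, copies=2)

print(f"single region: {single_region:.4%}")
print(f"two regions:   {two_regions:.4%}")
```

The chained result is always lower than the weakest component on its own, which is why the least resilient dependency deserves the most attention.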

Takeaways:

- Avoid single-region dependency.

- Regularly practice failover procedures.

- Prepare for application/cloud failures and adopt a proactive approach to ensure resilience.

4. Stop Using Entity Framework as a DTO Provider - Chris Klug

In a thought-provoking session, Chris Klug presented a new perspective on working with Entity Framework (EF). Klug emphasized the importance of adhering to object-oriented programming (OOP) principles, encouraged developers to stop using EF as a DTO (Data Transfer Object) provider, and explored the implications of incorporating business logic within entity objects.

- Moving away from EF as a DTO provider: Chris Klug challenged the conventional use of Entity Framework as a simple data access layer. He urged developers to consider leveraging EF as a powerful tool for encapsulating business logic within the entity objects themselves. By incorporating business logic directly into the entities, developers can create more cohesive and maintainable codebases.

- Benefits of embracing OOP principles: Klug emphasized the advantages of embracing object-oriented programming principles when working with EF. By placing business logic within the entity objects, developers can achieve better encapsulation, improved code organization, and enhanced code readability. This approach aligns with the fundamental principles of OOP, leading to cleaner and more maintainable code.

- Handling EF migrations during application deployment: In addition to discussing the paradigm shift in utilizing EF, Klug shared valuable insights on handling EF migrations during the deployment of applications. He emphasized the importance of planning for database changes and provided practical tips for managing EF migrations effectively. By understanding the best practices for EF migrations, developers can ensure a smooth deployment process without compromising data integrity or causing disruptions for end-users.
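
To illustrate the difference between a DTO-style entity and one that owns its business rules, here is a minimal sketch. It is written in Python as a language-neutral stand-in for a C# entity class and is not code from the session; `Order`, `OrderLine`, and the rules they enforce are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class OrderLine:
    sku: str
    quantity: int
    unit_price: Decimal


class Order:
    """Entity that guards its own state instead of exposing settable fields."""

    def __init__(self, order_id):
        self._id = order_id
        self._lines = []
        self._submitted = False

    @property
    def lines(self):
        # Hand out a read-only copy so callers cannot bypass add_line().
        return tuple(self._lines)

    @property
    def total(self):
        return sum((l.quantity * l.unit_price for l in self._lines), Decimal("0"))

    def add_line(self, sku, quantity, unit_price):
        if self._submitted:
            raise ValueError("cannot change a submitted order")
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self._lines.append(OrderLine(sku, quantity, unit_price))

    def submit(self):
        if not self._lines:
            raise ValueError("cannot submit an empty order")
        self._submitted = True


order = Order(1)
order.add_line("SKU-1", 2, Decimal("9.50"))
order.submit()
```

With a plain DTO, the checks in `add_line` and `submit` would have to be repeated by every caller; here the entity is the single place where they live.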

Takeaways:

- Rethinking the usage of Entity Framework can improve codebases

- Move away from using EF solely as a DTO provider

- Embrace OOP principles for more maintainable, scalable, and robust applications

- Handling EF migrations during application deployment is crucial for data consistency

- Ensure a seamless user experience by managing EF migrations effectively

- Incorporate these takeaways into development practices

- Unlock the true potential of Entity Framework

- Deliver higher quality software solutions

5. Writing Code with Code: Getting Started with the Roslyn APIs – Steve Gordon

Steve Gordon, a developer at Elastic, showcased the power of Roslyn APIs for automatic code generation in .NET applications. His session provided an overview of the basics, highlighted important considerations, and left attendees excited about the possibilities of “Writing Code with Code.”

- Roslyn APIs: Steve Gordon introduced the Roslyn APIs, part of the .NET Compiler Platform, which enable developers to programmatically manipulate code. Understanding the basics of Roslyn APIs is crucial for leveraging their potential in automating code generation, refactoring, and analysis.

- Automatic Code Generation: Gordon demonstrated how Elastic’s .NET client leverages Roslyn APIs for automatic code generation. By writing code that writes code, developers can automate repetitive tasks, minimize errors, and enhance productivity. This approach streamlines development workflows and promotes more maintainable and scalable applications.

- Considerations for Success: While exploring “Writing Code with Code,” Gordon emphasized the importance of maintaining code quality. Developers must strike a balance between automation and readability, ensuring that generated code is clear, understandable, and efficient. Adhering to best practices maximizes the benefits of Roslyn APIs.

- Unlocking New Possibilities: Steve Gordon’s session sparked curiosity and highlighted the endless possibilities of “Writing Code with Code.” By harnessing the power of Roslyn APIs, developers can automate tasks, accelerate development processes, and elevate the overall quality of their codebases.
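
The idea of code that writes code can be sketched outside .NET as well. The example below does not use Roslyn and does not reflect how Elastic’s client is generated; it uses Python’s standard `ast` module as a rough analogue of a syntax-tree API. The function names and the `SearchRequest` class are hypothetical.

```python
import ast


def generate_class_source(class_name, fields):
    """Emit source for a class whose constructor stores each field."""
    if not fields:
        raise ValueError("at least one field is required")
    lines = [
        f"class {class_name}:",
        f"    def __init__(self, {', '.join(fields)}):",
    ]
    lines += [f"        self.{name} = {name}" for name in fields]
    source = "\n".join(lines) + "\n"
    ast.parse(source)  # raises SyntaxError if the generated code is invalid
    return source


def defined_classes(source):
    """Walk the syntax tree and list the classes the source declares."""
    tree = ast.parse(source)
    return [node.name for node in ast.walk(tree) if isinstance(node, ast.ClassDef)]


source = generate_class_source("SearchRequest", ["index", "query"])

# Compile and load the generated code, then use it like handwritten code.
namespace = {}
exec(compile(source, "<generated>", "exec"), namespace)
request = namespace["SearchRequest"]("logs", "error")

print(source)
```

Parsing the generated text before using it is a cheap way to catch generator bugs early, which fits the point above about keeping generated code clear and correct.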

Takeaways:

- Roslyn APIs enable automatic code generation in .NET applications

- Utilize Roslyn APIs to automate repetitive tasks

- Increase productivity by leveraging code generation

- Build more maintainable codebases with the help of Roslyn APIs

- Striking a balance between automation and code quality is crucial

- Explore the possibilities of “Writing Code with Code”

- Push boundaries and achieve remarkable results through code generation

6. Confidential Computing with Always Encrypted Using Enclaves - Pieter Vanhove

Pieter Vanhove led this discussion on confidential computing with Always Encrypted using enclaves. Always Encrypted, a feature introduced in SQL Server 2016 and available in Azure SQL Database and Cosmos DB, enables encryption of specific database columns, providing an extra layer of security for sensitive data. With confidential computing capabilities, sensitive data can be processed without being accessed in plaintext, protecting it from unauthorized users. The session highlighted the main benefits of Always Encrypted secure enclaves, discussed best practices for configuration, and addressed the latest investments in Azure SQL and other Azure data services.

Takeaways:

- There are two encryption methods available: deterministic, which always results in the same encrypted value and offers more functionalities like grouping and indexing in SQL, and randomized, which is less predictable but provides fewer functionalities.

- However, encryption does have limitations, such as the inability to execute “WHERE” queries. To overcome these limitations, SQL Server 2019 introduced secure enclaves. A secure enclave is a protected region of memory within the database process where data is decrypted, and operations like WHERE and GROUP BY can be executed. This allows sensitive data to remain protected without being exposed to the client.

- To ensure trust in the enclave, attestation may be required as proof that the client can trust the enclave. There is a performance impact of approximately 20% when using encryption. Additionally, implementing encryption may require refactoring of the application, as only a few database drivers currently support this feature. SQL clients need to use parameters for secure communication.

- It’s important to note that Always Encrypted does not hide data from everyone: whoever holds the column master key, typically an administrator, can decrypt the data at any time.
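
The difference between deterministic and randomized encryption can be shown with a toy example. This sketch is for illustration only: it is not secure, it is not the algorithm SQL Server uses, and the helper names are hypothetical. It only demonstrates the property described above, namely that deterministic ciphertexts can be compared for equality while randomized ones cannot.

```python
import hashlib
import hmac
import os


def _keystream(key, nonce, length):
    """Toy keystream built from HMAC-SHA256 in counter mode (illustration only)."""
    out = b""
    counter = 0
    while len(out) < length:
        block = b"stream" + nonce + counter.to_bytes(4, "big")
        out += hmac.new(key, block, hashlib.sha256).digest()
        counter += 1
    return out[:length]


def encrypt(key, plaintext, deterministic):
    if deterministic:
        # Nonce derived from the plaintext: same input, same ciphertext.
        nonce = hmac.new(key, b"nonce" + plaintext, hashlib.sha256).digest()[:16]
    else:
        # Fresh random nonce: same input, different ciphertext each time.
        nonce = os.urandom(16)
    stream = _keystream(key, nonce, len(plaintext))
    return nonce + bytes(a ^ b for a, b in zip(plaintext, stream))


def decrypt(key, ciphertext):
    nonce, body = ciphertext[:16], ciphertext[16:]
    stream = _keystream(key, nonce, len(body))
    return bytes(a ^ b for a, b in zip(body, stream))


key = os.urandom(32)
value = b"123-45-6789"

det_a = encrypt(key, value, deterministic=True)
det_b = encrypt(key, value, deterministic=True)
rnd_a = encrypt(key, value, deterministic=False)
rnd_b = encrypt(key, value, deterministic=False)

print("deterministic ciphertexts equal:", det_a == det_b)
print("randomized ciphertexts equal:  ", rnd_a == rnd_b)
```

Because equal plaintexts produce equal deterministic ciphertexts, a server can index and group on them without the key, at the cost of revealing which rows share a value.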

7. Microsoft Sentinel: Find your way in the cyber threat jungle! - Els Putzeys

During this session, we gained firsthand experience of how Microsoft Sentinel can effectively collect data to detect security issues and subsequently develop corresponding countermeasures. Throughout the demonstrations, the presenter skillfully composed Kusto queries, a familiar tool for individuals working with Azure logging. However, the most striking revelation was the considerable manual effort involved in constructing any form of detection mechanism within Sentinel. It necessitates meticulous examination of incoming data and a deep understanding of transforming that information into effective protective measures.

Takeaways:

- Microsoft Sentinel effectively collects and analyzes data to detect security issues and develop countermeasures.

- The presenter demonstrated skillful use of Kusto queries, a familiar tool for Azure logging.

- The manual effort required to construct detection mechanisms in Sentinel was highlighted, emphasizing the need for careful examination of incoming data and understanding its transformation into effective protective measures.

- Microsoft Sentinel is a SIEM and SOAR tool that helps consolidate and analyze security-related logs from multiple devices and data stores.

- Microsoft Sentinel filters out regular usage logs and focuses on data indicating security breaches or anomalies.

- It enables the implementation of workflows for reacting to security incidents.

- The architecture involves Sentinel consuming data from a Log Analytics workspace, which consolidates logs from connected data sources.

- Data connectors, such as the Defender family and Defender for Office 365, provide logs for analysis by Microsoft Sentinel.

- Custom Kusto queries can be set up to alert on security incidents, including adding involved entities and configuring frequency of occurrences.

- Threat hunting capabilities allow proactive searching for potential security issues, with preset queries based on OWASP and a live-stream of current security activities.

- Automation features enable actions on raised incidents, including adding data, creating tasks for incident responders, and utilizing playbooks for more advanced actions like reporting to Microsoft Teams or revoking user access.
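
The logic behind a threshold-based detection rule can be sketched in a few lines. In Sentinel this would be expressed as a Kusto query inside an analytics rule; the Python below only mirrors the idea and is not from the session. The event fields, the ten-minute window, and the threshold of five are hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta


def failed_signin_alerts(events, now, window=timedelta(minutes=10), threshold=5):
    """Return (user, count) pairs for users with too many recent failures."""
    cutoff = now - window
    counts = Counter(
        event["user"]
        for event in events
        if event["result"] == "failure" and cutoff <= event["time"] <= now
    )
    # The user is the "entity" attached to the alert.
    return sorted((user, n) for user, n in counts.items() if n >= threshold)


now = datetime(2023, 5, 23, 12, 0)
events = (
    [{"user": "alice", "result": "failure", "time": now - timedelta(minutes=m)}
     for m in range(6)]
    + [{"user": "bob", "result": "failure", "time": now - timedelta(minutes=1)}]
    + [{"user": "carol", "result": "success", "time": now - timedelta(minutes=2)}]
)

alerts = failed_signin_alerts(events, now)
print(alerts)
```

Choosing the window and threshold is exactly the manual tuning effort the session highlighted: too strict and real attacks are missed, too loose and responders drown in noise.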

8. Mind-Controlled Drones and Robots - Sherry List & Goran Vuksic

Takeaways:

- The session featured a live demonstration of using brainwaves measured by “The Crown” headpiece to control a robot’s movements.

- While the practical example shown felt somewhat limited, it highlighted the scientific understanding of different brainwaves and their potential for mapping to external devices.

- Companies like Neuralink are actively working on more accurate measurement of brain activity, which may surpass the capabilities of current external technologies.

- The session showcased the exciting possibilities of thought-controlled robotics and how advanced machine learning algorithms can interpret brainwave data to enable precise commands for drones and robots.

- The technology has the potential to revolutionize human-machine interaction and open up new avenues for exploration and innovation in various fields.

9. Building AI-enriched Applications with .NET on AWS - Alexander Dragunov

Takeaways:

- AWS offers a range of interesting services that can be implemented into your code using software development kits (SDKs).

- The session primarily focused on demonstrating different AWS services by utilizing various HTTP clients to call the Amazon APIs.

- The services were found to be straightforward to use, which is beneficial for developers familiar with NuGet packages and dependency injection (DI).

- AWS AI services provide powerful capabilities such as image and video analysis, natural language processing, language detection and translation, and text extraction from forms and documents.

- These AI services can be used independently or combined to build sophisticated applications with AI capabilities.

- The session showcased how to iteratively enhance existing .NET applications with AI capabilities using the AWS SDK for .NET.

- While the session had limited additional learning beyond the usage of the services, it provided insights into the ease of integration and the potential of leveraging AWS AI services to enrich applications.

Overall, it was a great day with many insights gained.

Stay tuned for our recap of Techorama: Day Two!
