Unfortunately, receiving emails for alerts can be annoying and is not a flexible approach for handling alerts. What if I want to automate a process when our database is overloaded? Should I parse my mailbox? No!

Luckily, you can configure your Azure Alerts to push to a webhook where you process the notifications, and Azure Logic Apps is a perfect fit for this! By configuring all your Azure Alerts to push their events to a Logic App, you decouple the processing from the notification medium and can easily change the way an event is handled.

Today I will show you how you can push all your Azure Alerts to a dedicated Slack channel, but you can use other Logic App Connectors to fit your needs as well!

Creating a basic Azure Alert Handler

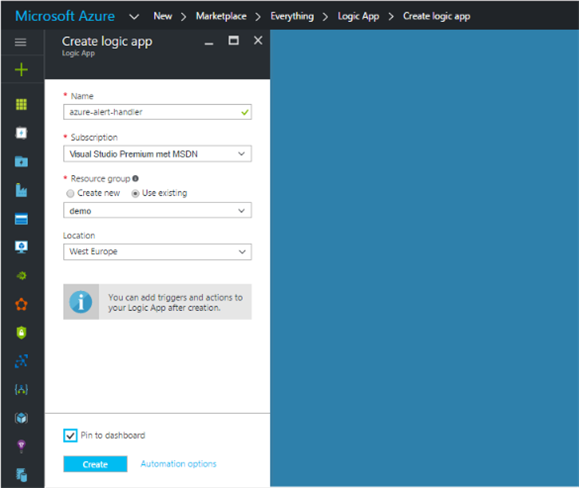

For starters, we will create a new Logic App that will receive all the event notifications. In this example it is called azure-alert-handler.

Note: It is a best practice to host it as close to your resources as possible, so I provisioned it in West Europe.

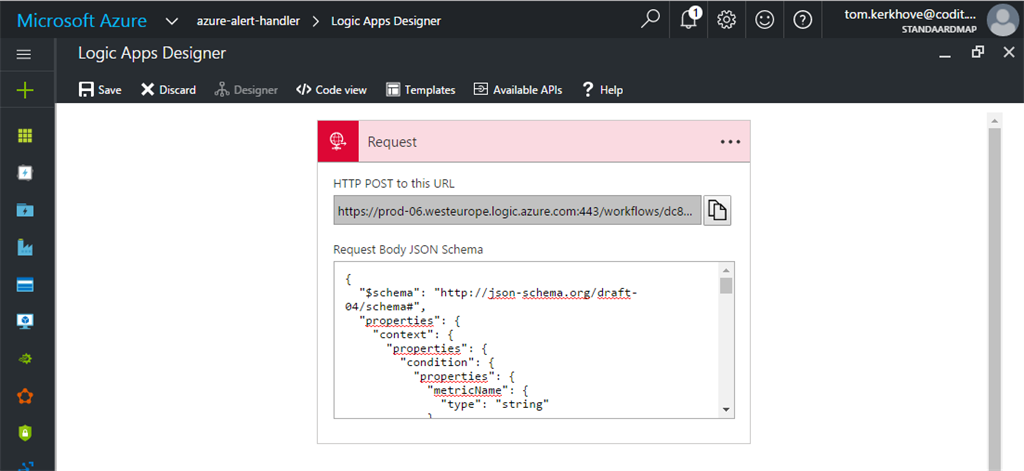

Once it is provisioned, we can start by adding a new Request Trigger connector. This trigger exposes a webhook that can be called on a dedicated URL. This URL is generated once you save the Logic App for the first time.

As you can see, you can also define the schema of the events that will be received, but more on this later.

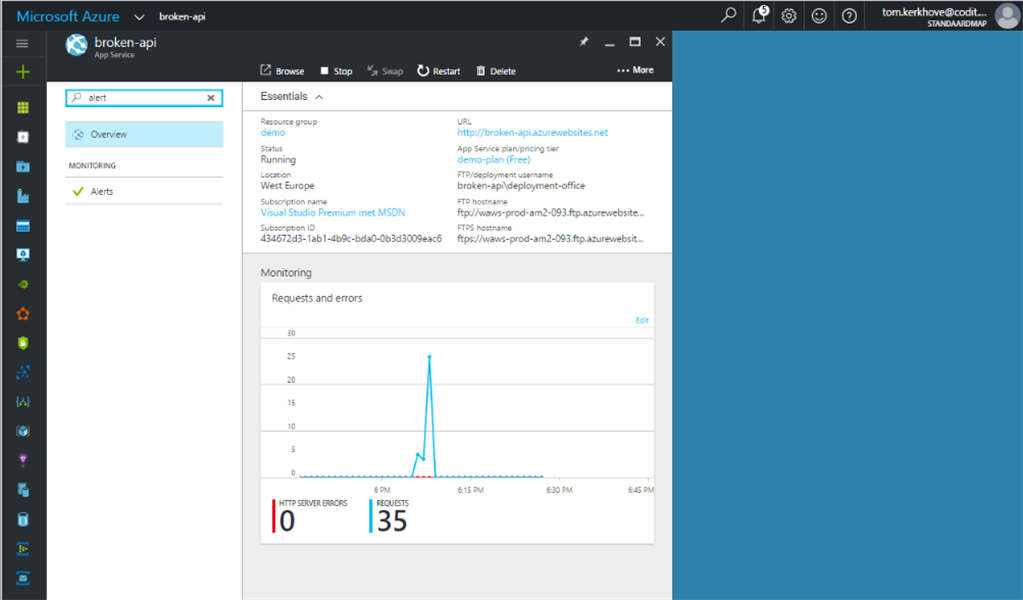

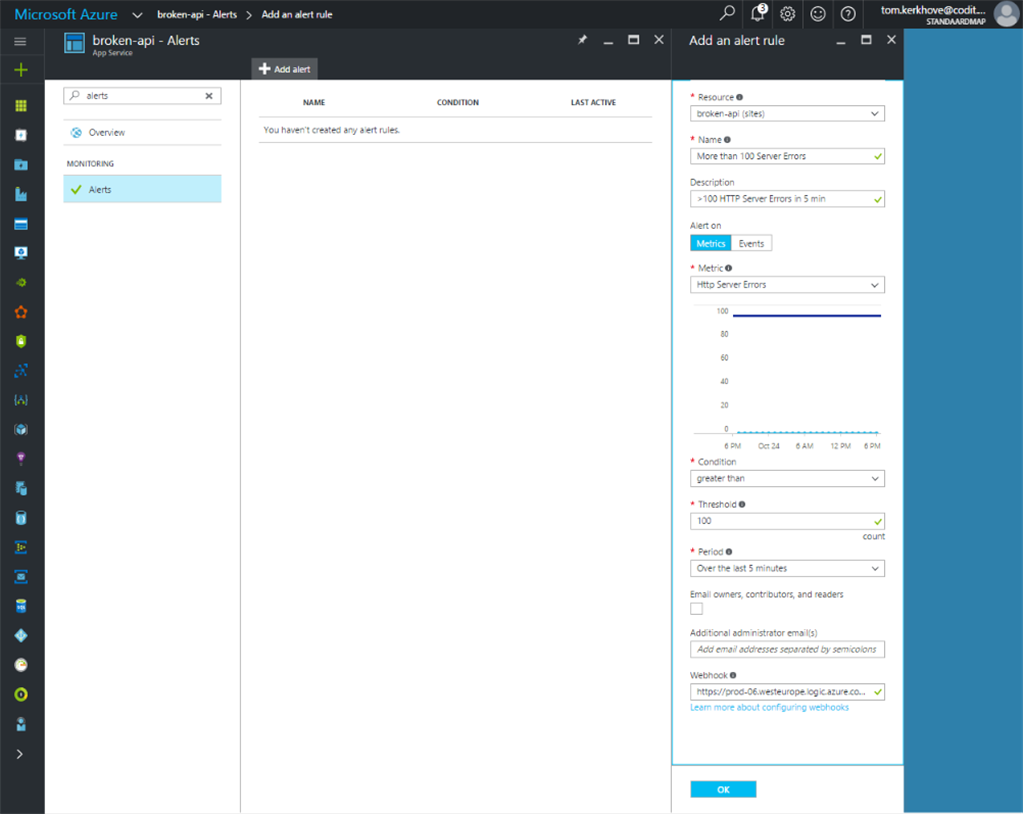

Now that our Logic App is ready to receive events, we can configure our Azure Alerts. In this scenario we will create an alert on an API, but you could do this on almost any Azure resource. Here we will configure it to get a notification once there are more than 100 HTTP Server Errors in 5 minutes.
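To make the condition concrete, here is a minimal sketch in Python of what "more than 100 HTTP Server Errors in 5 minutes" means. Azure evaluates the rule for you, so this is only an illustration of the threshold logic and not how Azure implements it; the function name and the timestamps are made up.

```python
from datetime import datetime, timedelta


def exceeds_threshold(error_times, now, threshold=100, window=timedelta(minutes=5)):
    """Return True when more than `threshold` errors fall inside the window ending at `now`."""
    window_start = now - window
    # Count only the errors that happened inside the evaluation window.
    recent_errors = sum(1 for t in error_times if window_start < t <= now)
    return recent_errors > threshold


# Hypothetical data: one server error per second for the last 101 seconds.
now = datetime(2017, 1, 1, 12, 0, 0)
burst = [now - timedelta(seconds=i) for i in range(101)]
print(exceeds_threshold(burst, now))
```

Note that exactly 100 errors would not trigger the alert, since the rule is "more than 100".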

To achieve this, navigate to your Web App and search for “Alerts”.

Click on “New Alert”, define what the alert should monitor and specify the URL of the Request Trigger in our Logic App.

We are good to go! Whenever our alert changes state, it will push a notification to the webhook of our Logic App.
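If you do not want to wait for a real alert, you could post a sample notification to the Request Trigger yourself. Below is a minimal sketch in Python using only the standard library. The URL is a placeholder, and the payload is a trimmed-down, made-up notification that only contains the fields used later in this post; a real notification contains more.

```python
import json
import urllib.request

# Placeholder: copy the real URL from the Request Trigger after the first save.
WEBHOOK_URL = "https://example.invalid/azure-alert-handler"

# Made-up sample with only the fields used in this post.
SAMPLE_ALERT = {
    "status": "Activated",
    "context": {
        "name": "http-server-errors",
        "resourceName": "my-api",
        "resourceRegion": "West Europe",
        "portalLink": "https://portal.azure.com/#resource/placeholder",
    },
}


def build_alert_request(url, alert):
    """Build (but do not send) a JSON POST request for the webhook."""
    body = json.dumps(alert).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_alert(url, alert):
    """Send the notification and return the HTTP status code."""
    with urllib.request.urlopen(build_alert_request(url, alert)) as response:
        return response.status
```

Calling `send_alert(WEBHOOK_URL, SAMPLE_ALERT)` with your real trigger URL should result in a new run showing up in the Logic App.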

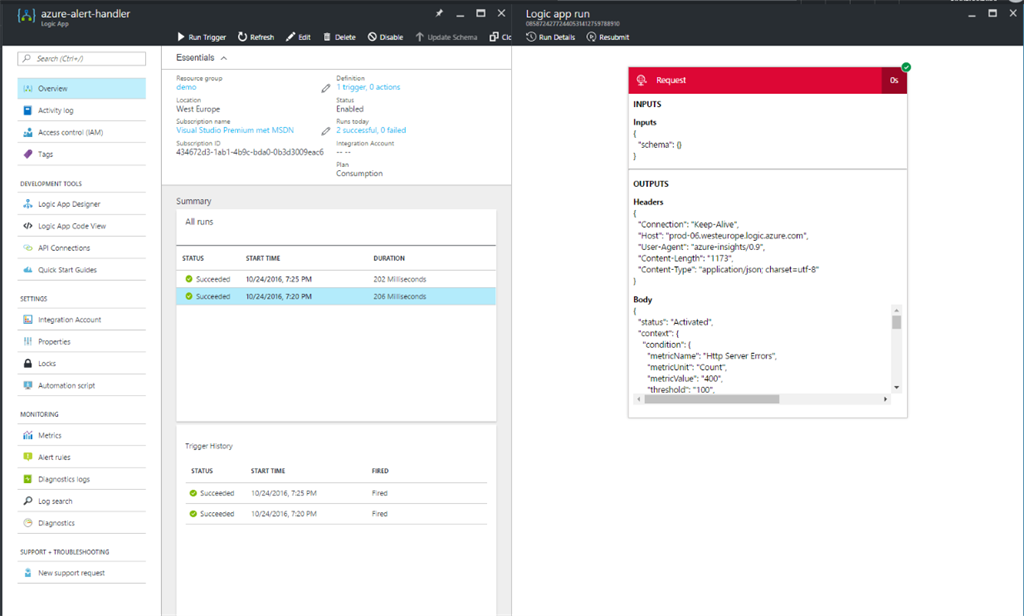

You can see all events coming into our Logic App by using the Trigger History and All Runs of the Logic App itself.

When you select a run, you can view its details and thus the payload that was sent. Based on this sample payload, I generated the schema with jsonschema.net and used that to define the schema of the webhook.

Didn’t specify the schema? Don’t worry, you can still change it!
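The idea of deriving a schema from a sample payload can be sketched in a few lines of Python. This is a simplified illustration and not how jsonschema.net works internally; the sample payload is made up and only contains the fields used in this post.

```python
import json


def infer_schema(value):
    """Infer a minimal JSON Schema fragment from a sample value."""
    if isinstance(value, dict):
        return {
            "type": "object",
            "properties": {key: infer_schema(item) for key, item in value.items()},
        }
    if isinstance(value, list):
        # Simplification: describe the items by the first element only.
        schema = {"type": "array"}
        if value:
            schema["items"] = infer_schema(value[0])
        return schema
    if isinstance(value, bool):  # check bool before int: bool is a subclass of int
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "integer"}
    if isinstance(value, float):
        return {"type": "number"}
    if value is None:
        return {"type": "null"}
    return {"type": "string"}


# Made-up sample payload with only the fields used in this post.
sample_payload = {
    "status": "Activated",
    "context": {
        "name": "http-server-errors",
        "resourceName": "my-api",
        "resourceRegion": "West Europe",
        "portalLink": "https://portal.azure.com/#resource/placeholder",
    },
}

schema = infer_schema(sample_payload)
print(json.dumps(schema, indent=2))
```

The printed schema is what you would paste into the Request Trigger so that the fields become available in later steps.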

While this is great, I don’t want to come back every 5 minutes to see whether or not there were new events.

Since we are using Slack internally, it is a good fit to consolidate all alerts in a dedicated channel so that we have everything in one place.

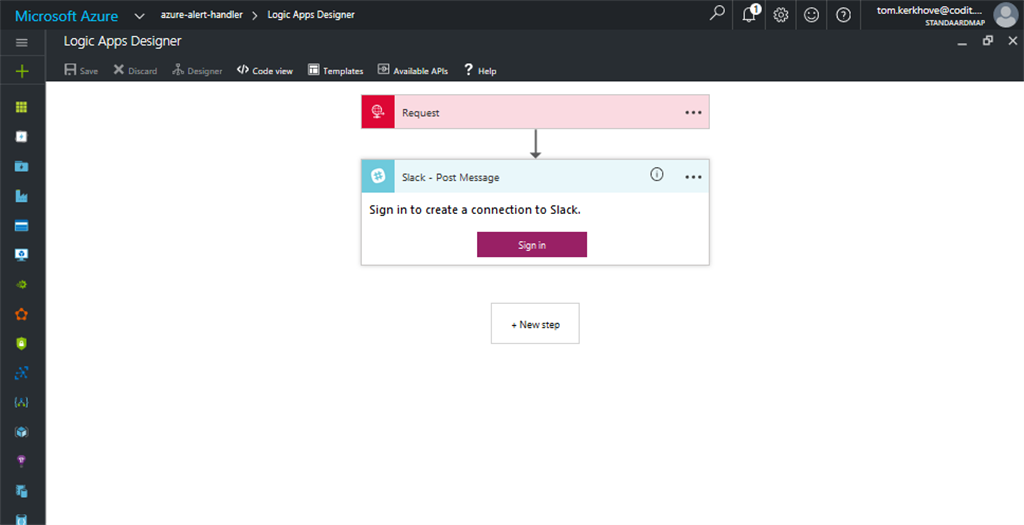

To push the notification to Slack, add a new Slack (Post Message) Action and authenticate with your Slack team.

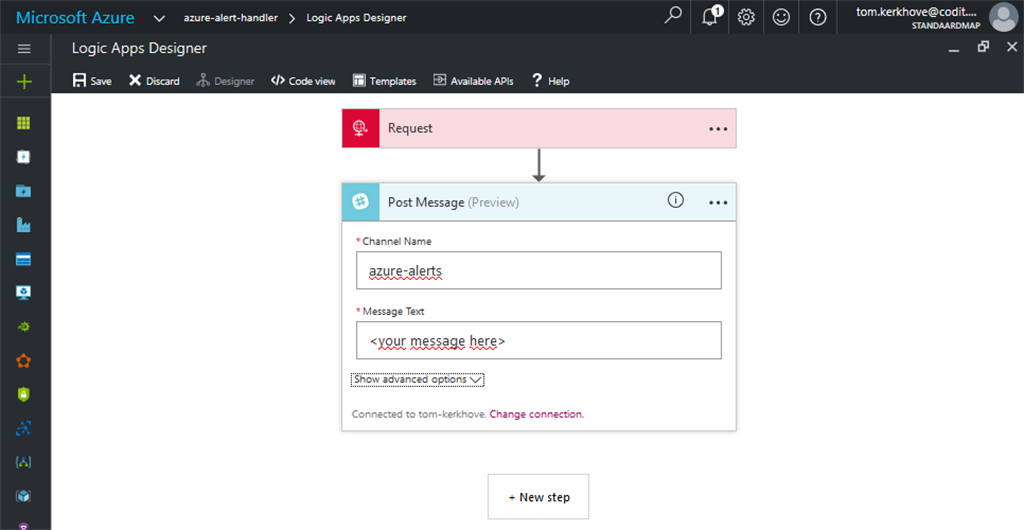

Once you are authenticated, it is fairly simple to configure the connector: you tell it which channel to post to, what the message should look like, and optionally other things such as the name of the bot.

Here is an overview of all the settings of the Slack connector that you can use.

I used the @JSON function to parse the JSON input dynamically; later on we will have a look at how we can simplify this.

Alert *’@{JSON(string(trigger().outputs.body)).context.name}’* is currently *@{JSON(string(trigger().outputs.body)).status}* for *@{JSON(string(trigger().outputs.body)).context.resourceName}* in @{JSON(string(trigger().outputs.body)).context.resourceRegion}_(@{JSON(string(trigger().outputs.body)).context.portalLink})_
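If the expression above is hard to read, this Python sketch shows roughly what it does: parse the request body as JSON and drop a few fields into a Slack-formatted string. The function name and the sample body are made up for illustration, and the spacing of the message is my own choice.

```python
import json


def format_alert_message(raw_body):
    """Parse the raw webhook body and build the Slack message text."""
    alert = json.loads(raw_body)  # plays the role of JSON(string(...)) in the expression
    context = alert["context"]
    return (
        f"Alert *'{context['name']}'* is currently *{alert['status']}* "
        f"for *{context['resourceName']}* in {context['resourceRegion']} "
        f"_({context['portalLink']})_"
    )


# Made-up request body with only the fields used by the message.
raw_body = json.dumps({
    "status": "Activated",
    "context": {
        "name": "http-server-errors",
        "resourceName": "my-api",
        "resourceRegion": "West Europe",
        "portalLink": "https://portal.azure.com/#resource/placeholder",
    },
})

print(format_alert_message(raw_body))
```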

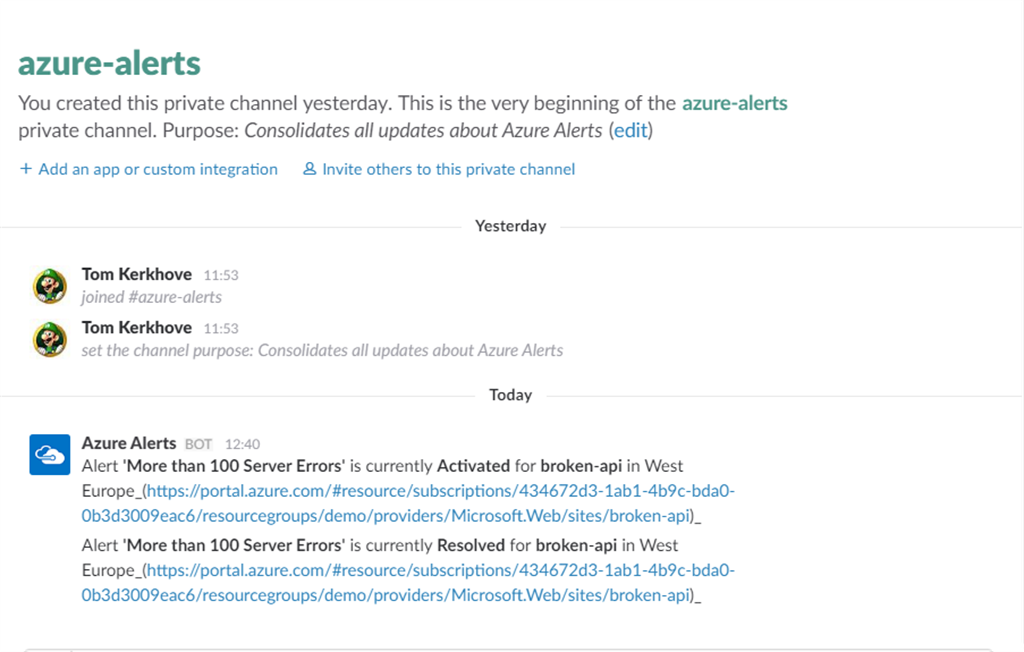

Once our updated Logic App is triggered you should start receiving messages in Slack.

Tip: You can also resubmit a previous run, which takes the original input and runs the Logic App again with that information.

Awesome! However, it tends to be a bit verbose because it includes a lot of information, even when the alert is already resolved. Nothing we can’t fix with Logic Apps!

Sending specific messages based on the alert status

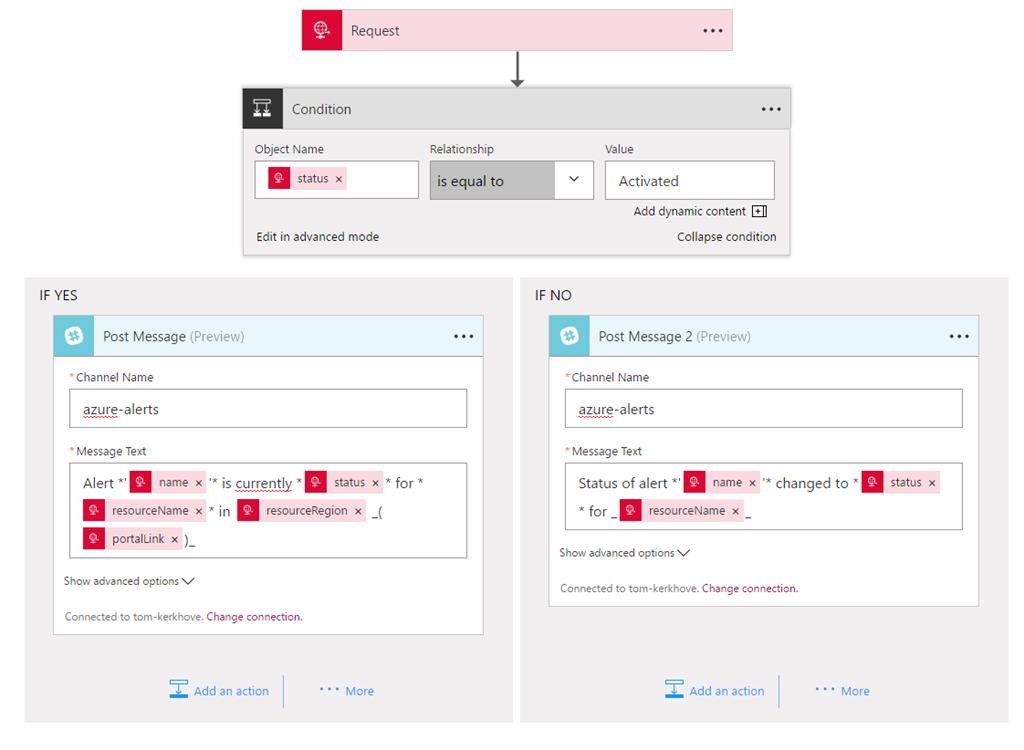

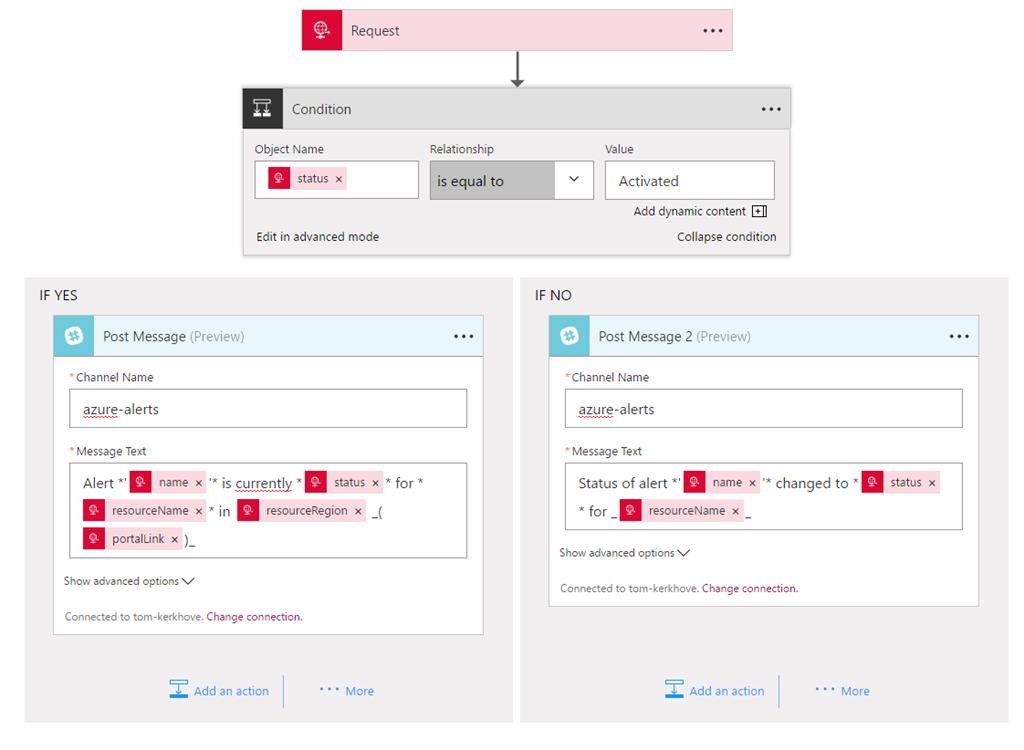

In Logic Apps you can add a Condition that allows you to execute certain logic if some criteria are met.

In our case we will create a more verbose message when a new Alert is ‘Activated’ while for other statuses we only want to give a brief update about that alert.

As you can see, we are no longer parsing the JSON dynamically but rather using dynamic content, thanks to our Request Trigger schema. This allows us to create more human-readable messages while hiding the complexity of the JSON input.
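The branching itself is simple. Here is a minimal sketch of the same decision in Python; the exact wording of both messages is an assumption on my part, and the function name is made up.

```python
def build_slack_message(alert):
    """Return a verbose message for newly activated alerts and a brief one otherwise."""
    context = alert["context"]
    if alert["status"] == "Activated":
        # New alert: include where it happened and a link to the portal.
        return (
            f"Alert *'{context['name']}'* was *Activated* "
            f"for *{context['resourceName']}* in {context['resourceRegion']} "
            f"_({context['portalLink']})_"
        )
    # Any other status: a short update is enough.
    return f"Alert *'{context['name']}'* is now *{alert['status']}*"


# Made-up alert with only the fields used in this post.
alert = {
    "status": "Resolved",
    "context": {
        "name": "http-server-errors",
        "resourceName": "my-api",
        "resourceRegion": "West Europe",
        "portalLink": "https://portal.azure.com/#resource/placeholder",
    },
}

print(build_slack_message(alert))
```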

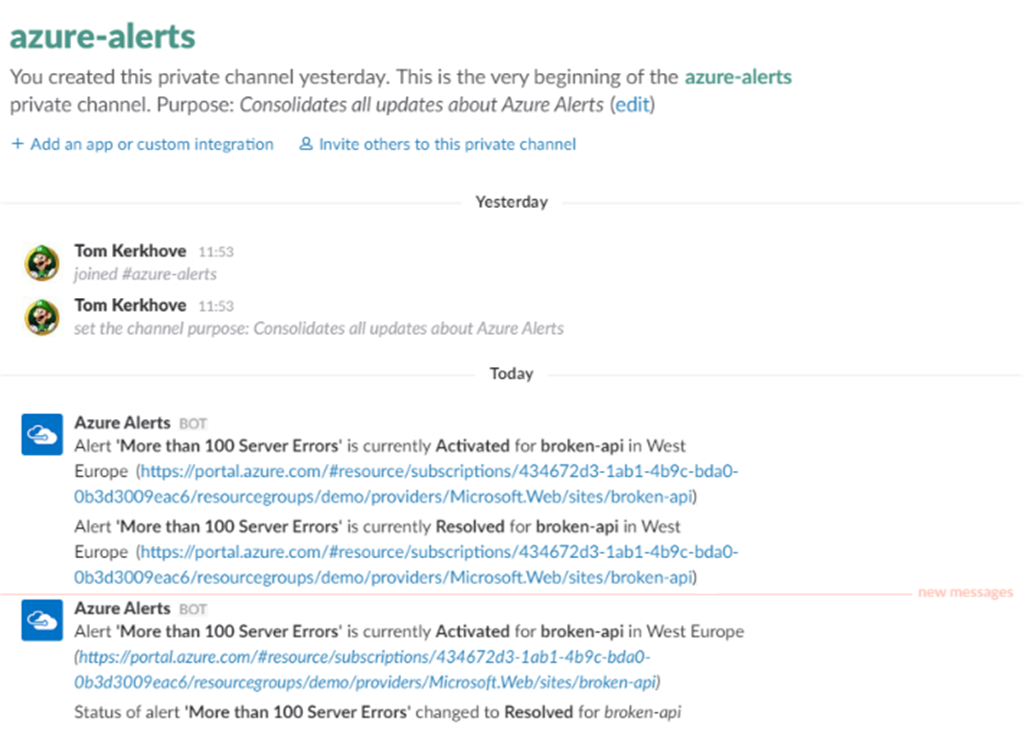

Once new alerts come in, the Logic App will now send customized messages based on the event!

Monitoring the Monitoring

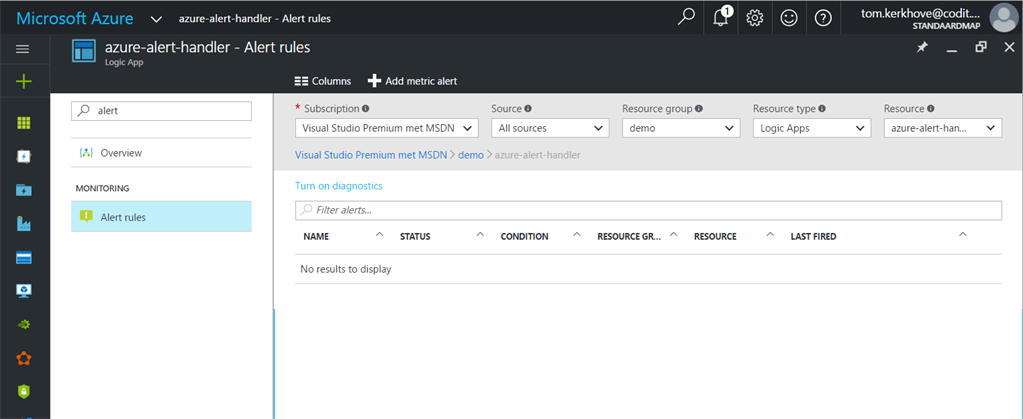

The downside of centralizing the processing of something is that you create a single point of failure. If our Logic App is unable to process events, we won’t see any Slack messages and will assume that everything is fine, while it certainly isn’t. Because of that, we need to monitor the monitoring!

If you search for “Alerts” in your Logic App you will notice that you can create alerts for it as well.

As you can see there are no alerts available by default so we will add one.

In our case we want to be notified if a certain number of runs fail. When that happens, we want to receive an email. You could set up another webhook as well, but I think emails are a good fit here.

Wrapping up

Thanks to this simple Logic App I can now easily customize the processing of our Azure Alerts without having to change any Alerts.

This approach also gives more flexibility in how we process them. If we have to process database alerts differently, or want to replace Slack with SMS or another integration, it is just a matter of changing the flow.

But don’t forget to monitor the monitoring!

Thanks for reading,

Tom Kerkhove.

PS – Thank you Glenn Colpaert for the jsonschema.net tip!
