Who’s ready to battle? On one side, we have the seasoned visual workflows of Azure Logic Apps. On the other, we have the new kid on the block: DAPR workflow, representing the workflow-as-code family.

The two sides

Visual workflows are graphical representations of a process, allowing users to design and configure workflows using a visual interface. They involve drag-and-drop components and connectors to define the sequence of steps and logic. While they generally integrate well with existing development tools, testing can sometimes be hard.

Workflows as code involve defining and implementing workflows using programming languages. The workflow logic is expressed in code and can be version-controlled, tested, and deployed like any other software artifact.

When it comes to deciding when to use what, there is a commonly agreed-upon understanding that visual workflows like LogicApps are a better fit for enterprise integration scenarios, while workflow-as-code solutions seem more appropriate for orchestrating activities that are scoped to a single application. With this exercise, I want to compare the two side by side, experiment with the implementation process, and gain an overall understanding of the features, functionality, and usability.

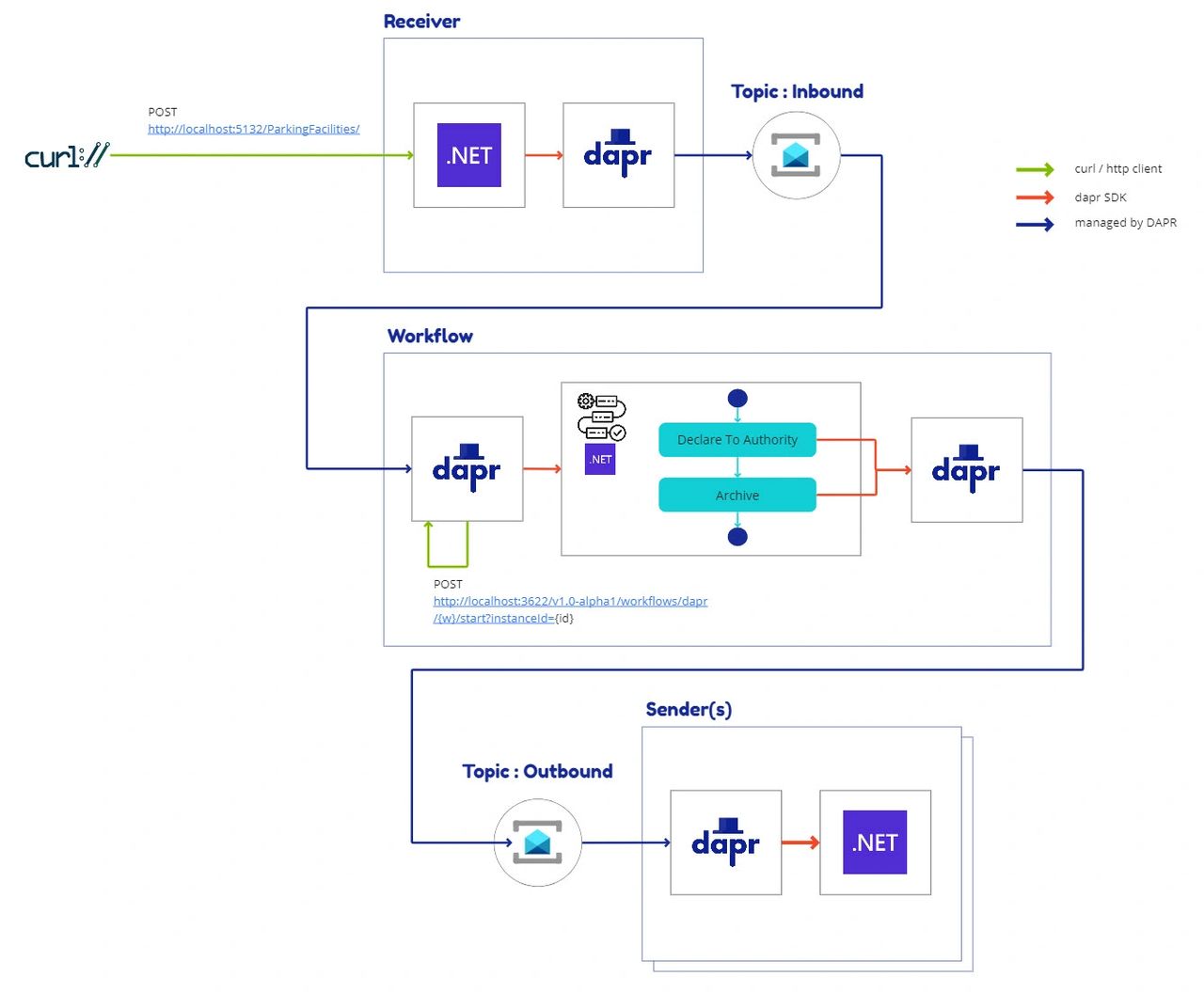

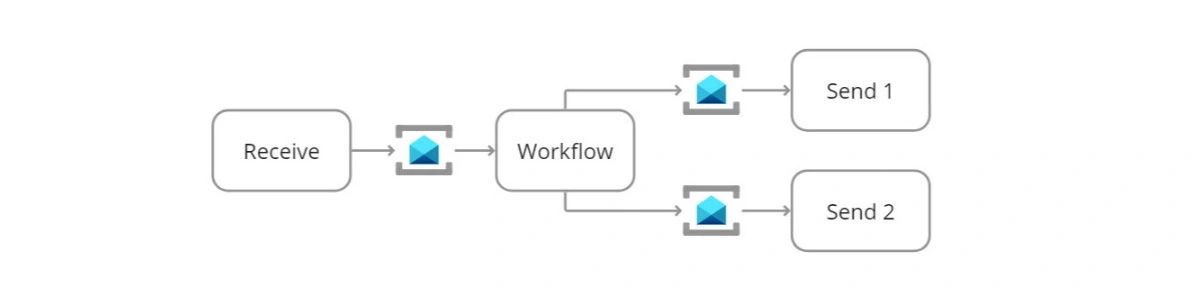

To get a better understanding of the two contenders, I built an extremely simple integration in which receivers and senders are decoupled from the workflow via Azure Service Bus. The setup is depicted in the image below.

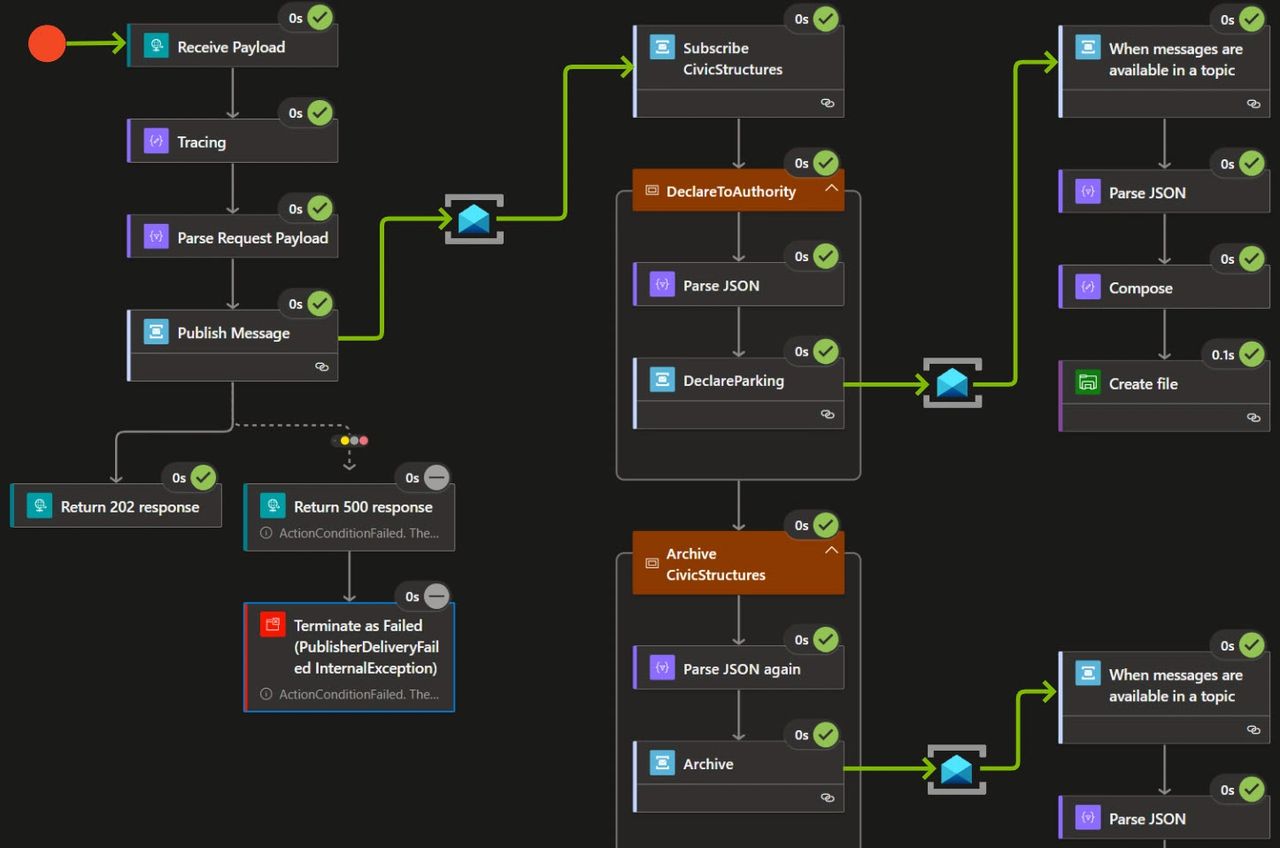

Workflow implementation with LogicApps Standard

Although I don’t consider myself to be an expert in LogicApps, I did have some experience with the technology a few years ago. As a result, I was able to quickly develop the scenario I had in mind. While I needed to refer to the documentation to have a better understanding of the function expression syntax and the message parsing, I found that delivering messages, observing the workflow, and managing operations were incredibly simple and worked like a charm.

You can find the source code here: https://github.com/MassimoC/battle-of-workflow/tree/main/logicapps/src

Key findings, benefits and limitations

➕Rapid Development: With LogicApps, development cycles can be shorter because the focus is on configuration rather than coding. This doesn’t come for free: it takes some time to become familiar with concepts such as triggers, actions, connectors, conditions, and loops, and to understand how these components work together to create workflows.

➕Ease of Use: LogicApps are intuitive and user-friendly, making them accessible to users who may not have coding expertise. LogicApps comes with an extension for Visual Studio Code that provides a simplified interface for designing and managing LogicApps without leaving your development environment.

➕Visualization: The graphical representation provides a clear visual understanding of the workflow, making it easier to communicate and collaborate with colleagues and stakeholders. The ability to get the workflow history and visualize every single step along with status, inputs, and outputs makes troubleshooting easy and intuitive.

➕Managing workflow runs: Using an intuitive user interface, it is possible to access the different workflow runs and when necessary, trigger, cancel, and resubmit a workflow.

➕Adapters: LogicApps provides a wide range of connectors/adapters, allowing you to quickly integrate and automate workflows with various systems and services.

➕ State management: A workflow needs to survive a temporary outage and resume from the exact point where it stopped once the outage is resolved. LogicApps workflows are stateful but can also work in a stateless manner if necessary. The state can be stored in a Storage account or SQL Server. Having more than one option might ease adoption, especially in hybrid scenarios.

➕/ ➖Observability: The integration with Application Insights is great. I was able to get full end-to-end visibility and correlate every single step of the workflow, including the interactions with Service Bus and the time spent in the queue. LogicApps allows you to inject data into the monitoring transactions, retrieve the stored information with KQL, and build visualizations and reports. The downside is that only Application Insights is supported.

➖Complexity: LogicApps may not be suitable for highly complex workflows that require intricate logic or extensive customization. If you try to put too much logic into a single LogicApp, you will end up with a fragile monster workflow that is difficult to maintain.

➖Flexibility / Extensibility: The drag-and-drop nature of visual LogicApps may limit the flexibility and extensibility of the workflow design. Most of the time, complex logic can be delegated from LogicApps to Azure Functions. On June 22nd, Microsoft announced a new extensibility mechanism that allows calls to external libraries from a built-in action. The bottom line is that some development knowledge is mandatory to cover more complex integration scenarios.

➖ Resubmit: When a resubmit is triggered, the workflow is restarted from the beginning and every step is executed again. This is perfect when the system to integrate with is idempotent. If you want to resubmit a failed workflow and continue from where it stopped, you have to change the overall design of the LogicApps chaining. For example, to implement a task-chaining workflow that can be restarted from the point of failure, the logic apps need to be chained this way: the main workflow calls DeclareToAuthority, which in turn calls ArchiveCivicStructures. With this setup, the DeclareToAuthority LogicApp performs two actions (delivering to the authority and triggering the next step), which goes against the single-responsibility principle.

➖ Containerization: Unfortunately, LogicApps currently lacks robust native containerization capabilities (Arc for AppService has fallen behind in development). Although it is technically feasible to containerize a LogicApp, it is not yet suitable for production usage due to several missing pieces around load balancing/leader-election and the absence of a container-based workflow management dashboard. It’s worth mentioning that cloud services like LogicApps are constantly advancing, so we remain hopeful that in the near future, containerized and portable Logic Apps will become available, enabling their deployment across various environments (connected and disconnected).

➖ Unit of scale/Resource efficiency: The LogicApps Standard unit of scale is the App Service plan. It is therefore important to understand the limits and constraints of the plan you pick. Another fundamental question is how to group workflows in the same App Service plan (by business affinity, business criticality, etc. Read about this here), because those workflows will compete for the same resources and scale together.
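
The Resubmit finding above notes that a full restart is harmless when the target system is idempotent. The following is a minimal sketch of that idea in plain Python, not LogicApps code: `IdempotentReceiver`, `run_workflow`, and the message id `msg-1` are hypothetical names introduced for illustration. The receiver remembers the ids it has already handled, so running every step a second time performs no extra work.

```python
from typing import Dict


class IdempotentReceiver:
    """Hypothetical target system that remembers which message ids it has handled."""

    def __init__(self) -> None:
        self._receipts: Dict[str, str] = {}
        self.side_effects = 0  # how many times real work was performed

    def receive(self, message_id: str, body: str) -> str:
        # A repeated id returns the stored receipt instead of redoing the work.
        if message_id in self._receipts:
            return self._receipts[message_id]
        self.side_effects += 1
        receipt = f"accepted:{body}"
        self._receipts[message_id] = receipt
        return receipt


def run_workflow(message_id: str, body: str,
                 authority: IdempotentReceiver,
                 archive: IdempotentReceiver) -> None:
    """Runs every step from the top, as a full resubmit would."""
    authority.receive(message_id, body)
    archive.receive(message_id, body)


def demo() -> tuple:
    authority, archive = IdempotentReceiver(), IdempotentReceiver()
    run_workflow("msg-1", "ParkingFacility P1", authority, archive)  # first run
    run_workflow("msg-1", "ParkingFacility P1", authority, archive)  # resubmit
    return authority.side_effects, archive.side_effects


if __name__ == "__main__":
    print(demo())  # (1, 1)
```

If the receivers did not deduplicate, the second run would deliver to the authority twice, which is the case where the chained design described above becomes necessary.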

Workflow implementation with DAPR

My journey with DAPR workflow started by checking the DAPR docs, the Diagrid resources (blog post / samples) and DAPR community samples. After some trial and error, I ended up with the following setup.

✅ simple-receive: minimal API that receives the ParkingFacility message and uses the DaprClient to publish the message to the inbound topic on Azure Service Bus.

✅ simple-workflow: the subscribe-and-trigger logic and the workflow logic are combined in the same service. The service subscribes to the inbound topic and starts a new workflow by calling the “/v1.0-alpha1/workflows/dapr/Orchestration/start?instanceId={id}” endpoint (you can use the DaprWorkflowClient as well). The workflow implements the task-chaining pattern with two activities that publish the message to the outbound topic.

✅ simple-send: subscribes to the outbound topic and processes the message.
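
To make the start call in simple-workflow more concrete, here is a minimal sketch in Python (the actual services in the repository are not written in Python). It only builds the HTTP request for the endpoint quoted above and does not send it. The base URL assumes a sidecar listening on the default DAPR HTTP port 3500 on localhost, and `build_start_request`, the instance id, and the payload are hypothetical names chosen for illustration.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request

# Assumption: the DAPR sidecar listens on its default HTTP port on localhost.
DAPR_HTTP_BASE = "http://localhost:3500"


def build_start_request(instance_id: str, payload: bytes,
                        workflow_name: str = "Orchestration",
                        base_url: str = DAPR_HTTP_BASE) -> Request:
    """Build (but do not send) the POST that starts a workflow instance."""
    # Path and query parameter name are taken from the endpoint quoted in the article.
    path = f"/v1.0-alpha1/workflows/dapr/{quote(workflow_name)}/start"
    query = urlencode({"instanceId": instance_id})
    return Request(
        url=f"{base_url}{path}?{query}",
        data=payload,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    req = build_start_request("parking-001", b'{"facility": "P1"}')
    print(req.get_method(), req.full_url)
```

Passing the returned object to `urllib.request.urlopen` would perform the call against a running sidecar. Because the API is versioned as alpha, the path may change in later releases.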

Key findings, benefits and limitations

➕ Flexibility and Extensibility: DAPR workflows provide the flexibility to define complex logic and customizations using programming constructs, libraries, and frameworks. A DAPR workflow can be combined with other DAPR building blocks or with any other code you need.

➕ Collaboration and Reusability: DAPR workflow code can be easily shared, reviewed, and modified by developers, enabling better collaboration and promoting code reuse. The DAPR CLI improves the overall developer experience by allowing you to run and test your DAPRized solution on a local machine, without complex prerequisites or installation.

➕ Integration: Because a DAPR workflow is code, it integrates seamlessly with existing development tools, CI/CD pipelines, and version control systems. We likely all agree that the PR experience with a code class is way better than with a YAML or JSON file.

➕Containerization: DAPR is a cloud-native runtime that mainly targets Kubernetes-based platforms. The DAPR runtime is containerized and therefore portable. It can run on every cloud, on-premises, and edge location.

➕ State management: A workflow needs to survive a temporary outage and resume from the exact point where it stopped once the outage is resolved. The DAPR workflow state is persisted in the Actor state store (as key-value pairs). Having more than one option might ease adoption, especially in hybrid scenarios.

➕ Resubmit: The event sourcing pattern is implemented in the DAPR workflow to store the execution state. In this pattern, the workflow engine manages an append-only log of historical events, capturing the steps taken by the workflow. During replay, the workflow starts from the beginning and skips tasks that were already completed. This replay technique enables the workflow to seamlessly resume execution from any “await” point as if it had never been unloaded from memory.

➕Unit of scale/Resource efficiency: It depends on the hosting platform, but you can scale a single workflow and assign resource limits in order to try to achieve better cluster efficiency. Every workflow can scale independently if needed, which is a big benefit.

➕Observability: DAPR can be configured to emit tracing data using the widely adopted OpenTelemetry (OTEL) and Zipkin protocols. This allows you to integrate your traces with multiple observability tools like Jaeger, DataDog, Zipkin, and more.

➖ / ➕Adapters: Input and Output bindings allow integration with external sources. However, the list of available bindings is way smaller than the number of Adapters available for LogicApps.

➖ Learning Curve: As with any other workflow-as-code, DAPR requires developers to have coding skills and familiarity with the chosen SDK programming language and syntax.

➖ Maintenance: Code-based workflows may require more effort to maintain and update compared to visual workflows, especially when dealing with complex logic, multiple versions, and frequent changes.

➖ Managing workflow runs: This is the biggest missing piece. I’d love to have a dashboard to follow up on the workflows: to see which ones are scheduled, executing, completed, etc., and to interact with them, for example by canceling or replaying an instance. Today it is only possible to interact with a workflow by using the workflow SDK or by calling a REST endpoint.

➖ Alpha version: DAPR workflow is in alpha and therefore not production-ready, but it looks very promising and I am thrilled to see how it evolves in the coming months.
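
The replay behavior described under Resubmit above can be illustrated with a toy model. This is a sketch in plain Python under simplifying assumptions, not the DAPR engine or its SDK: `ReplayingWorkflow`, `call_activity`, and the simulated outage are hypothetical, and the activity names are borrowed from the LogicApps example purely for illustration. An append-only history survives the failure, so the second run starts from the top but returns recorded results instead of re-executing completed activities.

```python
from typing import Any, Callable, Dict, List


class ReplayingWorkflow:
    """Toy orchestrator context backed by an append-only event history."""

    def __init__(self, history: List[Dict[str, Any]]) -> None:
        self.history = history          # shared log that outlives a single run
        self._cursor = 0                # position in the log during this run
        self.executed: List[str] = []   # activities really executed in this run

    def call_activity(self, name: str, fn: Callable[[Any], Any], arg: Any) -> Any:
        if self._cursor < len(self.history):
            # Replay: the step already completed, so reuse its recorded result.
            event = self.history[self._cursor]
            if event["name"] != name:
                raise RuntimeError("workflow code diverged from recorded history")
            self._cursor += 1
            return event["result"]
        result = fn(arg)  # may raise; in that case nothing is recorded
        self.history.append({"name": name, "result": result})
        self._cursor += 1
        self.executed.append(name)
        return result


def declare(message: str) -> str:
    return f"declared:{message}"


def make_flaky_archive() -> Callable[[str], str]:
    calls = {"n": 0}

    def archive(value: str) -> str:
        calls["n"] += 1
        if calls["n"] == 1:
            raise ConnectionError("simulated outage")  # first attempt fails
        return f"archived:{value}"

    return archive


def orchestration(ctx: ReplayingWorkflow, message: str,
                  archive: Callable[[str], str]) -> str:
    # Task chaining: the output of the first activity feeds the second.
    declared = ctx.call_activity("DeclareToAuthority", declare, message)
    return ctx.call_activity("ArchiveCivicStructures", archive, declared)


def run_demo():
    history: List[Dict[str, Any]] = []
    archive = make_flaky_archive()
    first = ReplayingWorkflow(history)
    try:
        orchestration(first, "P1", archive)
    except ConnectionError:
        pass  # the run is interrupted after the first activity completed
    second = ReplayingWorkflow(history)
    result = orchestration(second, "P1", archive)
    return first.executed, second.executed, result


if __name__ == "__main__":
    print(run_demo())
    # (['DeclareToAuthority'], ['ArchiveCivicStructures'], 'archived:declared:P1')
```

The second run executes only the archive step, which is why the workflow code stays a simple chain and no restructuring is needed to resume from the point of failure.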

How about the Dr. Frankenstein approach?

And how about using the DAPR workflow as an orchestrator (therefore leveraging the event sourcing pattern) and LogicApps’ extensive library of pre-built adapters for seamless integration with various systems and services?

Will this approach combine the best of both worlds? Or will the limitations multiply, resulting in a messy outcome? I strongly believe the latter.

Conclusion

Who is the winner of this battle? Impossible to decide.

Choosing between visual workflows and workflows-as-code depends on the specific needs of your business and the complexity of your workflows. Visual workflows are ideal for moderately complex processes, offering rapid development and a production-ready observability and management experience (meaning better MTTR). On the other hand, workflows-as-code provide flexibility, extensibility, and better portability. Carefully evaluate your requirements, development skills, and future scalability to make an informed decision that aligns with your business goals.
