The Goal

When the UEFA Euro 2020 football tournament began two years ago, we decided to build an application in Microsoft Azure to replace the age-old tradition of using Microsoft Excel to register and track people’s predictions. This allowed everyone within our company to join in, make predictions and track each other’s scores on a leaderboard. We also included AI models that made their own predictions, to see whether people or AI were better at predicting the outcome of the games. The results were interesting: the AI performed better than expected, but in the end humans turned out to be a little better at making predictions, and one of our colleagues won the overall tournament.

See how this worked in the UEFA Euro 2020 football tournament.

We had a lot of fun building and using the application last time, and with the FIFA World Cup football tournament 2022 coming around, we decided to pick it up again and build a new version. The goal remains the same as last time: to create an application that lets people predict the outcome of games, keeps score, has an AI model join in with its own predictions, and declares a winner at the end. However, this time around the application is available not only to people within Codit, but to our customers too! A new leaderboard has been introduced where organizations can see how they stack up against each other. So let the games begin!

The Architecture

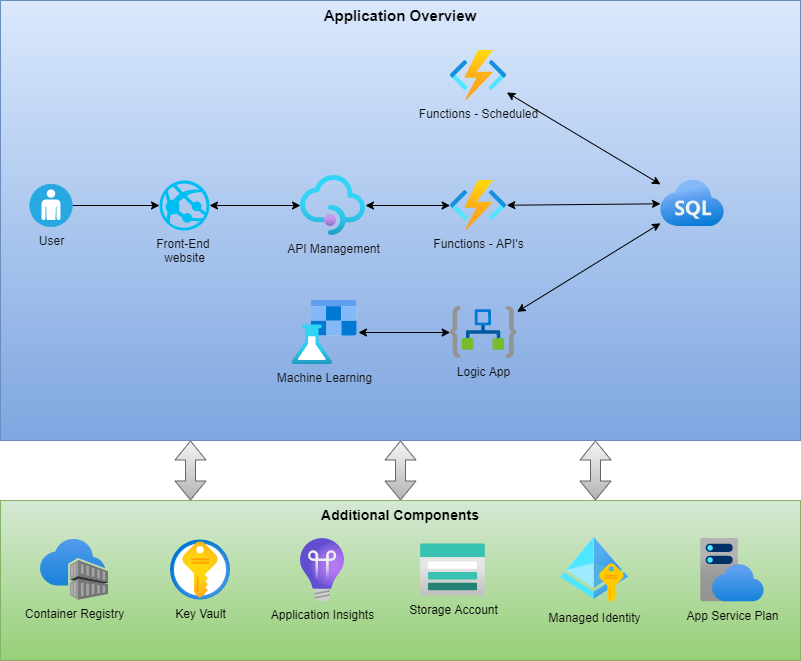

As we had built the application before, we had a good point from which to start. We were able to re-use most of it, but decided to make some additions and changes as well. The components remained largely the same:

- A front-end website where users can see the schedule, add their predictions, view the result of games and their predictions, and see a leaderboard with users from their organization as well as one that shows all organizations that are participating and the average score of their users.

- APIs that deliver the necessary business functionality to the front-end and keep the front-end separate from the back-end.

- A back-end database where we store information about teams, games, users, predictions and points scored.

- AI models which predict the outcome of games.
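To make the two leaderboards concrete, here is a minimal sketch of how they could be derived from per-user totals. It is an illustration only, not the application's actual code: the `UserScore` type, the function names, the tie-breaking rule and the sample organization "Contoso" are all assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class UserScore:
    # Hypothetical shape of a row in the "points scored" data.
    user: str
    organization: str
    points: int


def user_leaderboard(scores, organization):
    """Users of one organization, highest points first (ties broken by name)."""
    members = [s for s in scores if s.organization == organization]
    return sorted(members, key=lambda s: (-s.points, s.user))


def organization_leaderboard(scores):
    """(organization, average points) pairs, highest average first."""
    points_by_org = defaultdict(list)
    for s in scores:
        points_by_org[s.organization].append(s.points)
    averages = [(org, mean(points)) for org, points in points_by_org.items()]
    return sorted(averages, key=lambda item: (-item[1], item[0]))


# Made-up sample data, purely for illustration.
scores = [
    UserScore("alice", "Codit", 12),
    UserScore("bob", "Codit", 8),
    UserScore("carol", "Contoso", 11),
]
```

Ranking organizations by the average rather than the sum keeps the comparison fair between organizations with different numbers of participants.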

We made some small changes to the overall architecture and finally decided on the following:

As shown in the diagram above, we use HTTP-triggered Azure Functions to host our APIs. Time-triggered Azure Functions periodically retrieve the game schedule and game results, and calculate the points each user has earned. The HTTP-triggered Azure Functions are exposed through Azure API Management (APIM), where we also implement authentication and caching of GET requests. The front-end uses the APIM layer to call the APIs.
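The points calculation that runs on a timer can be pictured roughly as follows. This post does not describe the scoring rules, so the ones used here (3 points for an exact score, 1 point for the correct outcome, 0 otherwise) are assumptions chosen for illustration, as are all the names in the sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Score:
    home: int
    away: int


# Assumed scoring rules; the real application may use different values.
EXACT_SCORE_POINTS = 3
CORRECT_OUTCOME_POINTS = 1


def outcome(score):
    """Return 1 for a home win, 0 for a draw, -1 for an away win."""
    return (score.home > score.away) - (score.home < score.away)


def points_for(prediction, result):
    """Points a single prediction earns against the final result."""
    if prediction == result:
        return EXACT_SCORE_POINTS
    if outcome(prediction) == outcome(result):
        return CORRECT_OUTCOME_POINTS
    return 0


def total_points(predictions, results):
    """Sum points over all games that already have a result.

    Both arguments map a game id to a Score; games that have not
    been played yet are simply skipped.
    """
    return sum(
        points_for(prediction, results[game_id])
        for game_id, prediction in predictions.items()
        if game_id in results
    )
```

Because games without a result are skipped, a job like this can safely be re-run on every timer tick and will pick up new results as they arrive.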

Next to that, we have the Machine Learning workspace where we host the AI model. A scheduled Logic App calls the AI model’s endpoint to retrieve its predictions and inserts them into the back-end database.
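In the real application this step is a Logic App, not code, but the flow it performs can be sketched in a few lines: fetch the model's predictions, then write them to the database so that a re-run does not create duplicates. Everything below is hypothetical: the table layout, the `ai-model` predictor name and the shape of the fetched data are assumptions, and an in-memory SQLite database stands in for the actual back-end database.

```python
import sqlite3


def create_schema(conn):
    """Create a minimal, assumed predictions table."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS predictions (
            game_id    INTEGER NOT NULL,
            predictor  TEXT    NOT NULL,
            home_goals INTEGER NOT NULL,
            away_goals INTEGER NOT NULL,
            PRIMARY KEY (game_id, predictor)
        )
        """
    )


def store_ai_predictions(conn, fetch_predictions, predictor="ai-model"):
    """Fetch predictions and upsert them; returns the number of rows written.

    fetch_predictions is any callable returning a list of dicts with
    game_id, home_goals and away_goals keys. It stands in for the call
    to the AI model's endpoint.
    """
    rows = [
        (p["game_id"], predictor, p["home_goals"], p["away_goals"])
        for p in fetch_predictions()
    ]
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO predictions VALUES (?, ?, ?, ?)", rows
        )
    return len(rows)


conn = sqlite3.connect(":memory:")
create_schema(conn)
store_ai_predictions(
    conn, lambda: [{"game_id": 1, "home_goals": 2, "away_goals": 0}]
)
```

Keying the table on game and predictor means a scheduled run that sees an updated prediction overwrites the earlier one instead of adding a second row.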

Improvements

One of the big improvements we wanted to implement is the use of automated pipelines to build and deploy our components. We are now using the newest technology and best practices to build and deploy the application. For example, we are using YAML for our pipelines, centralized Bicep modules in our Bicep templates to define our resources and gain maximum re-usability, the Azure Resource Manager Template Toolkit to lint our Bicep templates, the Spectral CLI to lint our OpenAPI files and GitLeaks to detect and prevent hardcoded secrets in our code. A big improvement indeed, and well worth the effort!

We also wanted to improve our Azure Functions by bringing the code up to date and moving to Azure Functions v4 and .NET 6.0. Fortunately, there is a great Arcus template for creating HTTP-triggered Azure Functions, which saved us loads of time and made sure we use the latest standards and functionality!

We also moved from zip deployments to using a containerized approach for our Azure Functions. Why did we do this? Gillian can explain it much better than I can in this blog post.

The front-end application has also been completely rewritten using Next.js, making it better, faster and easier to use than last time.

Opening The Application Up To External Organizations

Since we also want to give our customers the opportunity to join in the fun, we needed to implement a way to allow their users to log in as well. One option was to create a simple username and password-based authentication, but we decided to take this a step further and implement Azure Active Directory B2C. This allows our customers to use their own Azure Active Directory users to log in to the front-end of our application and use their own tokens to authenticate towards the APIs. Not only does this give us better security, but from a user’s perspective it is also much easier, as they can log in using their organization’s credentials. Some great technology that gives us exactly what we need!

Upcoming

Exciting times are ahead. The tournament starts on the 20th of November and our first customers have already been onboarded and are ready to join.

In the coming weeks, we will be posting more blog posts about the technical details of the application, the AI models and how the AI predictions stack up against the predictions of our Codit colleagues and customers!

We look forward to seeing you then…⚽
