Third and final day of Integrate 2019

Scripting a BizTalk Server installation – Samuel Kastberg

The third day of the conference started with a session from Samuel Kastberg about scripting a BizTalk Server installation.

Scripting can provide a more structured installation and therefore a more predictable and reliable end result and a more stable environment. Additionally, scripting can reduce time and cost and help prepare for disaster recovery.

It was noted that what you script depends on the primary goal (e.g. creating development machines, streamlined tests, SQL jobs, etc.), the baseline (e.g. the use of 4 virtual machines, WS2016, etc.), and the time frame (is it worth scripting?). Keep in mind to script controllable candidates that provide constant and predictable behavior. Good candidates are the installations of Visual Studio, SQL Server, monitoring tools, etc. Bad candidates, on the other hand, are unpredictable or out of your control (e.g. the firewall) and shouldn’t be scripted.

Samuel Kastberg also shared some best practices:

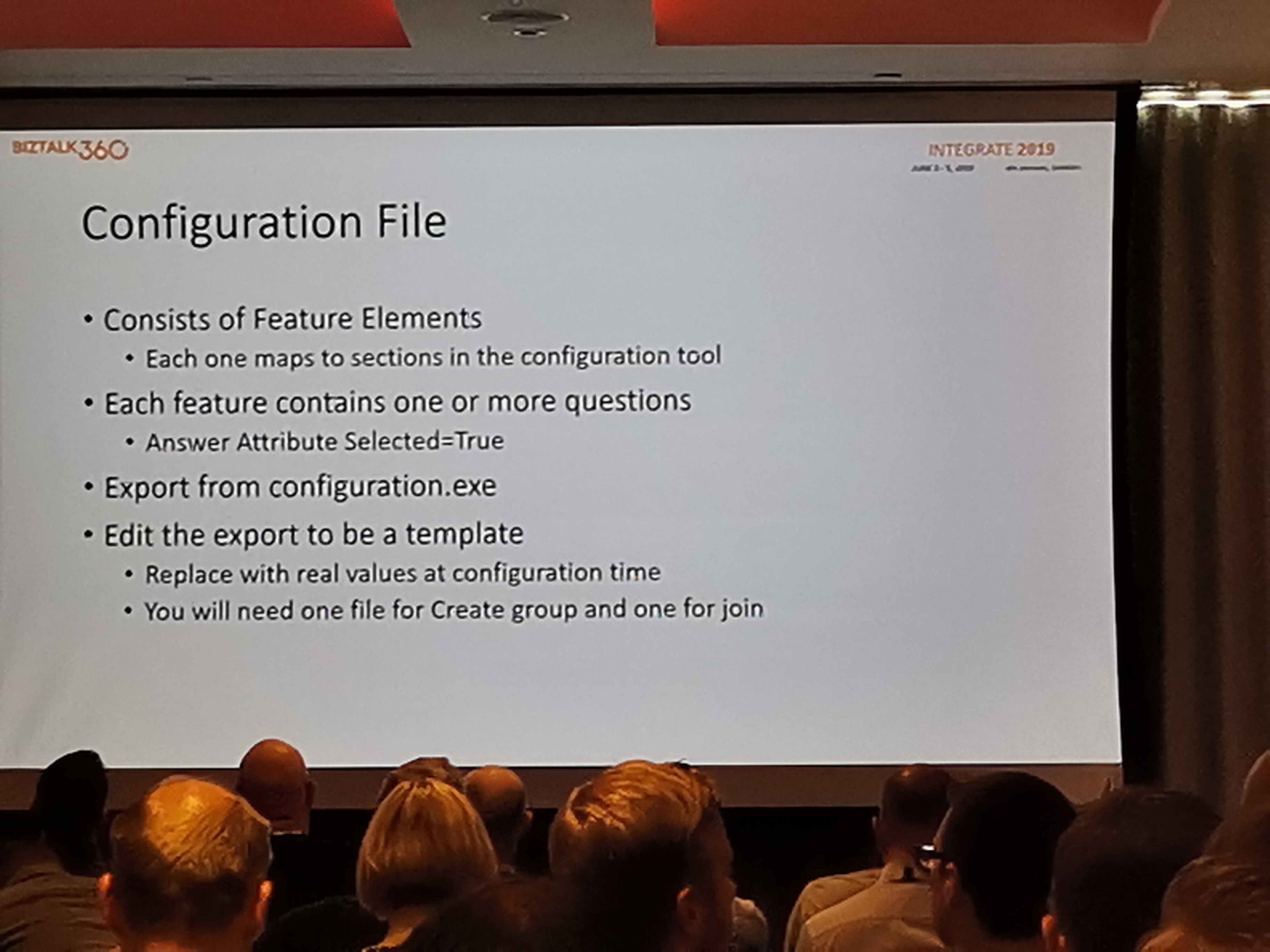

Finally, to keep a script (e.g. one using PowerShell providers) flexible, it can read a configuration file that contains environment settings such as feature elements, hosts, host instances, and handlers. By using placeholders/tokens within the configuration files for AD users, AD groups, passwords, etc., the same scripts can easily be reused for other environments. Passwords should be handled with care by using a Key Vault and/or KeePass.
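To make the placeholder idea concrete, here is a minimal sketch in Python (the session itself used PowerShell). The `${NAME}` token syntax, the XML fragment and all account names are assumptions made up for illustration; they are not taken from Samuel's scripts.

```python
import re

# Hypothetical token syntax ${NAME}; the talk did not prescribe a specific one.
TOKEN = re.compile(r"\$\{([A-Z0-9_]+)\}")


def render_config(template: str, values: dict[str, str]) -> str:
    """Replace every ${NAME} token and fail loudly when a value is missing."""
    found = {match.group(1) for match in TOKEN.finditer(template)}
    missing = sorted(found.difference(values))
    if missing:
        raise KeyError(f"no value for token(s): {', '.join(missing)}")
    return TOKEN.sub(lambda match: values[match.group(1)], template)


# Illustrative template fragment; element and attribute names are invented.
template = """<Host name="ProcessingHost" ntGroup="${AD_GROUP}">
  <Instance server="${SERVER}" user="${SERVICE_ACCOUNT}" />
</Host>"""

# One value set per environment; passwords are deliberately not stored here.
test_environment = {
    "AD_GROUP": "CONTOSO\\BTS-Hosts-Test",
    "SERVER": "BTS-TEST-01",
    "SERVICE_ACCOUNT": "CONTOSO\\svc-bts-test",
}

print(render_config(template, test_environment))
```

Failing on a missing token, rather than leaving it in the output, keeps a half-configured environment from slipping through unnoticed.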

An important note: scripting is not a replacement for documentation!

For more information:

https://github.somc/skastber/biztalkps

BizTalk Server Fast & Loud Part II: Optimizing BizTalk – Sandro Pereira

Sandro presented the second part of his Fast & Loud talk, about optimizing BizTalk Server solutions. He gave the first part of Fast & Loud at an earlier Integrate event.

A few general tips were given on how to analyse what you need from an environment. For instance, do you need a super-fast environment, or do you need an environment that can handle a lot of messages? The emphasis was on realizing that there is no perfect configuration for all needs. He demonstrated this with a car analogy, comparing a fast car with a truck.

The first real-life example was a manufacturing business. The business has a BizTalk process to put messages from a manufacturing plant into an SAP system. At that time, BizTalk Server was already processing over five million messages a month for the first manufacturing plant. That went pretty well, but trouble at the SQL Server level started to arise when a second manufacturing plant was built and integrated into the processes of the company. SQL Server was running at 90% CPU and RAM. The solution was to increase the amount of RAM and improve the CPU. If development teams know beforehand how much the message volume will increase, problems like this can be solved before they become critical.

The other real-life example was a migration project, in which a banking company was going from BizTalk 2004 to BizTalk 2016. The migration was going smoothly until testing a particular integration showed a much slower processing time: it went from 3 seconds to 90 seconds after the migration. There was no single problem causing the slowdown. The solution consisted of:

- Tweaking the polling interval for the MQ polling operation.

- Disabling Orchestration Dehydration for composite orchestrations. As Sandro mentioned, you should be careful with options like this, as you can run into memory issues.

- Editing the XLANG element in the BTSNTSvc.exe.config file to optimize memory usage.

- Improving MQ Agent recycle times in Windows.

- Setting up the priority in Send Ports.

- Setting up the priority in orchestrations.

Changing the game with Serverless solutions – Michael Stephenson

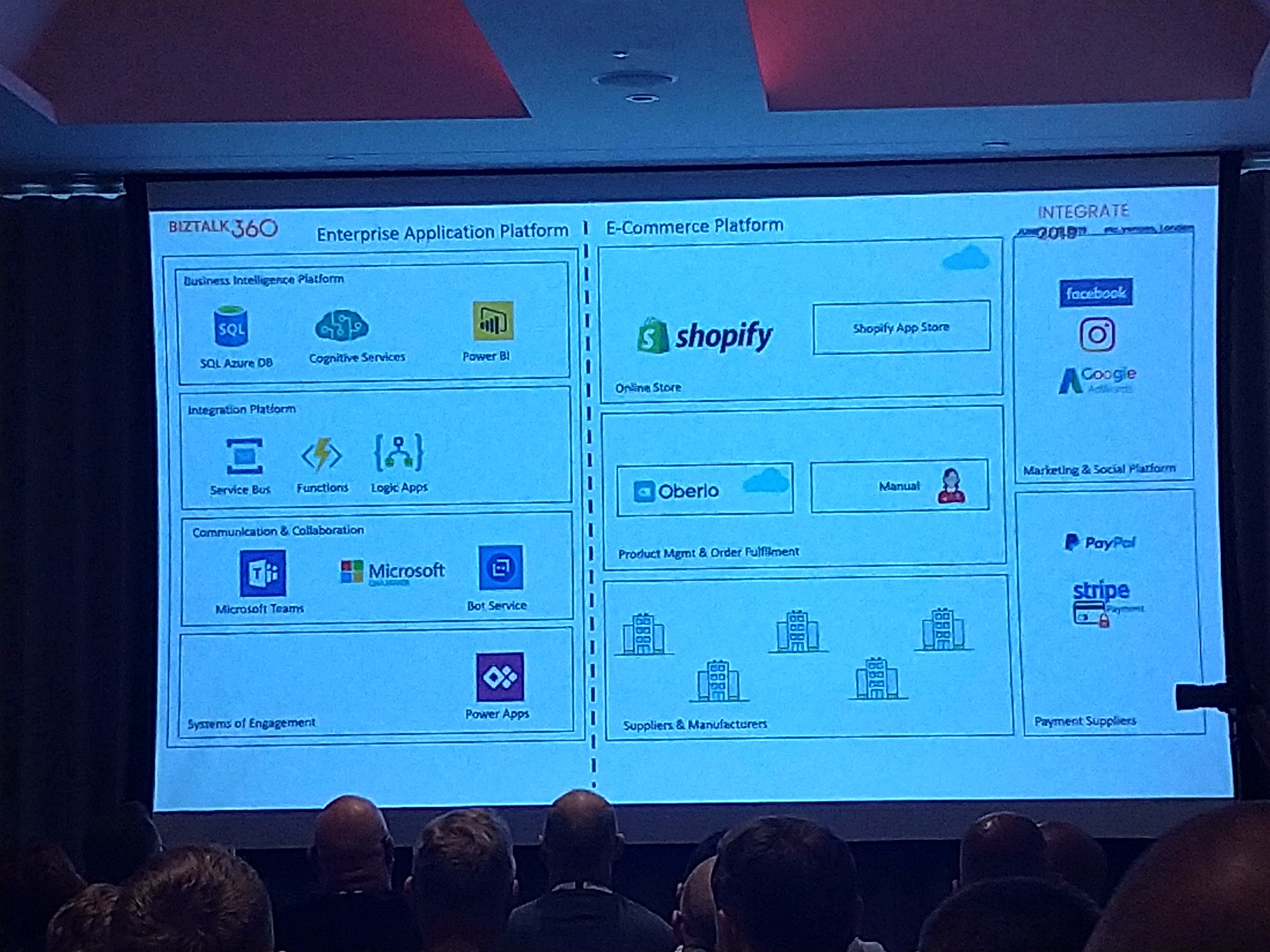

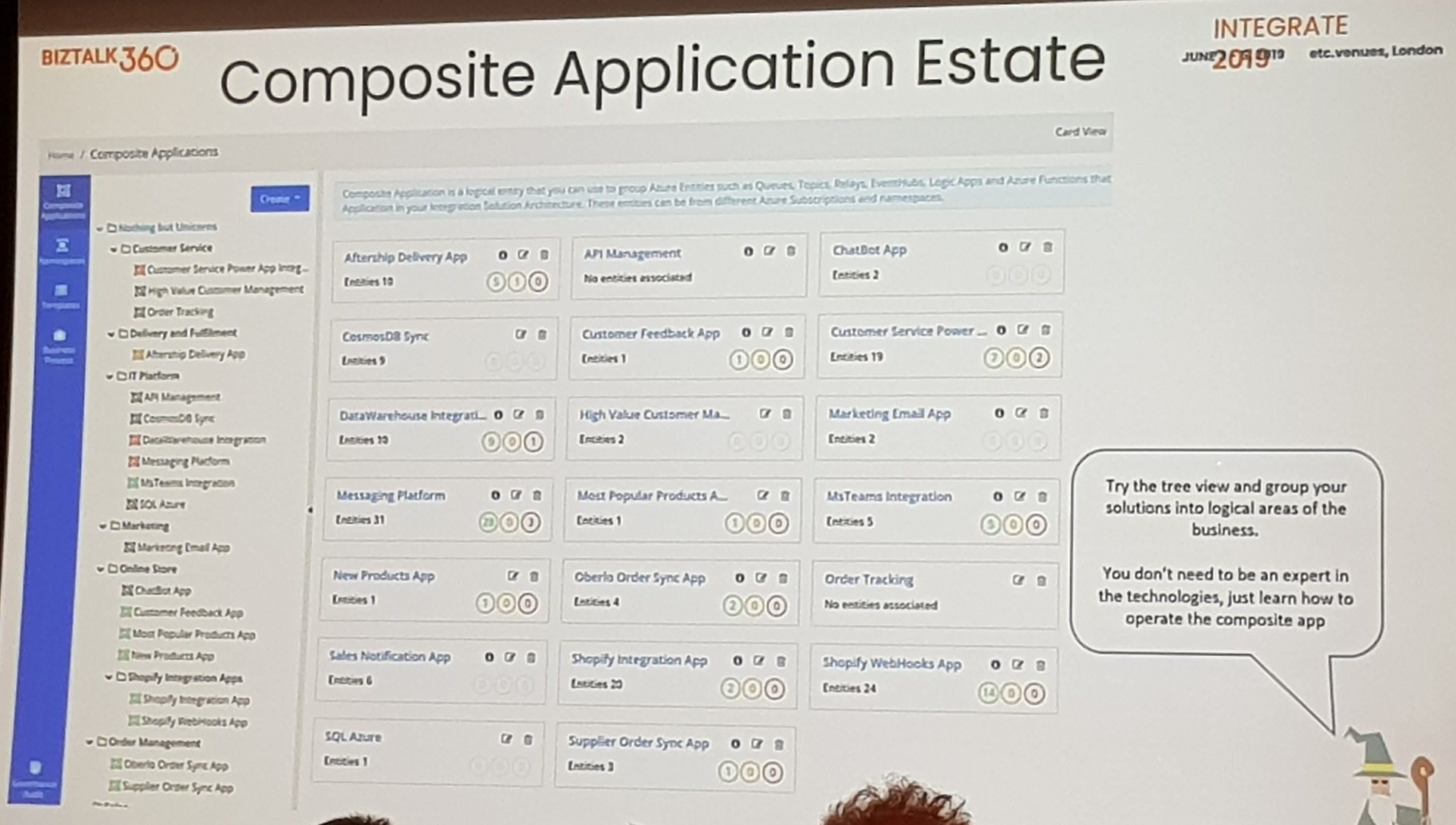

Michael started with his own unicorn web shop, Nothing but Unicorns, as an example. You can find the site here: https://nothingbutunicorns.com/. He built the shop using Shopify as the platform for the e-commerce side.

For tracking orders in the back office of the web shop, he leverages a few PowerApps in Dynamics 365. This helps with managing exceptions. Michael feels that even big companies can replicate their order tracking system in this way.

For support ticket tracking, Microsoft Teams is leveraged. This also comes in handy with the Power BI integration, so adding existing Power BI diagrams is a breeze.

Integration between the different systems is made possible by Azure Service Bus, Azure Functions and Logic Apps. Michael clearly demonstrated how a serverless structure built from these Azure components brings affordability & scalability to businesses.

Even for adding notes and to-dos, he extended the Shopify template for each sellable product with a button that lets you write a quick text message and send it to a Logic App. This shows how far he goes to make the administration experience as seamless as possible.

Michael demonstrated that back-end order processing can be integrated simply with Shopify, dispatch & parcel tracking companies, and Power BI to visualize orders. Webhooks provide an easy means of surfacing events that occur during the shopping process and of triggering Logic Apps that run back-end processes and integrations with other parties.
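The webhook idea can be sketched in a few lines of Python. In the talk the receiving side was a set of Logic Apps; here plain functions stand in for them. The handler names and payload fields are hypothetical, and the topic strings are only meant to resemble Shopify-style event names.

```python
from typing import Callable

Handler = Callable[[dict], str]

# Registry that maps a webhook topic to the back-end process it should start.
_handlers: dict[str, Handler] = {}


def on(topic: str) -> Callable[[Handler], Handler]:
    """Decorator that registers a handler for one webhook topic."""
    def register(handler: Handler) -> Handler:
        _handlers[topic] = handler
        return handler
    return register


@on("orders/create")
def start_fulfilment(payload: dict) -> str:
    # Stand-in for a Logic App that kicks off dispatch and parcel tracking.
    return f"fulfilment started for order {payload['id']}"


@on("orders/cancelled")
def cancel_dispatch(payload: dict) -> str:
    return f"dispatch cancelled for order {payload['id']}"


def dispatch(topic: str, payload: dict) -> str:
    """Route an incoming webhook call; unknown topics are ignored, not failed."""
    handler = _handlers.get(topic)
    if handler is None:
        return f"ignored {topic}"
    return handler(payload)


print(dispatch("orders/create", {"id": 1001}))
print(dispatch("products/update", {"id": 7}))
```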

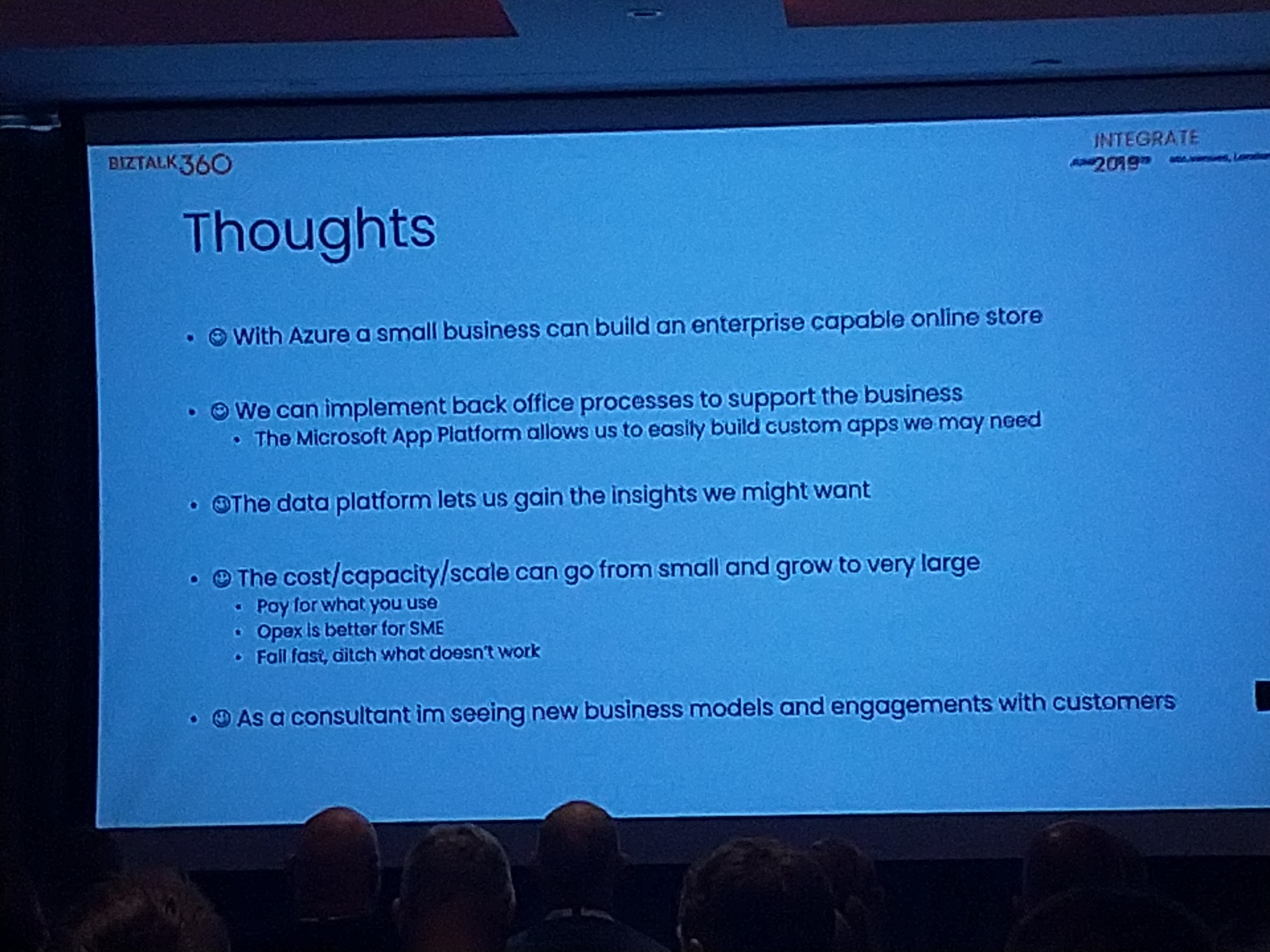

Key takeaways: it’s very easy to build an enterprise-capable online store using the Microsoft app platform. The data platform gives us insight into our processes. It offers a low entry cost and can scale up considerably when needed.

Lowering the total cost of ownership of your Serverless solution with Serverless360 – Michael Stephenson

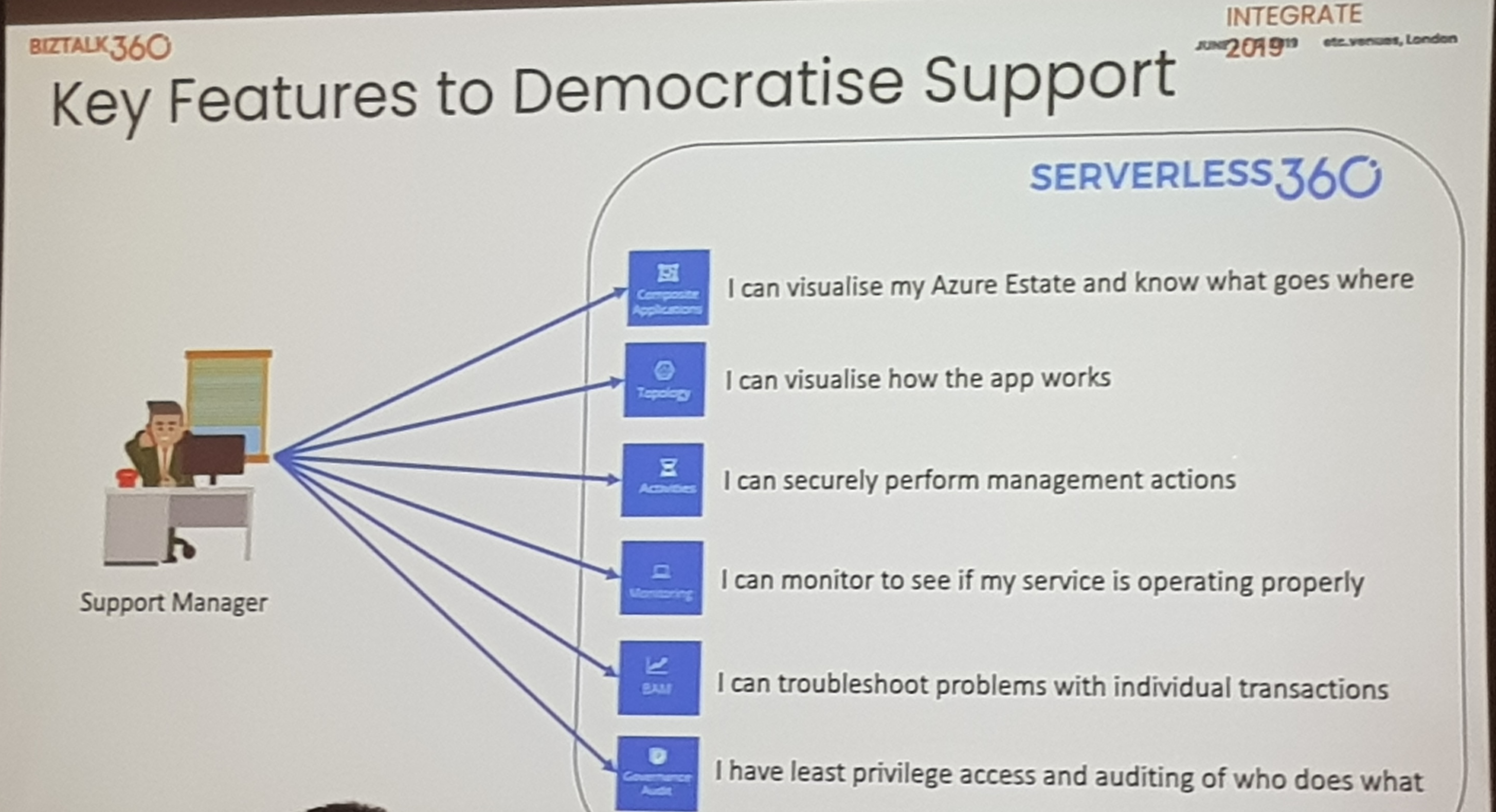

Michael walked us through the challenges industries are facing due to a lack of people skilled in the newer Azure technologies. He emphasized choosing the right tooling to reduce the severity of this challenge.

Michael explained and demonstrated how Serverless360 can be used to administer an Azure solution from outside the Azure portal. Serverless360 has a unique Business Activity Monitoring feature, which helps the organization’s front desk track message flows and facilitates root cause analysis of failures. Enabling first and second line support staff frees up more skilled resources, so they can focus on developing new features.

He also announced that Atomic Scope is now part of Serverless360 and supports integrations in Azure and also Hybrid Integrations.

Serverless360 provides a way to visualize your Azure estate and how your app works. It provides a secure environment for performing management actions and for checking whether your services are running properly. It enables troubleshooting of individual transactions, operates on the principle of least privilege access and audits who does what.

Adventures of building a multi-tenant PaaS on Microsoft Azure – Tom Kerkhove

At Codit, we’ve been building several cloud-native Azure solutions and platforms for our customers in Europe. In this session, Tom shared some of his (and our) insights and key takeaways from building PaaS solutions on Microsoft Azure.

There are many things to consider when implementing scalable solutions (especially when auto-scaling is enabled). The most important takeaway on scaling is to set maximum scaling limits and to have near-real-time insight into the scaling actions that happen, together with their impact on the cost of the solution. Tom shared that this insight was especially hard to get with Azure Functions, even though Azure Functions does everything for you.
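As a rough illustration of why an upper limit matters, the sketch below clamps a scaling decision between a minimum and a maximum and reports the resulting hourly cost. The queue-length rule and the flat price per instance are assumptions for the example only; they do not describe how Azure scales or bills.

```python
from dataclasses import dataclass


@dataclass
class ScalePolicy:
    min_instances: int
    max_instances: int
    hourly_cost_per_instance: float  # assumed flat price, illustration only

    def decide(self, queue_length: int, messages_per_instance: int) -> int:
        """Return the instance count for the backlog, clamped to the limits."""
        wanted = -(-queue_length // messages_per_instance)  # ceiling division
        return max(self.min_instances, min(self.max_instances, wanted))

    def hourly_cost(self, instances: int) -> float:
        return instances * self.hourly_cost_per_instance


policy = ScalePolicy(min_instances=1, max_instances=10, hourly_cost_per_instance=0.20)

for backlog in (0, 250, 5000):
    instances = policy.decide(backlog, messages_per_instance=100)
    # Logging each decision with its cost gives the near-real-time insight
    # that the session called for.
    print(backlog, instances, policy.hourly_cost(instances))
```

Without `max_instances`, the backlog of 5000 messages would ask for 50 instances; with it, the cost stays bounded and the backlog simply takes longer to drain.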

Multi-tenancy is another challenge that we’ve faced in several of our projects. The trade-off between full isolation, flexibility in deployment and cost is hard to make, but should ideally be decided upfront. Introducing multi-tenancy in an existing architecture is much harder than starting with it from the beginning. Sharding with SQL Database Elastic Pools is the scenario that we use quite often. An important takeaway there is to constrain resources (DTU) per tenant, or even to introduce different tiers (and have customers pay more) for heavy-weight customers. Directing requests to the right tenant was explained using either an API Management policy or a cached Key Vault lookup.
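The cached lookup can be sketched as a small time-to-live cache in front of whatever call fetches the tenant's connection details. In this sketch the `lookup` callable stands in for a Key Vault read; the class name, the default TTL and the fake connection strings are all assumptions, not code from the session.

```python
import time
from typing import Callable


class CachedTenantResolver:
    """Caches tenant lookups for a limited time to avoid a vault call per request."""

    def __init__(
        self,
        lookup: Callable[[str], str],
        ttl_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._lookup = lookup      # stand-in for the secret store call
        self._ttl = ttl_seconds
        self._clock = clock        # injectable so expiry can be tested
        self._cache: dict[str, tuple[float, str]] = {}

    def resolve(self, tenant_id: str) -> str:
        now = self._clock()
        hit = self._cache.get(tenant_id)
        if hit is not None and hit[0] > now:
            return hit[1]          # still fresh: no lookup needed
        value = self._lookup(tenant_id)
        self._cache[tenant_id] = (now + self._ttl, value)
        return value


def fake_vault_lookup(tenant_id: str) -> str:
    # Hypothetical secret value; a real lookup would call the secret store.
    return f"Server=shard-for-{tenant_id};Database={tenant_id}"


resolver = CachedTenantResolver(fake_vault_lookup, ttl_seconds=60)
print(resolver.resolve("tenant-a"))  # first call hits the lookup
print(resolver.resolve("tenant-a"))  # second call is served from the cache
```

The TTL is the design knob: a longer value saves lookups, a shorter one picks up rotated secrets or moved tenants sooner.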

When designing an Azure PaaS architecture, a lot of different services are commonly used, resulting in a best-of-breed design. This means that monitoring the different components in that solution (which are commonly loosely coupled) is very important. The most important takeaway is that the development team should use their own operational tools, so that they know the solution that will be operated by the managed services team can be monitored and followed. To achieve that, correlating events and enriching telemetry is of the utmost importance.

All of the above leads to the conclusion that enterprises (and their partners) have to make a shift in project approach. Instead of a traditional project approach, cloud solutions will need to be handled much more in a product-oriented way. And designing a solution that runs on a cloud platform that constantly evolves and changes will force us to build architectures that are ready for change, because change is coming.

Microsoft Integration, the Good the Bad and the Chicken Way – Nino Crudele

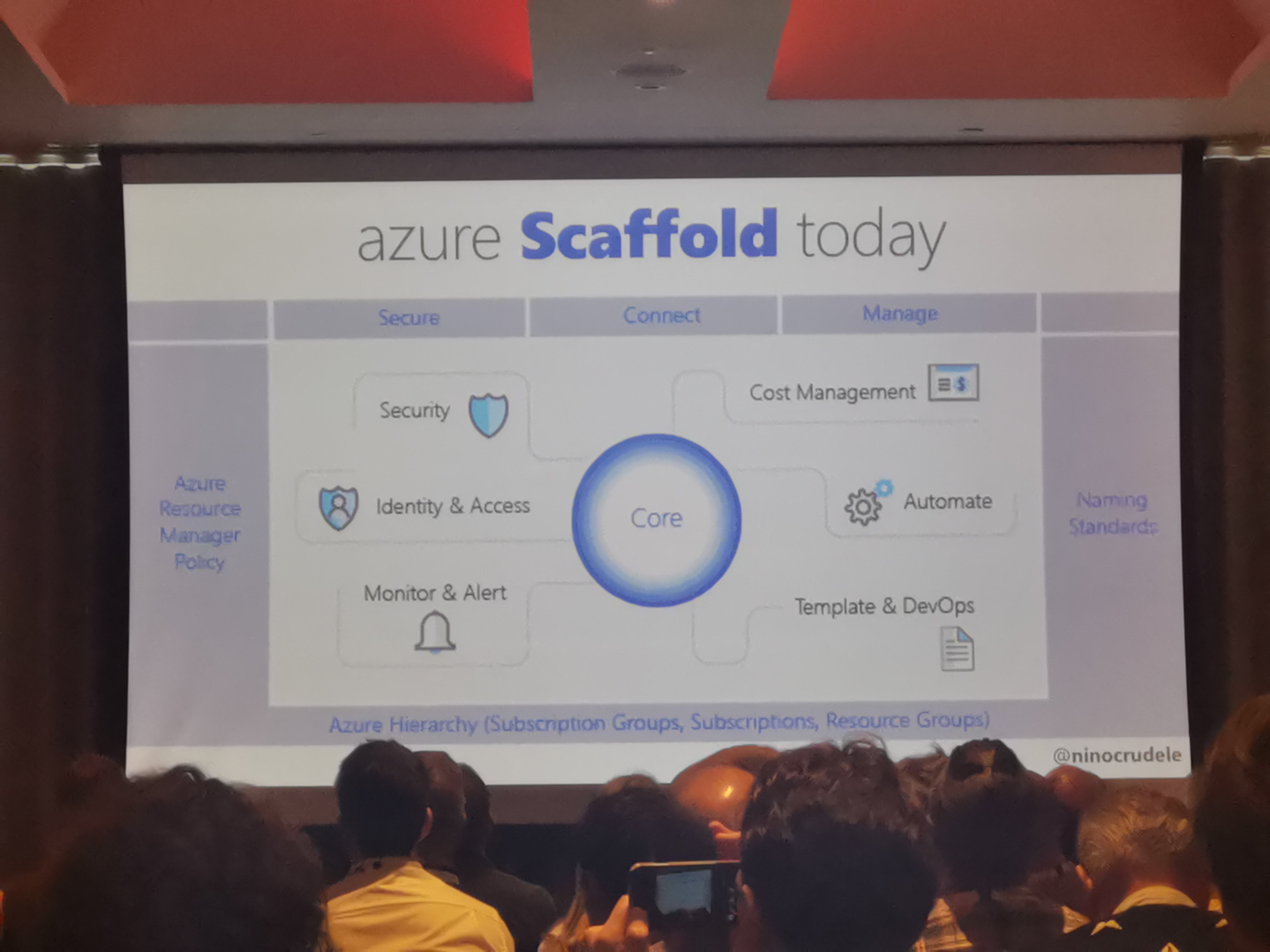

In this session Nino Crudele guided us through the pros and cons of integration using Azure. He emphasized the importance of governance inside Azure; governance is also a part of the Azure Scaffold.

He then walked us through good and bad approaches to use Azure:

- Within Azure Governance you are able to become a global admin, but that should be avoided. A better approach is to use Privileged Identity Management, to avoid the security exposure of a permanent admin.

- Management groups can provide permissions, policies and cost information for subscriptions, resource groups and tags.

- Understand the price sheet, which can be retrieved from the portal, to obtain possible discounts.

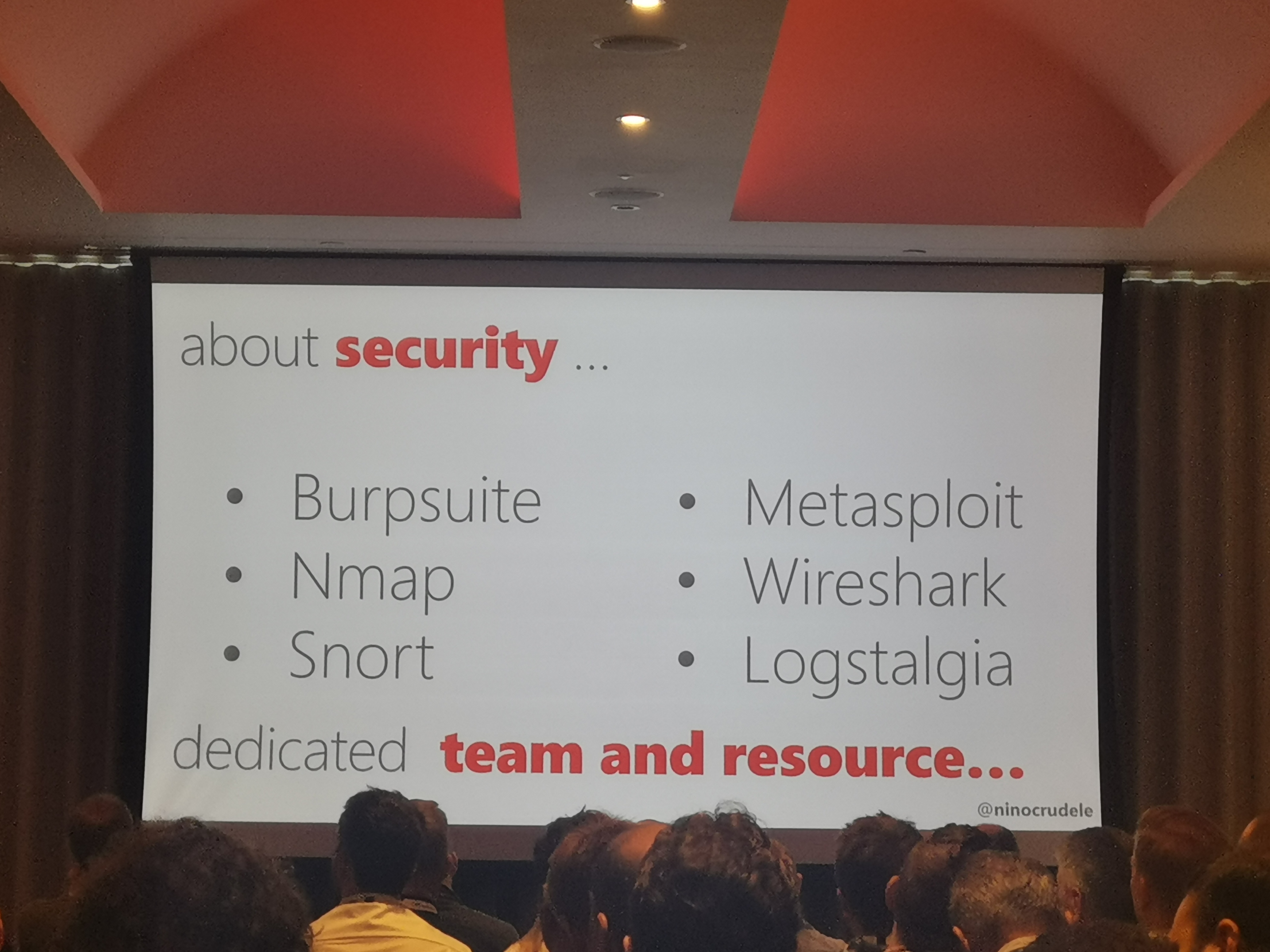

- Azure security was also pinpointed by Nino as an area of concern. He suggested using various tools to identify security threats inside your Azure environment.

- Networking is the core of Azure. It is therefore important to keep track of IP schemas and use centralized firewalling/security. Still, you will always expose some parts of Azure to the public, which makes them more vulnerable to attacks.

- Use a naming standard in Azure.

- Hashing integrity in your infrastructure

- Tooling that can be used for documentation (see: https://www.cloudockit.com/samples)

He concluded by saying that you should avoid the chicken way when building new stuff, and rather face the change.

More info on https://www.aziverso.com

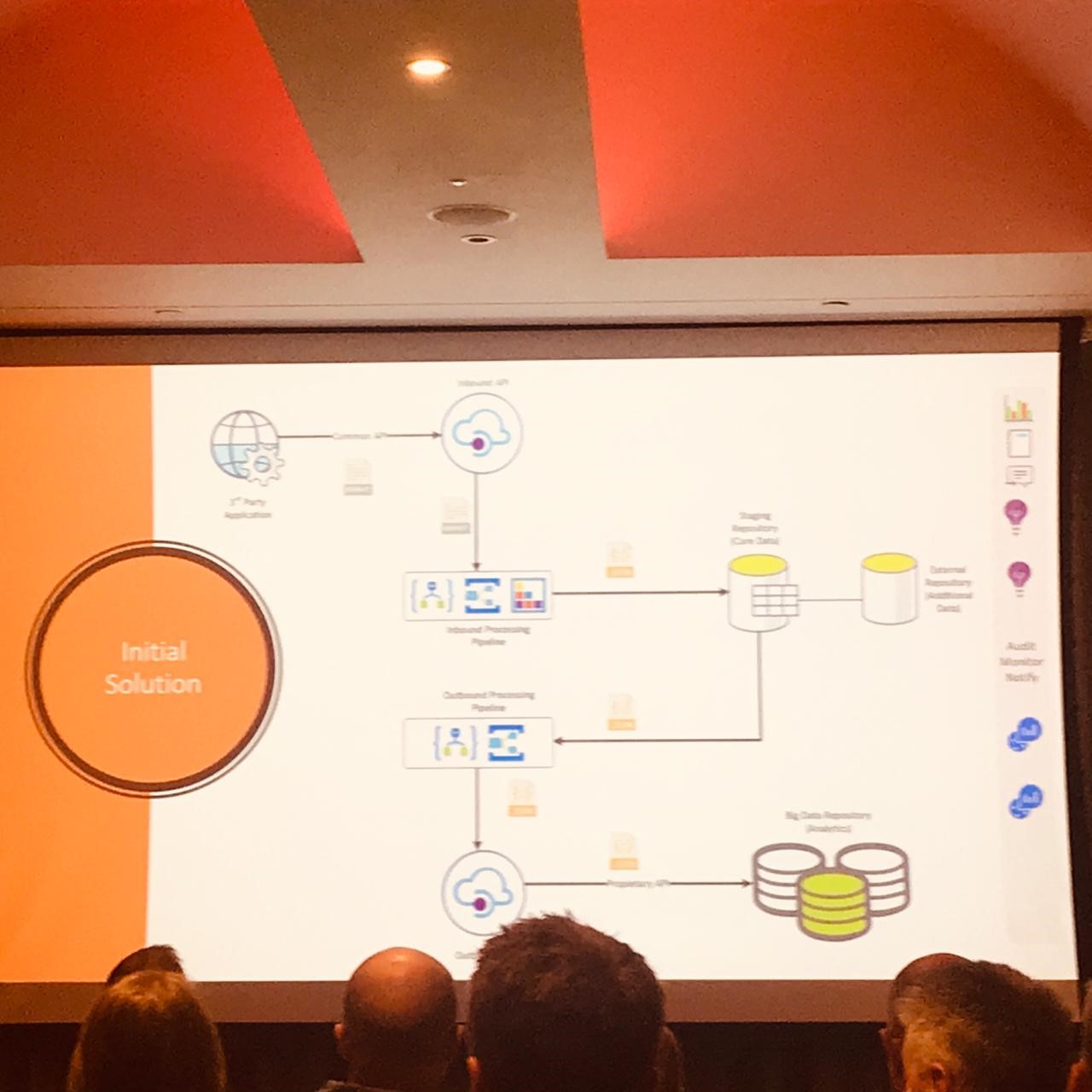

Creating a Processing Pipeline with Azure Function and Azure Integration Services – Wagner Silveira

Wagner Silveira started his session by describing how to create a processing pipeline with Azure Functions and Azure Integration Services. The session continued with a case study of a Government agency solution, covering the initial design and how the updated design differs from it.

The requirements for the Government agency solution were:

- Onboarding of external EDI messages

- Should allow for different transports

- Messages need to be validated, translated, enriched and routed

- Control over retries and notifications for failed messages

- Auditing of initial messages

- Visibility of where a single message was while in the process

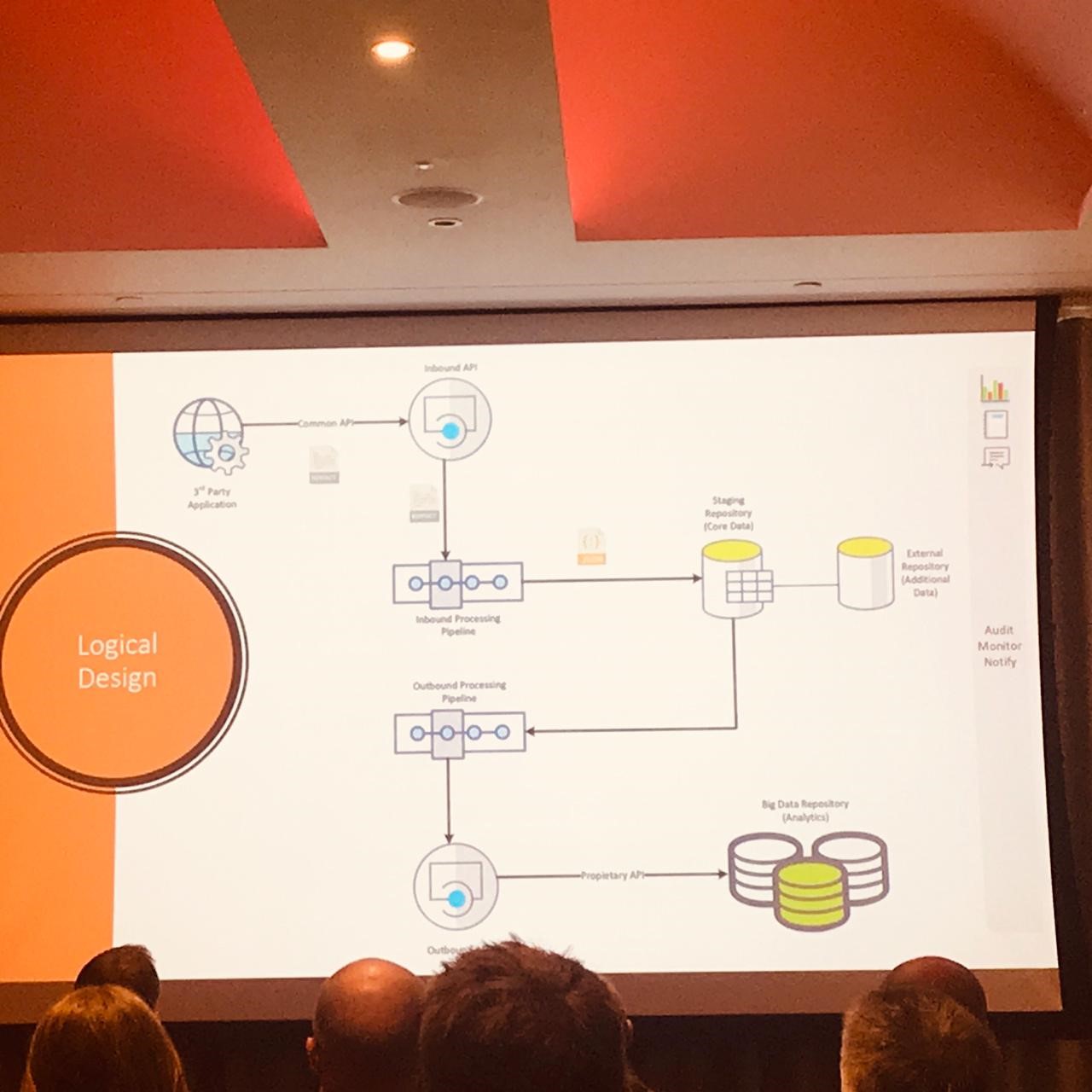

The Logical Design of the Government agency solution:

The Initial Design of the Government agency solution

Reality check and issues while creating the initial design of the Government agency solution:

- Big payloads

- EDIFACT schema not available

- Operational costs

- End to end monitoring

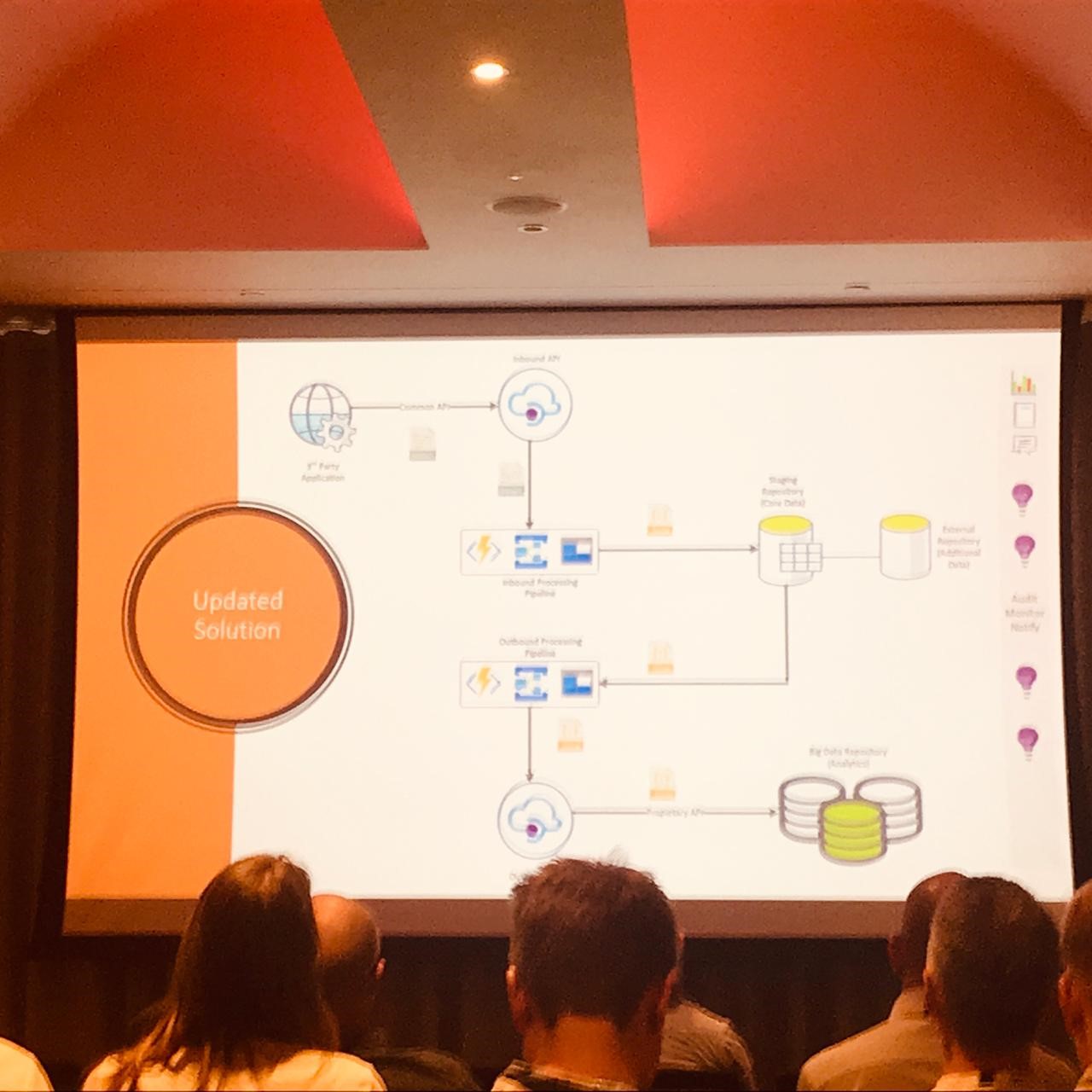

The Updated Design of the Government agency solution:

Wagner explained what changed from the initial design to the updated design:

1. Azure Functions

- EDIFACT support via a .NET package

- Claim Check Pattern

- Dedicated instances

2. Azure Storage

- Payload Storage

3. Application Insights

- End to end custom events

- Single technology for monitoring and notification

Key Components

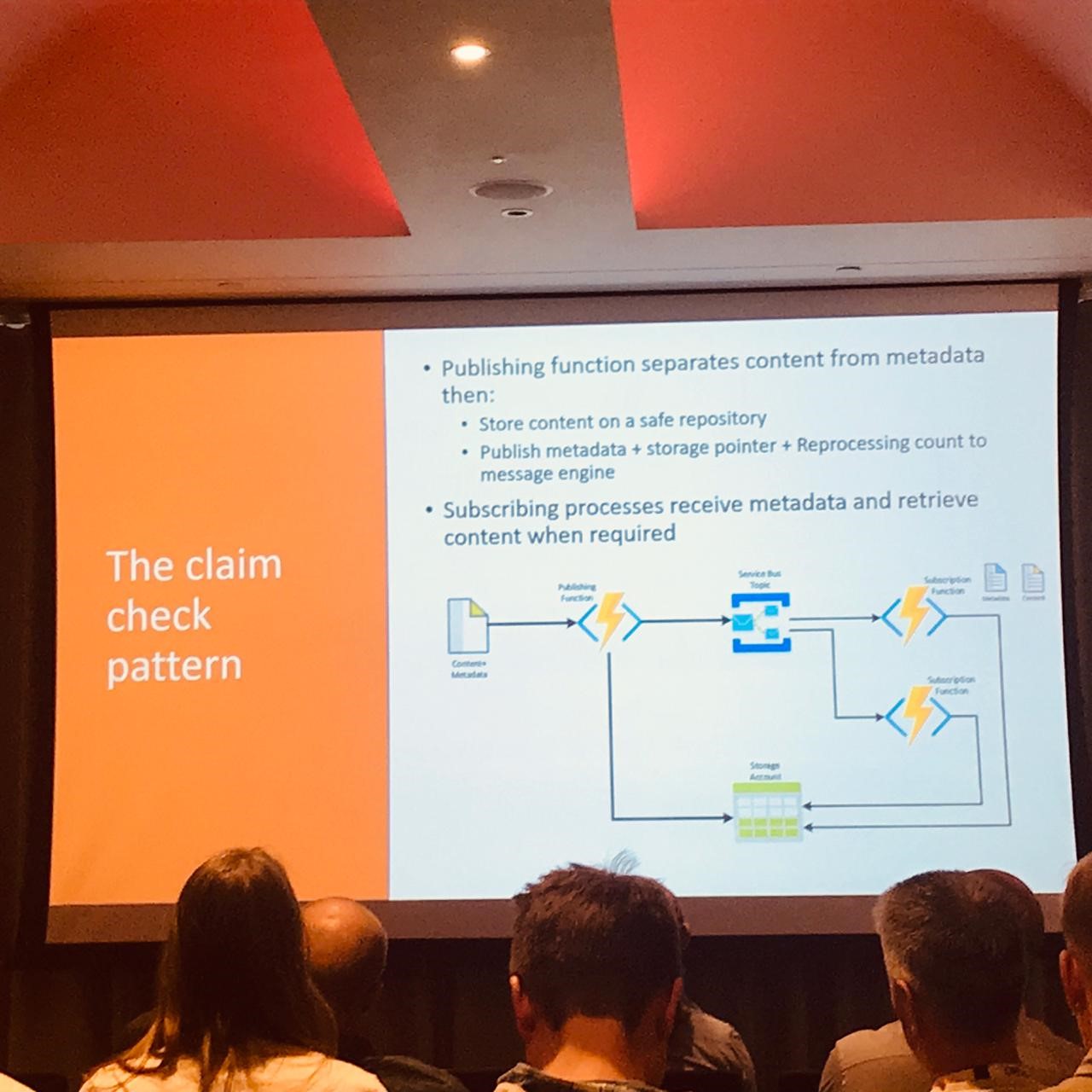

1. The Claim Check Pattern

2. App Insights Custom Events

- End to end traceability using a correlation ID

- Allow for exceptions to be captured in the same way

- Leverage Functions support for App Insights

- Implemented as reusable components
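The custom-event idea can be illustrated with a small reusable component that stamps every event and every exception with the same correlation ID. This is a sketch only: the class, the event names and the in-memory sink are hypothetical, and a real implementation would send the events to Application Insights instead of a list.

```python
import uuid
from datetime import datetime, timezone
from typing import Callable


class PipelineTelemetry:
    """Reusable component that records events and exceptions in one shape."""

    def __init__(self, sink: Callable[[dict], None]) -> None:
        self._sink = sink  # stand-in for the real telemetry client

    def _emit(self, kind: str, name: str, correlation_id: str, properties: dict) -> None:
        self._sink({
            "type": kind,
            "name": name,
            "correlation_id": correlation_id,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "properties": properties,
        })

    def track_event(self, name: str, correlation_id: str, **properties: str) -> None:
        self._emit("event", name, correlation_id, properties)

    def track_exception(self, stage: str, correlation_id: str, error: Exception) -> None:
        # Exceptions are captured the same way as events, as noted above.
        self._emit("exception", stage, correlation_id, {"error": repr(error)})


events: list[dict] = []
telemetry = PipelineTelemetry(events.append)

correlation_id = str(uuid.uuid4())  # one ID follows the message end to end
telemetry.track_event("MessageReceived", correlation_id, transport="sftp")
telemetry.track_event("MessageValidated", correlation_id)
telemetry.track_exception("Translate", correlation_id, ValueError("unknown segment"))

# Filtering on the correlation ID shows where a single message has been.
trace = [e["name"] for e in events if e["correlation_id"] == correlation_id]
print(trace)
```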

Exception Management & Retries on Functions:

- Catch blocks using a notify and throw pattern

- Leverage the peek-lock pattern of the Functions Service Bus binding

- Adjusted Maximum Delivery Count on SB
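How these three points interact can be shown with an in-memory simulation. It does not use the Service Bus SDK or the Functions runtime; `pump` only imitates peek-lock behaviour (a failed message becomes visible again until its delivery count reaches the maximum, then it is dead-lettered), and all names are made up for the example.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass
class Message:
    body: str
    delivery_count: int = 0


def notify_and_throw(
    handler: Callable[[Message], None],
    notify: Callable[[Message, Exception], None],
) -> Callable[[Message], None]:
    """Wrap a handler so failures are reported and then re-raised."""
    def wrapped(message: Message) -> None:
        try:
            handler(message)
        except Exception as error:
            notify(message, error)  # notify first ...
            raise                   # ... then throw, so the message is retried
    return wrapped


def pump(queue: deque, dead_letters: list, handler, max_delivery_count: int) -> None:
    """Simulated peek-lock receiver with a maximum delivery count."""
    while queue:
        message = queue.popleft()          # message is "locked"
        message.delivery_count += 1
        try:
            handler(message)               # success completes the message
        except Exception:
            if message.delivery_count >= max_delivery_count:
                dead_letters.append(message)
            else:
                queue.append(message)      # abandoned: visible again


def always_fails(message: Message) -> None:
    raise ValueError(f"cannot process {message.body}")


notifications: list[str] = []
queue = deque([Message("order-42")])
dead_letters: list[Message] = []

handler = notify_and_throw(always_fails, lambda m, e: notifications.append(str(e)))
pump(queue, dead_letters, handler, max_delivery_count=3)
print(len(notifications), [m.body for m in dead_letters])
```

Because the wrapper re-raises, the retry and dead-letter behaviour stays with the messaging layer, while the notification still happens on every failed attempt.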

DLQ Management

- Logic Apps polling subscription DLQ every 6 hours

- Each Subscription DLQ could have its own logic

- Reprocessing count

- Resubmission logic

- Notification Logic

- Email Notification

- Error blob storage
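The per-subscription logic listed above could look roughly like this. It is a sketch under assumptions: the message is a plain dictionary, `reprocess_count` is a hypothetical property name, and the `resubmit`, `notify` and `store_error` callables stand in for the Logic App actions (resubmission, email notification and error blob storage).

```python
from typing import Callable


def handle_dead_letter(
    message: dict,
    max_resubmits: int,
    resubmit: Callable[[dict], None],
    notify: Callable[[str], None],
    store_error: Callable[[dict], None],
) -> str:
    """Resubmit a dead-lettered message a limited number of times, then park it."""
    count = message.get("reprocess_count", 0)
    if count < max_resubmits:
        # Carry the counter along so the next pass knows how often we tried.
        resubmit({**message, "reprocess_count": count + 1})
        return "resubmitted"
    store_error(message)  # keep the failed message for later inspection
    notify(f"message {message['id']} failed after {count} resubmissions")
    return "parked"


resubmitted: list[dict] = []
emails: list[str] = []
error_store: list[dict] = []

fresh = {"id": "m-1", "body": "UNH+1+ORDERS"}
print(handle_dead_letter(fresh, 2, resubmitted.append, emails.append, error_store.append))

exhausted = {"id": "m-2", "body": "UNH+2+ORDERS", "reprocess_count": 2}
print(handle_dead_letter(exhausted, 2, resubmitted.append, emails.append, error_store.append))
```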

Lessons learned

- Review the fine print

- Operational Cost is a design consideration

- Make the best of each technology

- Think about the big picture

The session ended with a great demo of the claim check pattern using Azure Functions and Application Insights.
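For readers who did not see the demo, the claim check pattern can be summarized in a short sketch: the big payload goes into storage, and only a small reference (the claim) travels through the queue. The code below uses an in-memory dictionary and list instead of Azure Storage and Service Bus, and every name in it is an assumption for illustration; it is not the code from the demo.

```python
import uuid


class PayloadStore:
    """In-memory stand-in for blob storage."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, payload: bytes) -> str:
        claim = str(uuid.uuid4())  # the claim is just a unique blob name
        self._blobs[claim] = payload
        return claim

    def get(self, claim: str) -> bytes:
        return self._blobs[claim]


def send(payload: bytes, store: PayloadStore, queue: list, correlation_id: str) -> None:
    """Store the payload and enqueue only a small message that references it."""
    claim = store.put(payload)
    queue.append({"correlation_id": correlation_id, "claim": claim, "size": len(payload)})


def receive(store: PayloadStore, queue: list) -> tuple[str, bytes]:
    """Take the next message and redeem its claim for the full payload."""
    message = queue.pop(0)
    return message["correlation_id"], store.get(message["claim"])


store = PayloadStore()
queue: list[dict] = []

big_payload = b"UNB+UNOA:1+SENDER+RECEIVER'" * 50_000  # far too big for a queue message
send(big_payload, store, queue, correlation_id="demo-001")

print(queue[0]["size"], "bytes stored,", len(str(queue[0])), "characters on the queue")
correlation_id, payload = receive(store, queue)
print(correlation_id, payload == big_payload)
```

The queue message stays small regardless of payload size, which is how the pattern addresses the big payloads issue from the reality check.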

Thank you for reading our blog post. Feel free to comment or give us feedback in person.

Bart Defoort, Charles Storm, Erwin Kramer, Francis Defauw, Gajanan Kotgire, Jonathan Gurevich, Kapil Baj, Martin Peters, Miguel Keijzers, Peter Brouwer, Sam Vanhoutte, Steef-Jan Wiggers and Tim Lewis
